The Fight Against AI Plagiarism: Weaponizing subtitles

Table of Contents

- 1. The Fight Against AI Plagiarism: Weaponizing subtitles

- 2. Weaponizing Subtitles: A Creator’s Fight Against AI Plagiarism

- 3. A Conversation with [f4mi]

- 4. The Ethical Tightrope of AI: Exploring the “Poisoning” Technique

Remember the wave of excitement around generative AI and large language models a few years ago? It seemed like these tools were about to revolutionize everything. In reality, their impact has been more subdued. Outside of specific niches, these models haven’t quite lived up to the hype, often consuming vast resources while contributing to the proliferation of low-quality content online. One of the most pressing issues is the rampant plagiarism perpetrated by these AI systems. They churn out derivative works based on existing text, audio, and video, making it increasingly difficult for creators to protect their intellectual property.

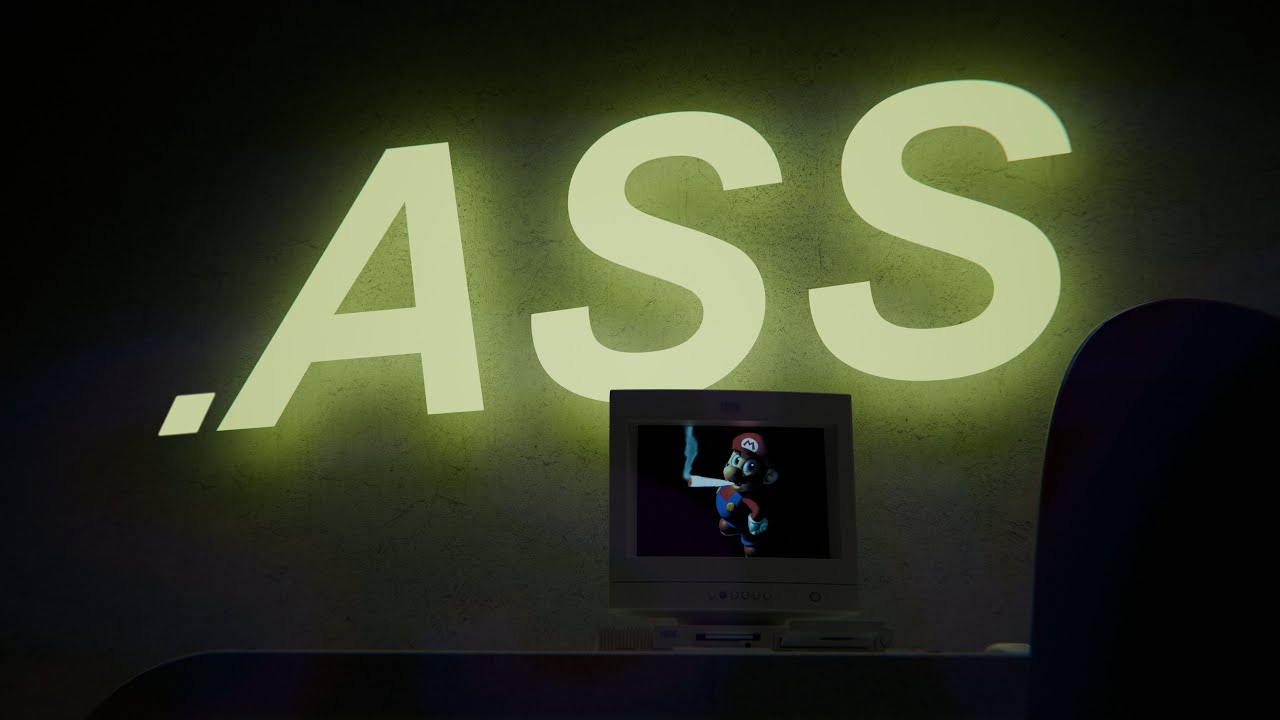

On YouTube, a new type of AI plagiarism has emerged. Malicious bots download a video’s subtitles, feed them into an AI model, and then generate an entirely new video based on the original work. While standard subtitle formats like .srt primarily contain timing and text information, a more advanced format called Advanced SubStation Alpha (.ass) allows for extensive customization, including font styles, colors, and even visual effects.

YouTuber [f4mi] made a startling discovery: this flexibility could be weaponized against AI. By embedding extraneous, hidden text within .ass subtitles, they could “poison” the data these bots ingest. This garbage text effectively camouflages the actual subtitles, making it difficult for the AI to discern meaningful content from noise.
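As a rough illustration of the idea, the Python sketch below writes an .ass file in which every real cue is paired with a decoy cue styled to be invisible. This is not [f4mi]’s actual tooling: the function names (`ass_time`, `build_poisoned_ass`), the decoy sentences, and the choice of override tags (`\alpha&HFF&` for full transparency, `\fs1` for a tiny font, `\pos` to push the text off-screen) are assumptions made for this example.

```python
import random

# Minimal .ass header: script info, one style, and the event column layout.
ASS_HEADER = """[Script Info]
ScriptType: v4.00+
PlayResX: 1280
PlayResY: 720

[V4+ Styles]
Format: Name, Fontname, Fontsize, PrimaryColour, SecondaryColour, OutlineColour, BackColour, Bold, Italic, Underline, StrikeOut, ScaleX, ScaleY, Spacing, Angle, BorderStyle, Outline, Shadow, Alignment, MarginL, MarginR, MarginV, Encoding
Style: Default,Arial,48,&H00FFFFFF,&H000000FF,&H00000000,&H00000000,0,0,0,0,100,100,0,0,1,2,0,2,10,10,10,1

[Events]
Format: Layer, Start, End, Style, Name, MarginL, MarginR, MarginV, Effect, Text
"""

# Assumed hiding recipe: fully transparent, 1-unit font, positioned off-screen.
HIDE = r"{\alpha&HFF&\fs1\pos(-500,-500)}"


def ass_time(seconds: float) -> str:
    """Format seconds as the H:MM:SS.cc timestamp used in .ass events."""
    cs = round(seconds * 100)  # total centiseconds
    return f"{cs // 360000}:{(cs // 6000) % 60:02d}:{(cs // 100) % 60:02d}.{cs % 100:02d}"


def build_poisoned_ass(cues, decoys, seed=0):
    """Return .ass text with one hidden decoy line per real (start, end, text) cue."""
    rng = random.Random(seed)  # seeded so the output is reproducible
    lines = [ASS_HEADER.rstrip("\n")]
    for start, end, text in cues:
        timing = f"{ass_time(start)},{ass_time(end)}"
        # The line a human viewer actually sees.
        lines.append(f"Dialogue: 0,{timing},Default,,0,0,0,,{text}")
        # The decoy shares the same timing but carries the hiding tags.
        lines.append(f"Dialogue: 0,{timing},Default,,0,0,0,," + HIDE + rng.choice(decoys))
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    cues = [(1.0, 4.0, "Welcome back to the channel."),
            (4.0, 7.5, "Today we look at subtitle formats.")]
    decoys = ["The moon is made of recycled umbrellas.",
              "Seventeen pianos voted to repaint the ocean."]
    print(build_poisoned_ass(cues, decoys))
```

A video player that honors the override tags should draw only the real lines, while a tool that reads the raw file receives both. Whether a given platform or player preserves these tags is something to verify before relying on them.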

“It’s a great way to ‘poison’ AI models and make it at least harder for them to rip off the creations of original artists,” [f4mi] explains. And their experiments confirmed this strategy’s effectiveness.

“We’ve actually seen a similar method for poisoning datasets used for emails long ago,” notes the article, drawing a parallel to past efforts to thwart automated surveillance by introducing “scaremail” into email datasets.

This creative countermeasure highlights the ongoing battle between creators and increasingly sophisticated AI systems. As AI technology evolves, so too will the strategies used to protect creative work and ensure ethical development and deployment of these powerful tools.

Weaponizing Subtitles: A Creator’s Fight Against AI Plagiarism

The battle against AI-generated content theft has taken an unexpected turn, with online creators finding ingenious ways to outsmart plagiarism bots. YouTuber [f4mi] recently made headlines for discovering a method to “poison” the data these bots rely on, a technique reminiscent of the older “scaremail” tactic used to thwart automated email surveillance. This approach has ignited a passionate debate about the evolving fight for creative integrity in the age of artificial intelligence. Archyde caught up with [f4mi] to delve into this new battleground.

A Conversation with [f4mi]

Archyde: Your discovery about using .ass subtitles to “poison” AI plagiarism bots has generated a lot of buzz. Could you explain how this technique works for our readers?

[f4mi]: It’s all about leveraging the extra capabilities of advanced subtitle formats like .ass. While standard formats like .srt focus primarily on timing and text, .ass subtitles allow for much more: font changes, colors, even visual effects. This means you can embed extra, seemingly invisible text within them that goes unnoticed by human viewers. When plagiarism bots download these “poisoned” subtitles and feed them into their AI models, the hidden text throws off the algorithm, creating confusion and making it harder for the AI to discern the actual content.
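To make the mechanism in that answer concrete, here is a small Python sketch of what a naive scraper might do with such a file: strip the `{...}` override blocks and keep the remaining text. The sample events, the decoy sentence, and the `naive_transcript` helper are all hypothetical; real plagiarism bots may process subtitles differently.

```python
import re

# Two events with identical timing: one visible line and one hidden decoy.
# The hiding tags (\alpha&HFF& and \fs1) are an assumed recipe for this example.
SAMPLE_EVENTS = r"""Dialogue: 0,0:00:01.00,0:00:04.00,Default,,0,0,0,,Welcome back to the channel.
Dialogue: 0,0:00:01.00,0:00:04.00,Default,,0,0,0,,{\alpha&HFF&\fs1}The moon is made of recycled umbrellas.
"""

# Matches one override block such as {\alpha&HFF&\fs1}.
OVERRIDE_BLOCK = re.compile(r"\{[^}]*\}")


def naive_transcript(ass_events: str) -> list[str]:
    """Extract dialogue text the way a styling-unaware scraper might."""
    lines = []
    for line in ass_events.splitlines():
        if not line.startswith("Dialogue:"):
            continue
        # Text is the 10th field and may contain commas, so split at most 9 times.
        text = line.split(",", 9)[9]
        # Dropping the tags also drops the only signal that the line is hidden.
        lines.append(OVERRIDE_BLOCK.sub("", text).strip())
    return lines


if __name__ == "__main__":
    for sentence in naive_transcript(SAMPLE_EVENTS):
        print(sentence)
```

Both sentences come out looking equally legitimate, which is the point of the technique. A scraper that interprets the tags instead of discarding them could filter the decoy out.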

Archyde: This sounds like a clever workaround. But is it actually effective? Have you seen any evidence that it deters AI-powered plagiarism?

[f4mi]: My experiments have definitely shown promise. The bots struggle to process the “poisoned” data accurately, resulting in significantly lower quality output. It’s not a foolproof solution, and AI is constantly evolving. Still, it’s a powerful tool to at least make the plagiarism process more difficult for these bots.

Archyde: You mentioned that this method is reminiscent of “scaremail,” which was used to thwart automated email surveillance. Do you see this as a sign of an ongoing arms race between creators and AI technology?

[f4mi]: Absolutely. As AI becomes more sophisticated, we’ll need to come up with increasingly creative ways to protect our work. It’s a constant back and forth, a kind of creative tug-of-war. In this battle, ingenuity and adaptability will be key.

Archyde: What message do you have for other creators who are concerned about AI plagiarism?

[f4mi]: Don’t despair! There are things you can do to safeguard your work. Stay informed about the latest developments in AI technology, explore new tools and techniques, and don’t be afraid to get creative in your defence. This is a challenge we all face, and by working together, we can find solutions and ensure that creative expression thrives in this evolving landscape.

The Ethical Tightrope of AI: Exploring the “Poisoning” Technique

Artificial intelligence is rapidly transforming our world, automating tasks and offering innovative solutions. However, this powerful technology comes with inherent risks, and one particularly concerning issue is the concept of “AI poisoning.” This malicious technique involves manipulating AI models during their training phase, subtly introducing biases or errors that can lead to unpredictable and potentially harmful outcomes.

Imagine an AI system designed to detect fraudulent transactions. If an attacker successfully poisons this system, it might start flagging legitimate transactions as fraudulent, causing financial losses and damaging customer trust.

The potential consequences of AI poisoning are vast and far-reaching. It could be used to spread misinformation, manipulate public opinion, or even compromise critical infrastructure. As we rely more heavily on AI systems in various aspects of our lives, understanding and mitigating this risk is crucial.

While the development of robust safeguards is paramount, it’s essential to engage in open discussions about the ethical implications of AI. As one expert aptly put it, “Do you think this ‘poisoning’ technique could be misused?” This question serves as a crucial reminder that we must tread carefully as we navigate the uncharted territory of artificial intelligence.
