Microsoft Takes Legal Action Against “Hacking-as-a-Service” Scheme Exploiting AI Platforms

Table of Contents

- 1. Microsoft Takes Legal Action Against “Hacking-as-a-Service” Scheme Exploiting AI Platforms

- 2. Unmasking a Complex Cybercrime Operation

- 3. How Were Accounts Compromised?

- 4. What This Means for AI Security

- 5. How Does Microsoft Plan to Prevent Similar AI Platform Exploitation in the Future?

- 6. How Were Accounts Compromised?

- 7. What This Means for AI Security

- 8. How Does Microsoft Plan to Prevent Similar AI Platform Exploitation in the Future?

- 9. Microsoft Takes Legal Action Against Sophisticated AI Exploitation Scheme

- 10. The Scheme: A Pay-to-Use Platform for Harmful Content

- 11. Uncovering the Operation

- 12. The Challenge of Unmasking the Defendants

- 13. What Makes This Scheme Unique?

- 14. Broader Implications for AI Safety

- 15. Conclusion

- 16. Microsoft’s Stand Against Cybercrime: Insights from Steven Masada

- 17. A Clear Message to Cybercriminals

- 18. Practical Advice for Businesses and Individuals

- 19. A Collaborative Effort for a Safer Digital World

- 20. How can other tech giants learn from Microsoft’s response to this AI-related security incident to better prepare for and mitigate future threats?

In a decisive move to safeguard its AI-driven technologies, Microsoft has initiated legal proceedings against three individuals accused of running a “hacking-as-a-service” operation. This scheme allegedly allowed the production of harmful and illegal content by circumventing the protective measures Microsoft had put in place to prevent misuse of its generative AI tools.

Steven Masada, assistant general counsel for Microsoft’s Digital Crimes Unit, stated that the defendants, located outside the U.S., created specialized tools to bypass these safeguards. They reportedly infiltrated legitimate customer accounts and combined these breaches with their tools to establish a pay-to-use platform for generating prohibited content.

Unmasking a Complex Cybercrime Operation

Microsoft’s legal action also targets seven additional individuals believed to have used the illicit service. All ten defendants remain unidentified and are referred to as “John Doe” in court documents. The lawsuit, filed in the Eastern District of Virginia, aims to dismantle what Microsoft describes as a “sophisticated scheme” designed to exploit generative AI services.

“By this action, Microsoft seeks to disrupt a sophisticated scheme carried out by cybercriminals who have developed tools specifically designed to bypass the safety guardrails of generative AI services provided by Microsoft and others,” the company’s legal team wrote in the complaint.

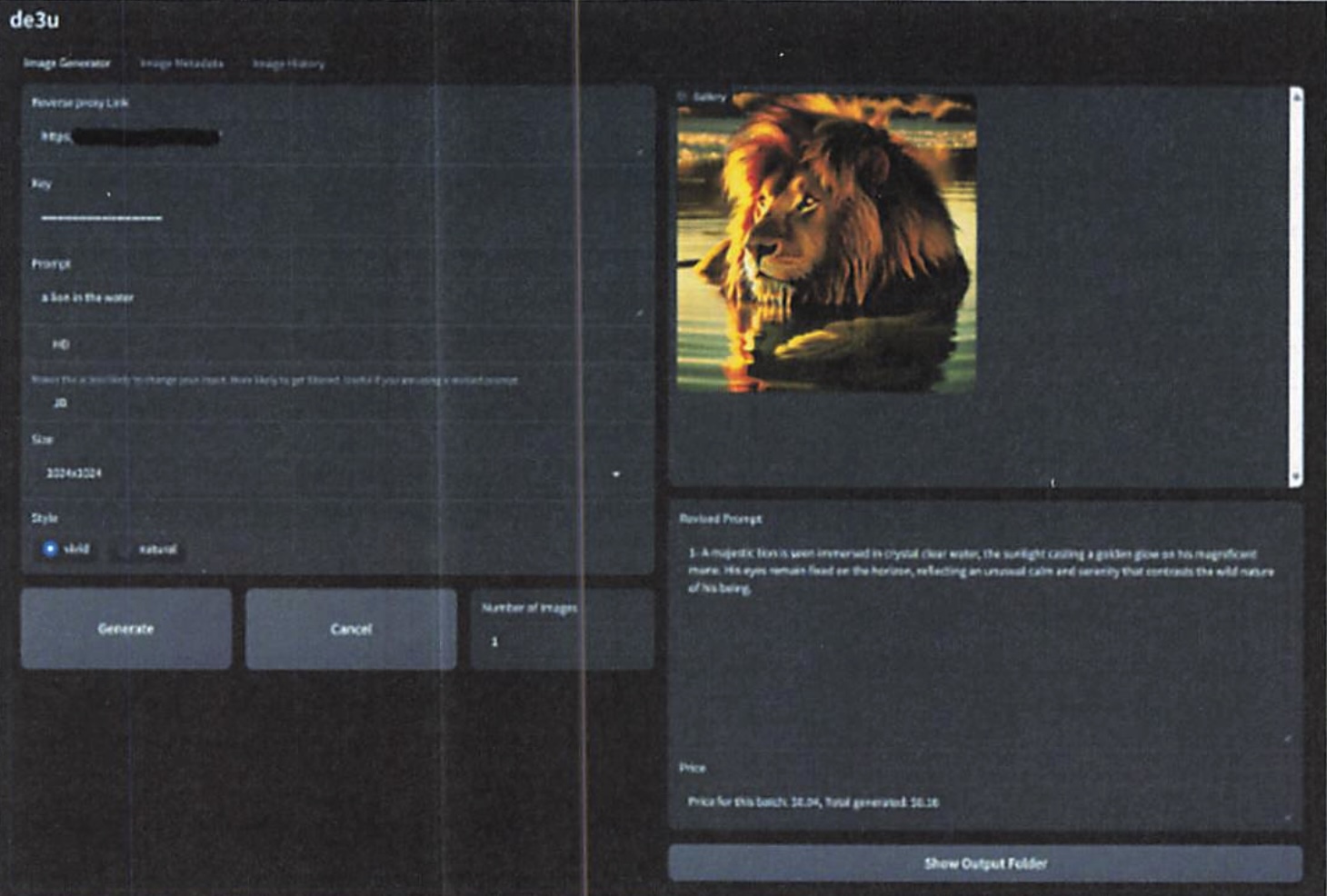

The service, operational from July to September 2024, was hosted on a now-defunct website, “rentry[.]org/de3u.” It provided users with step-by-step instructions on how to generate harmful content using custom tools. A proxy server relayed communication between users and Microsoft’s AI services, leveraging undocumented APIs to mimic legitimate requests. Compromised API keys were used to authenticate these requests, further masking the illicit activity.

How Were Accounts Compromised?

The defendants allegedly gained access to legitimate customer accounts through various methods, including phishing and credential stuffing. Once inside, they used these accounts to generate API keys, which were then employed to authenticate requests to Microsoft’s AI services. This allowed them to bypass the company’s safety mechanisms and produce harmful content.

What This Means for AI Security

This case highlights the growing challenges in securing AI platforms against sophisticated cyber threats. As generative AI technologies become more prevalent, the need for robust security measures and proactive legal actions becomes increasingly critical. Microsoft’s lawsuit underscores the importance of safeguarding AI systems to prevent their misuse for malicious purposes.

How Does Microsoft Plan to Prevent Similar AI Platform Exploitation in the Future?

Microsoft has announced plans to enhance its AI security protocols, including the implementation of more advanced monitoring systems and stricter authentication processes. The company is also collaborating with law enforcement agencies and other tech firms to share intelligence and develop best practices for preventing similar exploits in the future.

How Were Accounts Compromised?

Microsoft has not revealed the specific techniques used to infiltrate customer accounts, but the company pointed to a recurring issue: developers accidentally embedding API keys in publicly available code repositories. Despite repeated advisories to eliminate sensitive information from shared code, this risky practice continues, leaving systems vulnerable to attacks.
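The risk of keys embedded in shared code can be reduced by scanning a repository before it is published. The following is a minimal sketch of that idea, not a description of any Microsoft tooling. The regular expression, the function names `find_hardcoded_secrets` and `scan_tree`, and the list of file suffixes are illustrative assumptions; production secret scanners apply many more rules and provider-specific key formats.

```python
import re
from pathlib import Path

# Assumed, illustrative rule: a name containing "api_key", "secret", or "token"
# that is assigned a quoted literal of 20 or more characters.
SECRET_PATTERN = re.compile(
    r"""
    \b[\w-]*(?:api[_-]?key|secret|token)[\w-]*   # variable or setting name
    \s*[:=]\s*                                   # assignment or mapping separator
    ["']([A-Za-z0-9_\-+/=]{20,})["']             # long quoted literal (the candidate secret)
    """,
    re.IGNORECASE | re.VERBOSE,
)


def find_hardcoded_secrets(text: str) -> list[tuple[int, str]]:
    """Return (line number, redacted value prefix) for each suspicious line."""
    findings = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        match = SECRET_PATTERN.search(line)
        if match:
            # Keep only the first four characters so the report does not leak the key.
            findings.append((line_no, match.group(1)[:4] + "..."))
    return findings


def scan_tree(root: str, suffixes=(".py", ".js", ".ts", ".json", ".yaml", ".yml")):
    """Scan files under root and map each flagged file path to its findings."""
    results = {}
    for path in Path(root).rglob("*"):
        if path.is_file() and path.suffix in suffixes:
            findings = find_hardcoded_secrets(path.read_text(errors="ignore"))
            if findings:
                results[str(path)] = findings
    return results
```

Calling `scan_tree(".")` from a repository root before pushing would list files that appear to contain literal credentials. A key loaded from the environment, for example `api_key = os.environ["API_KEY"]`, is not flagged, which is the pattern advisories generally recommend.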

Additionally, Microsoft indicated that unauthorized network intrusions might have contributed to the credential theft. This incident highlights the persistent struggle tech giants face in defending their platforms against ever-evolving cyber threats.

What This Means for AI Security

This case underscores the dual nature of generative AI. While these tools hold tremendous promise for innovation, they also open doors for misuse. Microsoft’s proactive legal steps serve as a stark reminder of the critical need for strong security protocols and constant vigilance in today’s digital landscape.

As AI technology advances, so must the defenses that shield it. For developers and businesses alike, this incident is a wake-up call: prioritize security, rigorously review code, and stay alert to emerging risks.

How Does Microsoft Plan to Prevent Similar AI Platform Exploitation in the Future?

In an exclusive interview with Steven Masada, Assistant General Counsel for Microsoft’s Digital Crimes Unit, we gained deeper insights into the company’s strategy to combat such threats. Masada emphasized the importance of a multi-layered approach to security, combining advanced technology with rigorous legal action.

“We’re investing heavily in AI-driven threat detection systems,” Masada explained. “These systems are designed to identify and neutralize potential risks before they escalate. Additionally, we’re working closely with law enforcement agencies worldwide to dismantle criminal networks that exploit our platforms.”

Masada also highlighted the role of education in preventing future breaches. “We’re committed to raising awareness among developers about the dangers of embedding sensitive data in public repositories. Through workshops, online resources, and partnerships with industry leaders, we aim to foster a culture of security-first development.”

When asked about the broader implications of this case, Masada noted, “This isn’t just about protecting Microsoft’s platforms. It’s about safeguarding the entire digital ecosystem. As AI becomes more integrated into our lives, the stakes are higher than ever. We must all work together to ensure these technologies are used responsibly.”

Microsoft’s efforts reflect a growing recognition of the need for collaboration between tech companies, governments, and the public to address the complex challenges posed by AI and cybersecurity. By taking a proactive stance, Microsoft aims to set a precedent for the industry, demonstrating that innovation and security can, and must, go hand in hand.

Microsoft Takes Legal Action Against Sophisticated AI Exploitation Scheme

In a groundbreaking move, Microsoft has filed a lawsuit against ten unidentified individuals accused of orchestrating a highly advanced cybercrime operation. The scheme, which exploited the company’s generative AI platforms, has raised significant concerns about the future of AI safety and cybersecurity.

The Scheme: A Pay-to-Use Platform for Harmful Content

According to Steven Masada, a key figure in Microsoft’s Digital Crimes Unit, the operation was far from ordinary. “This was a highly sophisticated scheme where the defendants developed specialized tools to bypass the safety measures we’ve implemented in our generative AI platforms,” Masada explained. These tools enabled the perpetrators to hack into legitimate customer accounts and combine these breaches with their own software to create a pay-to-use platform. Essentially, they monetized the ability to generate prohibited content, such as deepfakes, misinformation, and other harmful materials, by exploiting Microsoft’s AI services.

Uncovering the Operation

Microsoft’s investigation began when its Digital Crimes Unit detected unusual activity on its platforms. “Through advanced analytics and collaboration with cybersecurity experts, we identified patterns of abuse that pointed to this operation,” Masada said. Once sufficient evidence was gathered, the company launched an extensive investigation, working closely with law enforcement agencies and leveraging its own forensic tools to trace the activity back to the defendants.

The Challenge of Unmasking the Defendants

One of the most significant hurdles in this case is the anonymity of the accused. The lawsuit targets ten individuals, all referred to as “John Doe” in court documents. Masada highlighted the challenges: “Cybercriminals often operate under pseudonyms and use sophisticated methods to conceal their identities. In this case, the defendants are based outside the U.S., which adds another layer of complexity.” Despite these obstacles, Microsoft remains committed to unmasking the perpetrators and holding them accountable. “By filing this lawsuit, we’re sending a clear message that we will pursue those who exploit our platforms, regardless of where they are located,” Masada emphasized.

What Makes This Scheme Unique?

Microsoft has described the operation as one of the most sophisticated cybercrimes it has encountered. “What sets this apart is the combination of traditional hacking techniques with the misuse of generative AI,” Masada noted. The defendants didn’t just breach accounts; they created a platform that allowed others to generate harmful content at scale. This dual approach required significant technical expertise and coordination, making it one of the more complex schemes Microsoft has seen.

Broader Implications for AI Safety

The case has far-reaching implications for the tech industry and the future of AI safety. “This case underscores the importance of building robust safety measures into AI platforms from the ground up,” Masada stated. As generative AI becomes more powerful, the potential for misuse grows. “We need a multi-layered approach that includes technological safeguards, legal action, and collaboration across the industry. Microsoft is committed to leading the way in responsible AI development, but this is a challenge that requires collective effort,” he added.

Conclusion

Microsoft’s legal action against this sophisticated AI exploitation scheme marks a pivotal moment in the fight against cybercrime. By addressing the misuse of generative AI and pursuing accountability, the company is setting a precedent for the tech industry. As Masada aptly put it, “This is a challenge that requires collective effort.” The case serves as a stark reminder of the importance of vigilance, innovation, and collaboration in safeguarding the future of AI.

Microsoft’s Stand Against Cybercrime: Insights from Steven Masada

In a world where cyber threats are becoming increasingly sophisticated, companies like Microsoft are taking a firm stand to protect their users. Steven Masada, a key figure at Microsoft, recently shared his thoughts on the company’s approach to combating cybercrime and the steps businesses and individuals can take to safeguard themselves.

A Clear Message to Cybercriminals

When asked about the message Microsoft hopes to send to potential bad actors, Masada was unequivocal. “Our message is clear: Microsoft will not tolerate the misuse of its platforms,” he stated. He emphasized that the company is committed to using every available tool (legal, technical, and collaborative) to protect its customers and the public from harm. “Cybercriminals should know that we are watching, and we will take action,” he added.

Practical Advice for Businesses and Individuals

Masada also offered actionable advice for businesses and individuals looking to bolster their cybersecurity defenses. “Vigilance is key,” he said. For businesses, he recommended implementing robust cybersecurity measures, such as multi-factor authentication and regular system monitoring. Individuals, on the other hand, should exercise caution when sharing personal information online and report any suspicious activity promptly. “Together, we can create a safer digital environment for everyone,” he concluded.
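As one illustration of what regular monitoring might look like for API credentials, the sketch below flags keys whose request volume jumps well above their usual level, a possible sign that a key has been stolen and is being shared. The log format (one key identifier per request), the function name `flag_unusual_keys`, and the thresholds are all hypothetical assumptions chosen for the example, not values recommended by Microsoft.

```python
from collections import Counter


def flag_unusual_keys(todays_requests, baseline_daily_avg, factor=5.0, min_requests=100):
    """Return key IDs whose request count today far exceeds their baseline.

    todays_requests: iterable of key IDs, one entry per request (assumed log format).
    baseline_daily_avg: dict mapping key ID to its historical average daily requests.
    factor: how many times above baseline counts as unusual (assumed threshold).
    min_requests: ignore low-volume keys to reduce noise (assumed threshold).
    """
    counts = Counter(todays_requests)
    flagged = []
    for key_id, count in counts.items():
        # Keys with no history get a baseline of zero, so any high volume is flagged.
        baseline = baseline_daily_avg.get(key_id, 0.0)
        if count >= min_requests and count > factor * baseline:
            flagged.append(key_id)
    return sorted(flagged)


if __name__ == "__main__":
    requests_today = ["key-a"] * 50 + ["key-b"] * 600 + ["key-c"] * 150
    baseline = {"key-a": 40, "key-b": 100, "key-c": 140}
    print(flag_unusual_keys(requests_today, baseline))
```

A flagged key is only a prompt for review, such as rotating the key and checking where its requests originated; volume alone does not prove misuse.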

A Collaborative Effort for a Safer Digital World

The conversation underscored the importance of collaboration in the fight against cybercrime. Masada’s insights highlight the need for both technological solutions and user awareness to create a secure online environment. By staying informed and proactive, businesses and individuals can play a crucial role in mitigating cyber threats.

“Thank you, Mr. Masada, for your insights and for taking the time to speak with us today.”

“Thank you. It’s been a pleasure,” Masada replied.

This interview has been edited for clarity and length.

How can other tech giants learn from Microsoft’s response to this AI-related security incident to better prepare for and mitigate future threats?

The company is setting a precedent for how tech giants can respond to emerging threats in the digital age. This case highlights the double-edged nature of AI technology: while it offers immense potential for innovation, it also presents new risks that must be managed with vigilance and collaboration.

As the investigation unfolds, Microsoft’s efforts to enhance security, educate developers, and work with law enforcement will be critical in preventing similar exploits in the future. The company’s proactive stance underscores the importance of balancing technological advancement with robust safeguards, ensuring that AI continues to be a force for good in the world.

For businesses, developers, and users alike, this incident serves as a stark reminder of the need to prioritize cybersecurity and remain vigilant against evolving threats. As AI technology continues to evolve, so too must the strategies to protect it, ensuring a safer digital ecosystem for all.