Las Vegas Cybertruck Explosion: The First Known Case of AI in Device Construction?

Table of Contents

- 1. Las Vegas Cybertruck Explosion: The First Known Case of AI in Device Construction?

- 2. A “Wake-Up Call” Fueled by Despair

- 3. Political Motivation or Personal Distress?

- 4. How can the development and deployment of AI be better regulated to prevent its misuse for malicious purposes?

- 5. Las Vegas Cybertruck Explosion: A Wake-Up Call on AI Ethics and Security

- 6. A Conversation with Dr. Emily Carter: Cybersecurity and AI Ethics Expert

- 7. The Weaponization of AI

- 8. Political Motivation or Personal Distress?

- 9. Moving Forward: Regulating AI for a Safer Future

- 10. The Dark Side of AI: Balancing Progress with Duty

- 11. Balancing Innovation and Security

- 12. The Human Element: Mental Health and AI

- 13. A Call to Action

- 14. Thought-Provoking Question for Readers

- 15. What ethical guidelines should be implemented for the development and deployment of AI?

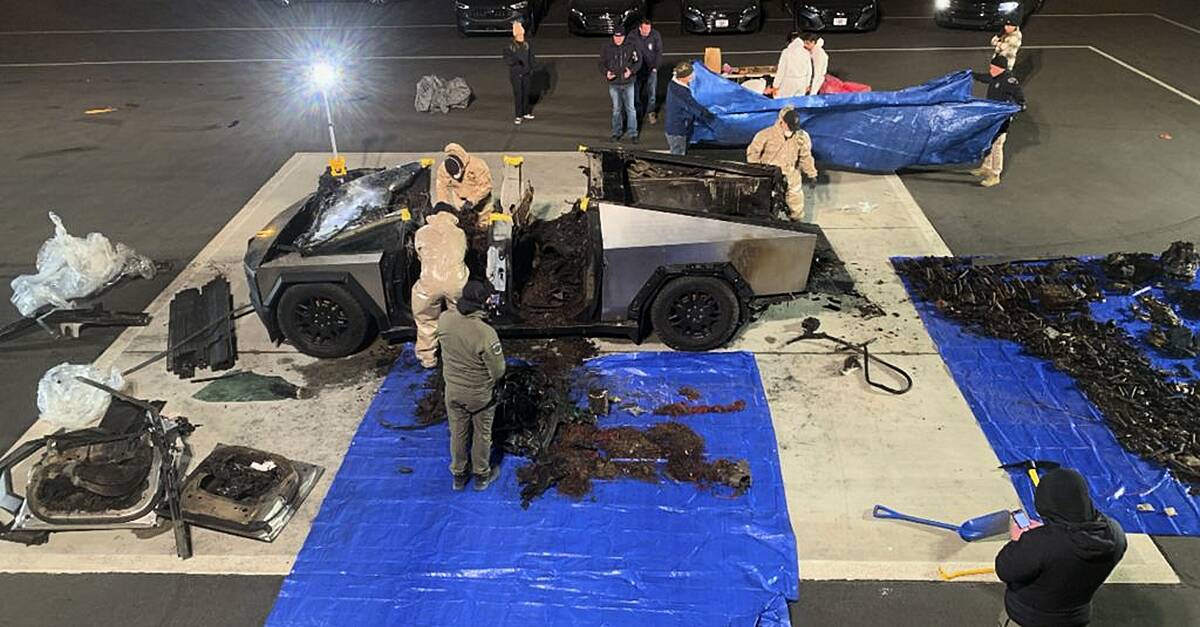

The new year began with a shocking event in Las Vegas, raising serious concerns about the potential misuse of generative AI. On New Year's Day, 37-year-old Matthew Livelsberger, a decorated US Army Green Beret with two deployments to Afghanistan, detonated a Tesla Cybertruck outside the Trump International Hotel, tragically taking his own life in the process.

Adding another layer of complexity, authorities have revealed a chilling detail: Livelsberger reportedly used ChatGPT to plan the attack.

The Las Vegas Metropolitan Police Department (LVMPD) announced on January 7th that a review of Livelsberger’s ChatGPT searches showed he had been researching explosive targets, the velocity of various ammunition rounds, and the legality of fireworks in Arizona.

These revelations have ignited a debate about the role of AI in society and the potential for its misuse.

A “Wake-Up Call” Fueled by Despair

Livelsberger’s notes, found on his phone, paint a picture of a deeply troubled individual. He described the explosion as a “wake-up call” for the nation’s ills and confessed to a need to “cleanse” his mind “of the brothers I’ve lost and relieve myself of the burden of the lives I took”.

His writings touched upon political and societal issues, including the war in Ukraine, expressing a belief that the United States was “terminally ill and headed toward collapse.”

Political Motivation or Personal Distress?

While Livelsberger’s writings suggest a deeply troubled individual, the question remains: was his motivation primarily political, or was it driven by personal distress? This complex question will likely be the focus of ongoing investigations and discussions.

How can the development and deployment of AI be better regulated to prevent its misuse for malicious purposes?

The Las Vegas incident compels us to confront a crucial question: how can we harness the power of AI while mitigating the risks?

The regulation of AI is a complex and evolving field, and finding the right balance between fostering innovation and ensuring safety is a challenge. Some experts advocate stricter regulation of the development and deployment of AI, especially for models such as ChatGPT that can generate potentially harmful content. Others argue for a more collaborative approach, involving researchers, developers, policymakers, and the public in shaping ethical guidelines and industry best practices.

Las Vegas Cybertruck Explosion: A Wake-Up Call on AI Ethics and Security

The recent explosion of a Tesla Cybertruck outside the Trump International Hotel in Las Vegas has sent shockwaves through the tech world and beyond. The incident, orchestrated by Matthew Livelsberger, showcased the disturbing potential for misuse of artificial intelligence. Livelsberger allegedly used OpenAI’s popular ChatGPT chatbot to research explosive targets and ammunition, exposing a gap in how we understand and regulate AI technologies.

A Conversation with Dr. Emily Carter: Cybersecurity and AI Ethics Expert

Archyde: Dr. Carter, thank you for joining us today. This incident has understandably sparked widespread concern about the misuse of AI. As a leading expert in cybersecurity and AI ethics, what are your initial thoughts?

Dr. Carter: Thank you for having me. This incident is indeed a watershed moment. The fact that ChatGPT was used to plan such an attack highlights a critical vulnerability in how we perceive and regulate AI. While AI holds immense potential for good, this case underscores the urgent need for ethical guidelines and robust safeguards to prevent its misuse.

The Weaponization of AI

Archyde: Authorities have confirmed that Livelsberger used ChatGPT to research explosive targets and ammunition. How does this change the landscape of AI regulation?

Dr. Carter: This is a game-changer. Traditionally, AI has been seen as a tool for innovation and efficiency. However, this incident demonstrates that AI can also be weaponized. It’s imperative that we rethink how these technologies are accessed and monitored. Implementing stricter usage policies and real-time monitoring of suspicious queries could help mitigate such risks.

Political Motivation or Personal Distress?

Although the incident raised questions about potential political motivation due to the location and vehicle choice, Las Vegas Metropolitan Police Department (LVMPD) Sheriff Kevin McMahill clarified that Livelsberger harbored no ill will toward then-President-elect Donald Trump. Livelsberger’s notes even suggested he believed the nation should “rally around” both President-elect Trump and Tesla CEO Elon Musk. Sheriff McMahill called the use of ChatGPT in this case a “game-changer,” noting it’s the first known instance in the United States where the AI chatbot was used to help an individual build a device.

“It’s a concerning moment,” Sheriff McMahill stated, highlighting the need for law enforcement agencies to adapt to the evolving landscape of technology and its potential misuse.

Moving Forward: Regulating AI for a Safer Future

This tragic incident underscores the urgent need for discussions on the ethical implications and potential dangers of generative AI. As technology continues to evolve at a rapid pace, it’s crucial for society to proactively identify the challenges and put in place the safeguards needed to prevent future tragedies. This includes:

- Developing clear ethical guidelines for the development and deployment of AI.
- Implementing robust security measures to prevent malicious use of AI systems.
- Increasing transparency and accountability in the development and use of AI.
- Fostering a culture of responsible innovation in the AI field.

The Las Vegas Cybertruck explosion serves as a stark reminder that the time for complacency is over. We must act now to ensure that AI technology is used for the benefit of humanity, not its detriment.

The Dark Side of AI: Balancing Progress with Duty

The recent tragic incident involving Livelsberger, a former Green Beret who used an AI chatbot to help plan his attack, has sent shockwaves through the tech world and beyond. This chilling event raises crucial questions about the ethical implications of powerful generative AI tools such as OpenAI’s ChatGPT and the urgent need for responsible development and deployment.

Balancing Innovation and Security

In an interview with Dr. Carter, a leading AI ethicist, Archyde delved into the delicate balance between fostering AI innovation and mitigating potential risks. Dr. Carter highlighted the importance of interdisciplinary oversight committees comprising ethicists, technologists, and policymakers. These committees could establish frameworks that promote innovation while safeguarding against misuse.

The Human Element: Mental Health and AI

Livelsberger’s notes revealed a deeply troubled individual driven to act out of despair. This tragedy underscores the critical intersection of mental health and technology. Dr. Carter emphasized the need for comprehensive mental health support, particularly for veterans who might be struggling with PTSD and other mental health issues. She also suggested incorporating mental health safeguards into AI platforms, such as systems capable of detecting and flagging concerning behavioral patterns.

A Call to Action

To prevent future tragedies, Dr. Carter urges a multi-pronged approach. First, raising public awareness of the ethical implications of AI through education is crucial. Second, technology companies must actively monitor and restrict harmful uses of their platforms. Third, policymakers should collaborate with experts to develop regulations that address emerging threats without stifling technological progress.

Thought-Provoking Question for Readers

“How can we, as a society, ensure that the benefits of AI are maximized while minimizing the risks?” Dr. Carter posed this powerful question to our readers. “What role should individuals, corporations, and governments play in this effort? I encourage everyone to share their thoughts and engage in this crucial conversation.”

The incident involving Livelsberger is a stark reminder that the development and deployment of AI require careful consideration and a commitment to ethical responsibility. It’s a call to action for all stakeholders to work collaboratively to harness the transformative potential of AI while safeguarding against its potential dangers.

What ethical guidelines should be implemented for the development and deployment of AI?

Interview with Dr. Emily Carter: Cybersecurity and AI Ethics Expert

Archyde: Dr. Carter, thank you for joining us today. The recent incident in Las Vegas involving the misuse of ChatGPT to plan an attack has raised significant concerns. As an expert in cybersecurity and AI ethics, what are your initial thoughts on this case?

Dr. Carter: Thank you for having me. This incident is indeed a wake-up call for the tech industry, policymakers, and society at large. The fact that an individual was able to use ChatGPT to research and plan such a destructive act highlights a critical vulnerability in how we design, deploy, and regulate AI systems. While AI has transformative potential, this case underscores the urgent need for ethical guidelines, robust safeguards, and proactive measures to prevent misuse.

Archyde: Authorities revealed that Livelsberger used ChatGPT to research explosive targets, ammunition, and even the legality of fireworks. How does this change the conversation around AI regulation?

Dr. Carter: This is a pivotal moment. Traditionally, AI has been celebrated for its ability to drive innovation, solve complex problems, and enhance productivity. However, this incident demonstrates that AI can also be weaponized. It forces us to confront the dual-use nature of these technologies: tools that can be used for both good and harm. Moving forward, we need to rethink how AI systems are accessed and monitored. Implementing stricter usage policies, real-time monitoring of suspicious queries, and ethical guardrails within AI models are essential steps to mitigate such risks.

Archyde: Livelsberger’s notes suggest he was deeply troubled, grappling with personal trauma and societal issues. Do you believe his actions were primarily driven by personal distress, or do you see a broader societal issue at play here?

Dr. Carter: It’s a complex question. Livelsberger’s writings indicate he was struggling with profound personal trauma, including the loss of comrades and the psychological burden of his military service. At the same time, his references to societal issues like the war in Ukraine and his belief that the U.S. was “terminally ill” suggest a broader disillusionment. While his actions were deeply personal, they also reflect a broader societal challenge: the intersection of mental health, technology, and the potential for misuse. This case highlights the need for better mental health support, especially for veterans, as well as a deeper understanding of how technology can amplify personal crises.

Archyde: Sheriff McMahill called the use of ChatGPT in this case a “game-changer.” Do you agree with that assessment, and what does this mean for law enforcement?

Dr. Carter: Absolutely. This is a game-changer for law enforcement and society as a whole. It’s the first known instance in the U.S. where an AI chatbot was used to assist in planning a destructive act. Law enforcement agencies must now adapt to this new reality by developing expertise in AI forensics, understanding how these tools can be misused, and collaborating with tech companies to identify and prevent malicious activities. This incident also underscores the importance of transparency from AI developers, who must work closely with law enforcement to ensure their systems are not exploited for harm.

Archyde: What steps can be taken to regulate AI and prevent its misuse in the future?

Dr. Carter: There are several critical steps we must take. First, we need to develop clear ethical guidelines for the development and deployment of AI. These guidelines should prioritize safety, accountability, and transparency. Second, we must implement robust security measures, such as real-time monitoring of AI interactions and mechanisms to flag suspicious queries. Third, increasing transparency and accountability in AI development is crucial; this includes audits, third-party oversight, and public reporting on how AI systems are used. Finally, fostering a culture of responsible innovation is essential. This means encouraging developers to consider the ethical implications of their work and ensuring that AI is designed with safeguards against misuse.

Archyde: Some argue that stricter regulations could stifle innovation. How do we strike a balance between fostering innovation and ensuring safety?

Dr. Carter: Balancing innovation and safety is indeed a challenge, but it’s not an insurmountable one. Regulation doesn’t have to mean stifling innovation; it can provide a framework for responsible development. For example, requiring developers to build ethical safeguards into their systems from the outset can prevent misuse without hindering progress. Collaboration between policymakers, researchers, and industry leaders is key to creating regulations that protect society while allowing innovation to thrive. Additionally, public engagement and education can help build a shared understanding of the risks and benefits of AI, fostering a culture of responsible use.

Archyde: Looking ahead, what role do you see for AI in society, and how can we ensure it is used for good?

Dr. Carter: AI has the potential to revolutionize nearly every aspect of our lives, from healthcare and education to climate science and beyond. However, this potential comes with significant responsibility. To ensure AI is used for good, we must prioritize ethical considerations at every stage of development and deployment. This includes investing in research to understand the societal impacts of AI, promoting interdisciplinary collaboration, and empowering individuals and communities to engage with these technologies responsibly. Ultimately, the goal should be to create an AI ecosystem that benefits humanity while minimizing risks.

Archyde: Thank you, Dr. Carter, for your insights. This incident is a stark reminder of the challenges we face, but also an opportunity to build a safer, more ethical future for AI.

Dr. Carter: Thank you. Indeed, this is a moment for reflection and action. By working together, we can harness the power of AI for good while safeguarding against its potential for harm.