The Dawn of a New Computing Era: Extreme Parallel Computing

Table of Contents

- 1. The Dawn of a New Computing Era: Extreme Parallel Computing

- 2. Reimagining the Tech Stack for Extreme Parallel Computing

- 3. The Road Ahead: Opportunities and Challenges

- 4. The Evolution of Computing: Accelerating Intelligence Across the Stack

- 5. System-Level Software: The Backbone of Modern Computing

- 6. The Data Layer: From Historical Analytics to Real-Time Intelligence

- 7. Applications: Bridging the Physical and Digital Worlds

- 8. Semiconductor Stock Performance: A Five-Year Overview

- 9. The AI Semiconductor Race: Nvidia Leads, But Contenders Are Rising

- 10. Nvidia: The AI Powerhouse

- 11. Broadcom: The Silent Contender

- 12. AMD: Challenging the Status Quo

- 13. Intel: A Struggling Giant

- 14. Qualcomm: Betting on the Edge

- 15. The Market’s Recognition of Semiconductors

- 16. The Competitive Landscape: Nvidia and Its Challengers

- 17. AWS and Marvell: A New Era in AI Hardware with Trainium and Inferentia

- 18. Why AWS’s Custom Silicon Strategy Matters

- 19. Key Takeaways

- 20. The Broader Implications for the AI Hardware Market

- 21. Looking Ahead

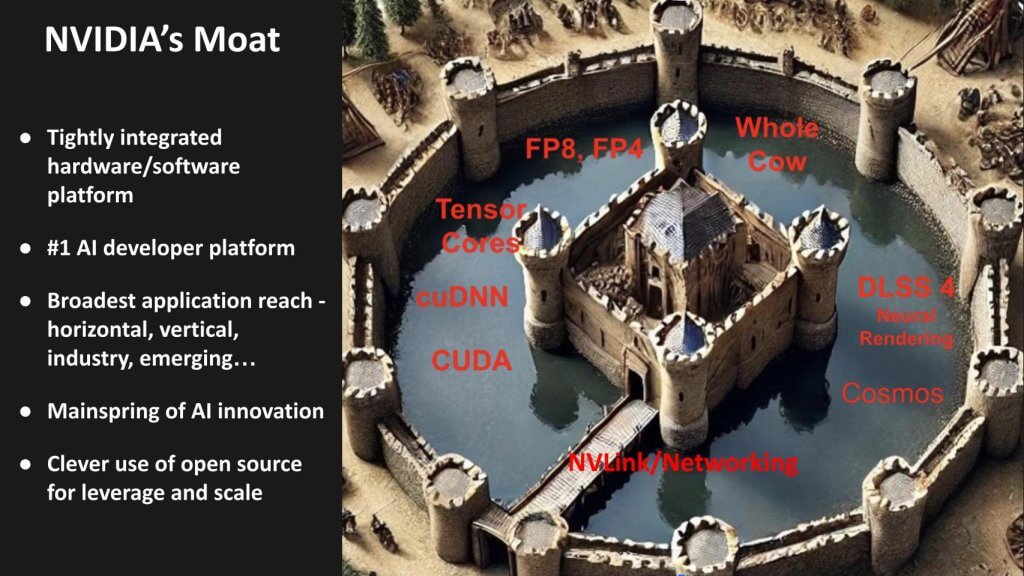

- 22. How NVIDIA is Building a $1.4 Trillion Market Through Innovation

- 23. The Hardware Edge: Advanced GPUs and Strategic Efficiency

- 24. Networking Dominance: The Mellanox Advantage

- 25. Emerging Competition: A Fragmented Landscape

- 26. The Ecosystem Advantage: A Multifaceted Moat

- 27. Looking Ahead: Challenges and Opportunities

- 28. How Nvidia’s Software Ecosystem Builds an Unbreakable Competitive Edge

- 29. The Power of Integration: Hardware Meets Software

- 30. Exploring Nvidia’s Software Stack

- 31. The Role of Partnerships in Nvidia’s Success

- 32. Looking Ahead: Nvidia’s Future in a Competitive Landscape

- 33. Nvidia’s AI Ecosystem: A Deep Dive into Developer Tools and Emerging Trends

- 34. NIMS: Managing GPU Clusters at Scale

- 35. NeMo: Accelerating Natural Language Processing

- 36. Omniverse: Revolutionizing 3D Design and Simulation

- 37. Cosmos: Scaling AI Model Training

- 38. Developer Libraries and Toolkits: The Backbone of Innovation

- 39. AI PCs: The Next Frontier in Consumer Technology

- 40. Market Analysis: Data Center Spending and the Rise of EPC

- 41. The Future of Data Centers: Accelerated Computing and Nvidia’s Dominance

- 42. The Rise of Accelerated Computing

- 43. Nvidia’s Commanding Position

- 44. Driving Forces Behind the Growth

- 45. A Trillion-Dollar Market in the Making

- 46. Challenges and Risks

- 47. Conclusion

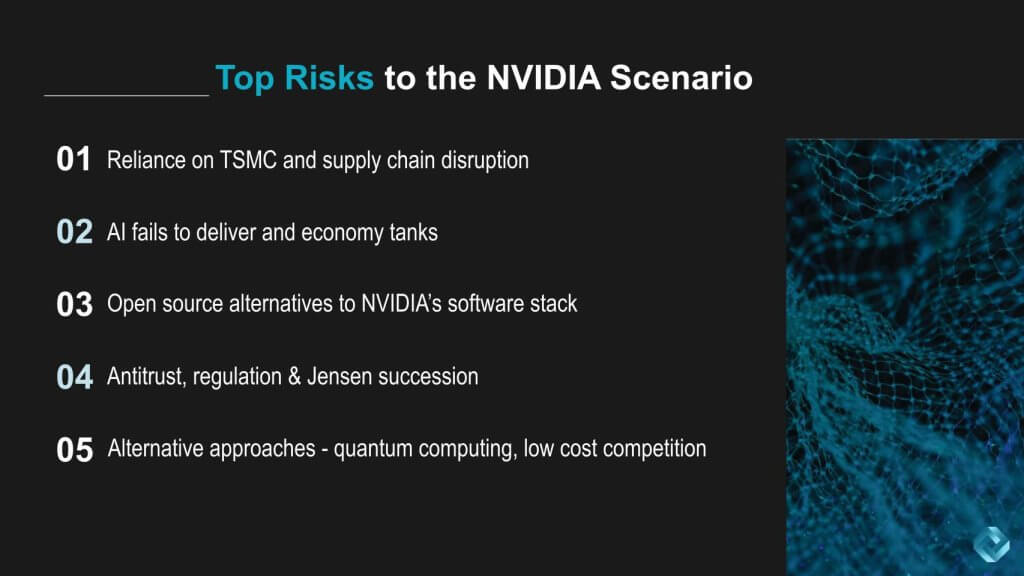

- 48. The Challenges Ahead for NVIDIA: Risks in a Booming Market

- 49. 1. Dependence on TSMC and Supply Chain Vulnerabilities

- 50. 2. The AI Hype Cycle and Economic Uncertainty

- 51. 3. The Rise of Open-Source Alternatives

- 52. 4. Regulatory Pressures and Leadership Succession

- 53. 5. Emerging Technologies and Market Disruption

- 54. Final Thoughts


We stand at the precipice of a monumental shift in the world of technology. Over the next decade, the data center industry, valued at over a trillion dollars, is set to undergo a radical transformation. This change is driven by what experts are calling extreme parallel computing (EPC), also known as accelerated computing. While artificial intelligence (AI) is the primary catalyst, the impact of this shift extends far beyond AI, influencing every layer of the technology stack.

At the forefront of this revolution is Nvidia Corp., which has developed an extensive platform integrating hardware, software, systems engineering, and a vast ecosystem. Nvidia is positioned to lead this transformation for the next 10 to 20 years, but the forces reshaping the industry are far greater than any single company. This new paradigm is about rethinking computing from the ground up—from chips and data center infrastructure to distributed systems, software stacks, and edge robotics.

In this exploration, we’ll delve into how extreme parallel computing is reshaping the tech landscape, the competitive dynamics among semiconductor giants, and the challenges Nvidia faces. We’ll also examine the rise of “AI PCs” and how the data center market could balloon to $1.7 trillion by 2035. Finally, we’ll weigh the opportunities and risks that come with this transformative era.

Reimagining the Tech Stack for Extreme Parallel Computing

Every layer of the technology stack—compute, storage, networking, and software—is being re-engineered to support AI-driven workloads and extreme parallelism. The shift from general-purpose x86 CPUs to distributed clusters of GPUs and specialized accelerators is happening faster than many predicted. Here’s a closer look at how this transformation is unfolding across key areas:

Compute: The Rise of Specialized Accelerators

For decades, x86 architectures ruled the computing world. Today, however, general-purpose processors are being overshadowed by specialized accelerators, with GPUs leading the charge. AI workloads like large language models, natural language processing, and real-time analytics demand unprecedented levels of concurrency.

- Extreme Parallelism: Conventional multicore CPUs are hitting their limits, but GPUs, with thousands of cores, offer a solution. While GPUs may be more expensive upfront, their massively parallel design makes them far more cost-effective on a per-unit-of-compute basis.

- AI at Scale: Building systems for highly parallel processors requires advanced engineering. Large GPU clusters rely on high-bandwidth memory (HBM) and ultra-fast interconnects like InfiniBand or Ethernet. This combination of hardware and software is unlocking new possibilities for AI-driven applications.

Storage: The Unsung Hero of AI

While often overlooked, storage plays a critical role in AI. Data is the lifeblood of neural networks, and AI workloads demand high-performance storage solutions.

- Anticipatory Data Staging: Next-gen storage systems predict which data will be needed by AI models, ensuring it’s pre-positioned near processors to minimize latency and overcome physical constraints.

- Distributed File and Object Stores: As AI models grow, so does the need for massive storage capacity. Petabyte-scale systems are becoming the standard, enabling seamless data access for large-scale AI operations.
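Anticipatory staging is essentially prefetching. The next piece of data is read while the current one is being processed, so accelerators are not left waiting on storage.

The Python sketch below is a minimal illustration under simple assumptions, not how any particular storage product works:

- A hypothetical `load_shard` function stands in for a slow storage read.
- A background thread keeps up to `depth` shards staged in a bounded queue.
- It reads ahead in a fixed order rather than predicting access patterns.

```python
import queue
import threading
import time
from typing import Callable, Iterator, List


def prefetching_reader(shard_ids: List[int],
                       load_shard: Callable[[int], bytes],
                       depth: int = 2) -> Iterator[bytes]:
    """Yield shards in order while a background thread stages up to `depth` ahead."""
    staged: "queue.Queue[object]" = queue.Queue(maxsize=depth)
    done = object()  # sentinel marking the end of the stream

    def producer() -> None:
        try:
            for sid in shard_ids:
                staged.put(load_shard(sid))  # blocks once `depth` shards are waiting
        except Exception as exc:  # forward loader errors to the consumer
            staged.put(exc)
        staged.put(done)

    threading.Thread(target=producer, daemon=True).start()
    while True:
        item = staged.get()
        if item is done:
            return
        if isinstance(item, Exception):
            raise item
        yield item  # the consumer works on this shard while the next one loads


def load_shard(sid: int) -> bytes:
    """Hypothetical stand-in for a slow read from storage."""
    time.sleep(0.01)
    return f"shard-{sid}".encode()


if __name__ == "__main__":
    for data in prefetching_reader(list(range(5)), load_shard):
        print(data.decode())
```

The bounded queue caps how far the loader runs ahead, which keeps memory use predictable. A production system would also decide which data to stage, not just read ahead sequentially.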

Networking: The Backbone of Parallel Computing

High-speed networking is essential for connecting distributed systems and enabling real-time data processing. As AI workloads become more complex, the demand for faster and more reliable networks is skyrocketing.

- Low-Latency Interconnects: Technologies like InfiniBand and ultra-fast Ethernet are critical for reducing communication delays between GPUs and other accelerators.

- Scalable Architectures: As data centers grow, so does the need for scalable networking solutions that can handle increasing traffic without compromising performance.
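The classic latency-bandwidth (alpha-beta) cost model shows why low latency matters, not just raw bandwidth. In this model, sending n bytes costs T = α + n / β:

- α is the fixed per-message latency.
- β is the link bandwidth.

The sketch assumes illustrative values: 1 microsecond of latency and a 400 Gb/s link. These are not measurements of any specific InfiniBand or Ethernet product.

```python
def transfer_time_us(message_bytes: int, latency_us: float, bandwidth_gbps: float) -> float:
    """Alpha-beta model: fixed per-message latency plus serialization time.

    bandwidth_gbps is in gigabits per second; the result is in microseconds.
    """
    bytes_per_us = bandwidth_gbps * 1e9 / 8 / 1e6  # Gb/s -> bytes per microsecond
    return latency_us + message_bytes / bytes_per_us


def latency_share(message_bytes: int, latency_us: float, bandwidth_gbps: float) -> float:
    """Fraction of the total transfer time spent on fixed latency."""
    return latency_us / transfer_time_us(message_bytes, latency_us, bandwidth_gbps)


if __name__ == "__main__":
    # Illustrative values only: 1 us latency, 400 Gb/s link.
    for size in (4 * 1024, 1024 ** 3):
        t = transfer_time_us(size, latency_us=1.0, bandwidth_gbps=400)
        share = latency_share(size, latency_us=1.0, bandwidth_gbps=400)
        print(f"{size:>13,} bytes: {t:12,.2f} us, latency share {share:.1%}")
```

Under these assumptions, a 4 KiB message spends over 90% of its time on fixed latency. A 1 GiB transfer, by contrast, is almost entirely bandwidth-bound. Tightly coupled GPU workloads exchange many small messages, which is why interconnect latency gets so much attention.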

Software: The Glue That Holds It All Together

Software is the linchpin of extreme parallel computing, enabling seamless integration across hardware and systems. From AI frameworks to distributed computing platforms, software is driving innovation at every level.

- AI Frameworks: Tools like TensorFlow and PyTorch are essential for developing and deploying AI models at scale.

- Distributed Computing Platforms: Platforms like Kubernetes and Apache Spark are enabling organizations to manage complex workloads across distributed systems.

The Road Ahead: Opportunities and Challenges

The rise of extreme parallel computing presents immense opportunities, but it’s not without its challenges. As the industry evolves, companies must navigate technical, economic, and regulatory hurdles to fully realize the potential of this new era.

On the upside, the demand for AI-driven solutions is expected to fuel unprecedented growth in the data center market, potentially reaching $1.7 trillion by 2035. However, this growth also brings risks, including increased competition, supply chain disruptions, and the need for substantial investment in infrastructure and talent.

As we look to the future, one thing is clear: extreme parallel computing is not just a technological shift—it’s a paradigm change that will redefine how we think about computing, data, and innovation.

The Evolution of Computing: Accelerating Intelligence Across the Stack

As the demands of accelerated computing continue to grow, the entire technology stack, from operating systems to application frameworks, must adapt. This transformation is not just about speed; it’s about rethinking how systems handle concurrency, data, and intelligence. At the heart of this shift are GPUs and other accelerators, which are now central to architectural design.

System-Level Software: The Backbone of Modern Computing

Operating systems, middleware, libraries, and compilers are undergoing rapid evolution to support ultra-parallel workloads. Modern applications, which often bridge real-time analytics and historical data, require system-level software capable of managing unprecedented levels of concurrency. GPU-aware operating systems and runtimes are essential for maximizing the potential of accelerators, ensuring that workloads are processed efficiently.

The Data Layer: From Historical Analytics to Real-Time Intelligence

Data is the lifeblood of AI, and the data stack is becoming increasingly intelligent. The shift from historical analytics to real-time engines is enabling organizations to create digital representations that include people, places, things, and processes. This transformation is supported by knowledge graphs, unified metadata repositories, and agent control frameworks, which harmonize data and ensure seamless integration across systems.

Applications: Bridging the Physical and Digital Worlds

Intelligent applications are emerging as powerful tools for unifying and harmonizing data. These applications not only have real-time access to business logic and process knowledge but are also evolving from single-agent to multi-agent systems. By learning from human reasoning traces, these systems are becoming increasingly adept at understanding human language and automating workflows. Moreover, the concept of digital twins is gaining traction across industries, offering real-time representations of businesses and processes.

Key takeaway: Extreme parallel computing represents a wholesale rethinking of the technology stack: compute, storage, networking, and especially the operating system layer. It places GPUs and other accelerators at the center of the architectural design.

Semiconductor Stock Performance: A Five-Year Overview

The AI Semiconductor Race: Nvidia Leads, But Contenders Are Rising

The semiconductor industry is undergoing a seismic shift, driven by the explosive growth of artificial intelligence. At the forefront of this transformation is Nvidia, which has emerged as the undisputed leader in AI-driven computing. However, the race is far from over, with several key players vying for a piece of the lucrative AI chip market. Here’s a deep dive into the competitive landscape and what it means for the future of AI innovation.

Nvidia: The AI Powerhouse

Nvidia’s dominance in the AI space is nothing short of remarkable. The company’s GPUs have become the backbone of AI infrastructure, powering everything from data centers to autonomous vehicles. With a staggering 65% operating margin, Nvidia has not only captured the attention of investors but also set a high bar for competitors. Its ability to deliver cutting-edge hardware and software solutions has solidified its position as the most valuable public company in the world.

Broadcom: The Silent Contender

While Nvidia grabs headlines, Broadcom has quietly established itself as a formidable player in the AI semiconductor space. Specializing in data center infrastructure, Broadcom provides critical intellectual property to tech giants like Google, Meta, and ByteDance. Its expertise in custom ASICs and next-generation networking makes it a strong contender in the AI race, particularly for enterprises looking to optimize their data center operations.

AMD: Challenging the Status Quo

Advanced Micro Devices (AMD) has been making waves by outperforming Intel in the x86 market. However, with the x86 segment on the decline, AMD is doubling down on AI. The company is leveraging its successful x86 playbook to take on Nvidia’s GPUs. While Nvidia’s competitive moat and robust software stack present significant challenges, AMD’s aggressive push into AI could shake up the market, especially if Nvidia stumbles.

Intel: A Struggling Giant

Intel, once the undisputed leader in semiconductors, is facing significant headwinds. Its foundry strategy has struggled to gain traction, with insufficient volume to compete with Taiwan Semiconductor Manufacturing Co. (TSMC). Many analysts believe Intel will be forced to divest its foundry business this year, allowing it to focus on its design capabilities. This move could reignite innovation and position Intel as a viable player in the AI space.

Qualcomm: Betting on the Edge

Qualcomm remains a dominant force in mobile and edge computing, with a strong focus on device-centric AI. While it may not pose a direct threat to Nvidia in the data center, Qualcomm’s expansion into robotics and distributed edge AI could bring it into occasional competition with Nvidia. Its expertise in power-efficient chips makes it a key player in the growing edge AI market.

The Market’s Recognition of Semiconductors

The market has come to recognize that semiconductors are the foundation of future AI capabilities. Companies that can meet the growing demand for accelerated computing are being rewarded with premium valuations. This year, the “haves,” led by Nvidia, Broadcom, and AMD, are outperforming, while the “have-nots,” particularly Intel, are struggling to keep pace.

The Competitive Landscape: Nvidia and Its Challengers

Nvidia’s success has attracted a flood of competitors, both established players and new entrants. However, the sheer size of the AI market and Nvidia’s substantial lead mean that near-term competition is unlikely to dent its dominance. That said, each challenger brings a unique approach to the table, ensuring that the AI semiconductor race remains dynamic and unpredictable.

As the AI revolution continues to unfold, the semiconductor industry will remain at the heart of innovation. While Nvidia currently leads the pack, the rise of challengers like Broadcom, AMD, and Qualcomm ensures that the competition will remain fierce, driving further advancements in AI technology.

Broadcom and Google: A Powerhouse Partnership

Broadcom and Google are shaping the future of AI hardware through their collaboration on custom chips, particularly Google’s Tensor Processing Units (TPUs). Broadcom’s cutting-edge intellectual property in SerDes, optics, and networking complements Google’s TPU v4, which is widely regarded as a strong contender in the AI space. Together, they present a formidable alternative to Nvidia’s dominance.

- Future Market Potential: Google’s TPUs are available only inside Google’s own infrastructure, whether for its internal services or rented through Google Cloud. There’s speculation that the tech giant might eventually commercialize them more broadly. For now, however, the ecosystem remains confined to Google, limiting broader market adoption.

Broadcom and Meta: Driving AI Innovation

Broadcom’s partnership with Meta is another critical piece of the AI puzzle. Both Google and Meta have demonstrated significant returns on investment in AI, particularly in consumer advertising. This success contrasts with the challenges many enterprises face in achieving similar results.

Both companies are championing Ethernet as the networking standard of choice, with Broadcom playing a pivotal role in the Ultra Ethernet Consortium. Broadcom’s expertise in networking across XPUs and clusters solidifies its position as a key player in AI silicon, second only to Nvidia.

AMD’s AI Ambitions: A Challenger in the Making

AMD is making bold strides in the AI space, leveraging its legacy in x86 architecture to develop competitive AI accelerators. While the company has a strong foothold in gaming and high-performance computing (HPC), its AI ambitions face a significant hurdle: Nvidia’s entrenched CUDA ecosystem.

- Dual Perspectives: Some industry experts believe AMD can carve out a meaningful share of the AI market, while others argue that matching Nvidia’s hardware, software, and developer ecosystem will be a steep uphill battle.

AMD’s recent acquisition of ZT Systems underscores its commitment to understanding end-to-end AI system requirements. This move positions AMD as a viable alternative for inference workloads, though it’s expected to capture only a single-digit share of the massive AI market.

AWS and Marvell: A New Era in AI Hardware with Trainium and Inferentia

Amazon Web Services (AWS) has been quietly revolutionizing the enterprise tech landscape with its custom silicon strategy. Building on the success of its Graviton processors, AWS is now applying a similar approach to AI accelerators. It is partnering with Marvell to develop Trainium for AI training and Inferentia for inference workloads. This move marks a significant shift in the AI hardware market, offering a cost-effective alternative to Nvidia’s dominant position.

“Amazon, their whole thing at re:Invent, if you really talk to them when they announce Trainium 2 and our whole post about it and our analysis of it is supply chain-wise… you squint your eyes, this looks like an Amazon Basics TPU, right? It’s decent, right? But it’s really cheap, A; and B, it gives you the most HBM capacity per dollar and most HBM memory bandwidth per dollar of any chip on the market. And therefore it actually makes sense for certain applications to use. And so this is like a real shift. Like, hey, we maybe can’t design as well as Nvidia, but we can put more memory on the package.”

— Dylan Patel

Dylan Patel’s analysis highlights AWS’s focus on delivering value through cost optimization. While Nvidia remains the gold standard for performance and developer familiarity, AWS’s Trainium and Inferentia chips are designed to cater to specific workloads where cost efficiency and memory bandwidth are critical. This strategy allows AWS to carve out a niche within its ecosystem, appealing to customers who prioritize affordability over cutting-edge performance.

Why AWS’s Custom Silicon Strategy Matters

AWS’s investment in custom silicon is one of the most underrated success stories in enterprise technology. The acquisition of Annapurna Labs laid the foundation for this strategy, enabling AWS to develop Graviton. These Arm-based processors have gained significant traction as an alternative to x86 servers. Now, with Trainium and Inferentia, AWS is extending this approach to AI workloads, offering a compelling alternative to Nvidia’s GPUs.

While AWS may not match Nvidia’s design prowess, its ability to deliver high memory capacity and bandwidth at a lower cost makes Trainium and Inferentia attractive for certain applications. This is particularly relevant for businesses running large-scale AI models that require substantial memory resources without the premium price tag.

Key Takeaways

- Cost vs. Performance: AWS’s custom silicon provides a cost-effective solution for workloads that don’t require Nvidia’s premium capabilities. This could lead to a migration of certain AI tasks to AWS’s platform, especially in cost-sensitive environments.

- AWS’s Infrastructure Advantage: AWS has been building its own AI infrastructure for years, reducing its reliance on Nvidia’s full-stack solutions. By offering its own networking and software infrastructure, AWS can lower costs for customers while improving its margins.

The Broader Implications for the AI Hardware Market

AWS’s foray into custom AI hardware is part of a broader trend among tech giants to reduce dependence on third-party vendors. By developing in-house solutions, companies like AWS can better control costs, optimize performance, and tailor their offerings to specific customer needs. This shift is reshaping the competitive landscape, challenging Nvidia’s dominance and creating new opportunities for innovation.

While Nvidia will likely remain the preferred choice for complex, large-scale AI deployments, AWS’s Trainium and Inferentia chips offer a viable alternative for businesses seeking cost efficiency. This dual approach, leveraging both third-party and in-house solutions, positions AWS as a versatile player in the AI hardware market.

Looking Ahead

As AWS continues to refine its custom silicon strategy, the adoption of Trainium and Inferentia will be a key metric to watch. While it may not achieve the same level of penetration as Graviton, the potential for significant adoption within AWS’s ecosystem is undeniable. For businesses exploring AI solutions, AWS’s offerings provide a compelling blend of affordability and performance, making them a strong contender in the evolving AI hardware landscape.

How NVIDIA is Building a $1.4 Trillion Market Through Innovation

Over the past two decades, NVIDIA has transformed itself from a graphics card manufacturer into a powerhouse driving the future of artificial intelligence, gaming, and high-performance computing. Its integrated ecosystem, combining cutting-edge hardware, software, and networking solutions, has positioned the company as a leader in a rapidly expanding market. Here’s how NVIDIA is shaping a $1.4 trillion industry and maintaining its competitive edge.

The Hardware Edge: Advanced GPUs and Strategic Efficiency

At the core of NVIDIA’s success lies its ability to innovate in hardware. The company’s GPUs leverage state-of-the-art process nodes, integrating high-bandwidth memory (HBM) and specialized tensor cores that deliver unparalleled AI performance. What sets NVIDIA apart is its ability to release new GPU iterations every 12 to 18 months, staying ahead of competitors in a fast-evolving industry.

NVIDIA’s “whole cow” strategy ensures that every usable silicon die finds a purpose, whether in data centers, consumer GPUs, or automotive applications. This approach maximizes production efficiency, keeps yields high, and maintains healthy profit margins. By optimizing every aspect of its hardware, NVIDIA has built a robust foundation for its ecosystem.
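The “whole cow” approach resembles what chipmakers generally call binning. Each die is tested and assigned to the most demanding product it can still support, instead of being discarded.

The Python sketch below illustrates the idea only. The tier names, streaming-multiprocessor (SM) counts, and clock thresholds are hypothetical and do not reflect NVIDIA’s actual product rules.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Die:
    die_id: int
    working_sms: int       # streaming multiprocessors that passed testing
    max_stable_ghz: float  # highest clock the die sustains reliably


# Hypothetical tiers, ordered from most to least demanding: (name, min SMs, min GHz).
TIERS = [
    ("datacenter", 128, 1.8),
    ("consumer-high", 112, 1.6),
    ("consumer-mid", 80, 1.4),
    ("embedded", 48, 1.0),
]


def bin_die(die: Die) -> str:
    """Return the most demanding tier the die qualifies for, or 'scrap'."""
    for name, min_sms, min_ghz in TIERS:
        if die.working_sms >= min_sms and die.max_stable_ghz >= min_ghz:
            return name
    return "scrap"


def bin_wafer(dies: List[Die]) -> Dict[str, int]:
    """Count how many dies from a wafer land in each tier."""
    counts: Dict[str, int] = {}
    for die in dies:
        tier = bin_die(die)
        counts[tier] = counts.get(tier, 0) + 1
    return counts


if __name__ == "__main__":
    wafer = [Die(0, 132, 1.9), Die(1, 120, 1.7), Die(2, 90, 1.5),
             Die(3, 60, 1.2), Die(4, 30, 1.1)]
    print(bin_wafer(wafer))
```

Every die that clears the lowest threshold becomes sellable product. As a result, effective yield is higher than if only perfect dies were kept.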

Networking Dominance: The Mellanox Advantage

NVIDIA’s acquisition of Mellanox Technologies, announced in 2019 and completed in 2020, marked a pivotal moment in its growth trajectory. This move gave NVIDIA control over InfiniBand, a high-speed networking technology critical for AI clusters and data centers. The integration of Mellanox’s ConnectX and BlueField products has enabled NVIDIA to offer end-to-end solutions. This has accelerated its time-to-market and solidified its position as a one-stop shop for AI infrastructure.

This networking advantage allows NVIDIA to deliver comprehensive systems that seamlessly integrate GPUs, networking hardware, and software, creating a cohesive ecosystem that competitors struggle to replicate.

Emerging Competition: A Fragmented Landscape

While NVIDIA dominates the AI and GPU markets, emerging players are carving out niches of their own. Companies like Cerebras Systems, SambaNova Systems, Tenstorrent, and Graphcore are introducing specialized AI architectures designed to challenge NVIDIA’s supremacy. Meanwhile, China is investing heavily in developing homegrown GPU alternatives to reduce reliance on foreign technology.

However, these competitors face significant hurdles. Software compatibility, developer adoption, and the sheer scale of NVIDIA’s ecosystem make it tough for new entrants to gain traction. As one industry expert noted, “Though competition is strong, none of these players alone threatens Nvidia’s long-term dominance — unless Nvidia makes significant missteps. The market’s size is vast enough that multiple winners can thrive.”

The Ecosystem Advantage: A Multifaceted Moat

NVIDIA’s true strength lies in its ability to combine hardware, software, and networking into a unified ecosystem. Over nearly 20 years, the company has systematically built a platform that is both broad and deep, catering to industries ranging from gaming and automotive to healthcare and scientific research.

This ecosystem creates a formidable moat, making it challenging for competitors to replicate NVIDIA’s success. By continuously innovating and integrating its offerings, NVIDIA ensures that its customers remain locked into its ecosystem, driving long-term growth and market dominance.

Looking Ahead: Challenges and Opportunities

As NVIDIA continues to expand its reach, it faces challenges from both established players and emerging startups. Companies like Microsoft and Qualcomm are leveraging their strengths in software and mobile technology to compete in specific segments. However, NVIDIA’s ability to innovate and adapt ensures that it remains at the forefront of the industry.

The future of AI and high-performance computing is vast, and NVIDIA is well-positioned to capitalize on this growth. By maintaining its focus on hardware innovation, ecosystem integration, and strategic acquisitions, NVIDIA is not just shaping the market — it’s defining it.

Key takeaway: NVIDIA’s dominance is built on a foundation of relentless innovation, strategic acquisitions, and a deeply integrated ecosystem. While competition is intensifying, the company’s multifaceted moat ensures its position as a market leader for years to come.

How Nvidia’s Software Ecosystem Builds an Unbreakable Competitive Edge

Nvidia’s dominance in the tech industry isn’t just about its cutting-edge hardware. The company’s software ecosystem, built over nearly two decades, has created a competitive moat that’s nearly impossible to replicate. From its foundational CUDA platform to its expansive suite of developer tools, Nvidia has woven a tightly integrated system that keeps developers and enterprises locked into its ecosystem.

The Power of Integration: Hardware Meets Software

At the heart of Nvidia’s success is its ability to seamlessly integrate hardware and software. While competitors focus on individual components, Nvidia’s strength lies in its holistic approach. Its software stack, anchored by CUDA, extends far beyond basic GPU programming, offering developers a comprehensive toolkit for AI, high-performance computing (HPC), and graphics.

Key takeaway: Nvidia’s advantage does not hinge on chips alone. Its integration of hardware and software — underpinned by a vast ecosystem — forms a fortress-like moat that is difficult to replicate.

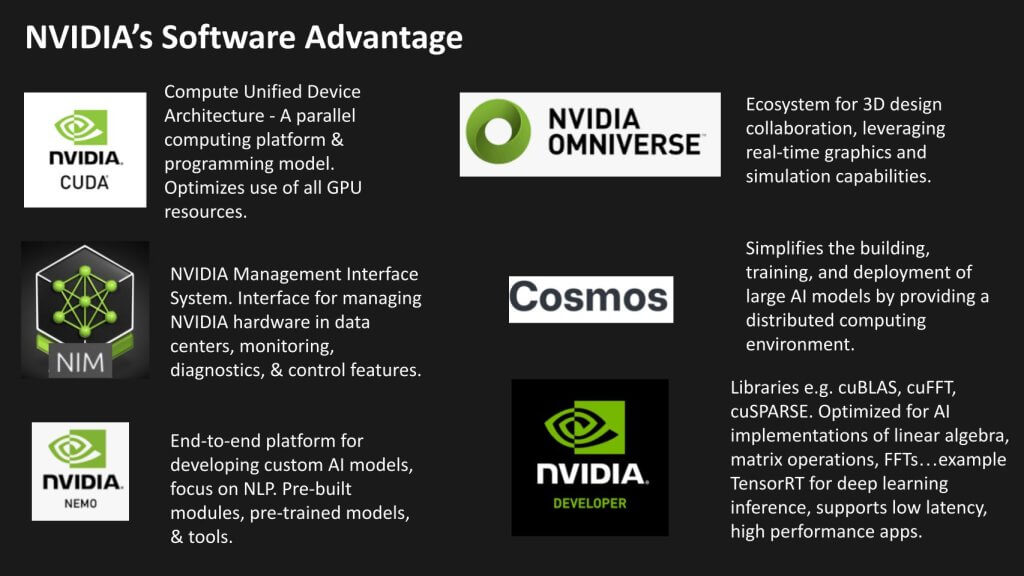

Exploring Nvidia’s Software Stack

Nvidia’s software ecosystem is vast, with tools and frameworks designed to meet the needs of developers across industries. Here’s a closer look at the critical layers of its software stack:

CUDA: The Foundation of Nvidia’s Ecosystem

Compute Unified Device Architecture (CUDA) is Nvidia’s flagship parallel computing platform. It simplifies GPU programming by abstracting hardware complexities, enabling developers to write applications in languages like C/C++, Fortran, and Python. CUDA optimizes workload scheduling, making it indispensable for AI, HPC, and graphics applications.
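A CUDA kernel is written from the point of view of a single thread. Each thread derives the element it works on from the built-in variables `blockIdx`, `blockDim`, and `threadIdx`.

The Python sketch below only emulates that indexing scheme sequentially for a vector addition. It does not touch a GPU, and the function names are illustrative. The equivalent CUDA C kernel is shown in the comment at the top.

```python
from typing import List

# Equivalent CUDA C kernel, for reference:
#   __global__ void add(const float* a, const float* b, float* out, int n) {
#       int i = blockIdx.x * blockDim.x + threadIdx.x;
#       if (i < n) out[i] = a[i] + b[i];
#   }


def vector_add_kernel(block_idx: int, block_dim: int, thread_idx: int,
                      a: List[float], b: List[float], out: List[float]) -> None:
    """Work done by one emulated thread."""
    i = block_idx * block_dim + thread_idx  # global thread index
    if i < len(out):                        # guard: the last block may be partly empty
        out[i] = a[i] + b[i]


def launch(a: List[float], b: List[float], block_dim: int = 256) -> List[float]:
    """Emulate a 1-D launch add<<<grid_dim, block_dim>>>(...) by looping over threads."""
    n = len(a)
    out = [0.0] * n
    grid_dim = (n + block_dim - 1) // block_dim  # ceil(n / block_dim) blocks
    for block_idx in range(grid_dim):            # on a GPU, blocks and threads run in parallel
        for thread_idx in range(block_dim):
            vector_add_kernel(block_idx, block_dim, thread_idx, a, b, out)
    return out


if __name__ == "__main__":
    print(launch([1.0, 2.0, 3.0], [10.0, 20.0, 30.0], block_dim=2))
```

The bounds check matters because the grid is rounded up to whole blocks. With 1,000 elements and 256 threads per block, 1,024 threads are launched and the last 24 must do nothing.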

NIMS: Streamlining GPU Management

Nvidia Management Interface Systems (NIMS) provide tools for monitoring and managing GPU resources. This layer ensures efficient utilization of hardware, particularly in multi-GPU setups, which are critical for scaling AI and machine learning workloads.

NeMo and Omniverse: Expanding the Ecosystem

NeMo, Nvidia’s framework for conversational AI, and Omniverse, its platform for 3D simulation and collaboration, showcase the company’s ability to innovate beyond traditional GPU applications. These tools cater to niche markets, further solidifying Nvidia’s position as a one-stop solution for developers.

Developer Libraries and Toolkits

Nvidia’s extensive library of developer tools, including cuDNN, TensorRT, and NCCL, accelerates AI and machine learning workflows. These libraries are optimized for Nvidia hardware, ensuring peak performance and reducing development time.
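NCCL’s core job is collective communication, such as the all-reduce that sums gradients across GPUs so every GPU ends up with the same result. One well-known algorithm NCCL can use is the ring all-reduce. It runs a reduce-scatter phase followed by an all-gather phase, each taking N − 1 steps across N ranks. As a result, each rank sends roughly 2(N − 1)/N times its buffer size, regardless of how many GPUs join.

The pure-Python simulation below illustrates that data movement only. It is a sketch, not NCCL’s implementation or API.

```python
from typing import Callable, List


def ring_allreduce(buffers: List[List[float]]) -> List[List[float]]:
    """Simulate a ring all-reduce (sum) over len(buffers) ranks; returns new buffers."""
    n = len(buffers)
    length = len(buffers[0])
    data = [list(buf) for buf in buffers]             # copy so inputs are untouched
    bounds = [c * length // n for c in range(n + 1)]  # chunk c is [bounds[c], bounds[c+1])

    def chunk(c: int) -> range:
        return range(bounds[c], bounds[c + 1])

    def ring_step(chunk_of_rank: Callable[[int], int], accumulate: bool) -> None:
        # Every rank sends one chunk to its right-hand neighbour "simultaneously":
        # snapshot all payloads first, then deliver them.
        sends = []
        for r in range(n):
            c = chunk_of_rank(r)
            sends.append((r, c, [data[r][i] for i in chunk(c)]))
        for r, c, payload in sends:
            dst = (r + 1) % n
            for value, i in zip(payload, chunk(c)):
                data[dst][i] = data[dst][i] + value if accumulate else value

    # Phase 1, reduce-scatter: at step s, rank r sends chunk (r - s) mod n and the
    # receiver adds it in. Afterwards rank r holds the full sum of chunk (r + 1) mod n.
    for s in range(n - 1):
        ring_step(lambda r: (r - s) % n, accumulate=True)

    # Phase 2, all-gather: at step s, rank r forwards chunk (r + 1 - s) mod n and the
    # receiver overwrites its copy, so every rank ends with every reduced chunk.
    for s in range(n - 1):
        ring_step(lambda r: (r + 1 - s) % n, accumulate=False)

    return data


if __name__ == "__main__":
    ranks = [[1, 2, 3, 4], [10, 20, 30, 40], [100, 200, 300, 400]]
    for r, buf in enumerate(ring_allreduce(ranks)):
        print(f"rank {r}: {buf}")
```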

The Role of Partnerships in Nvidia’s Success

Nvidia’s ecosystem isn’t just built on technology; it’s also fueled by strategic partnerships. As Jensen Huang, Nvidia’s CEO, often emphasizes, the company’s network of alliances with major tech suppliers and cloud providers has been instrumental in its growth. These partnerships create a feedback loop, driving innovation and reinforcing Nvidia’s market position.

Looking Ahead: Nvidia’s Future in a Competitive Landscape

As the tech industry evolves, Nvidia continues to adapt. The rise of Ultra Ethernet and other networking standards may pose challenges, but Nvidia’s ability to optimize its stack for emerging technologies ensures its resilience. By maintaining its focus on integration and ecosystem development, Nvidia is well-positioned to remain a leader in AI, HPC, and beyond.

Key takeaway: Nvidia’s success lies in its ability to combine cutting-edge hardware with a robust software ecosystem, creating a competitive moat that’s nearly impossible to breach.

Nvidia’s AI Ecosystem: A Deep Dive into Developer Tools and Emerging Trends

Nvidia has long been synonymous with cutting-edge GPU technology, but its true strength lies in its expansive software ecosystem. Beyond hardware, the company has built a robust suite of tools, libraries, and frameworks that empower developers to push the boundaries of AI, machine learning, and high-performance computing. Let’s explore the key components of Nvidia’s software stack and how they are shaping the future of AI.

NIMS: Managing GPU Clusters at Scale

At the infrastructure level, Nvidia’s NIMS (Nvidia Infrastructure Management System) plays a pivotal role in managing large-scale GPU clusters. Designed for enterprises running advanced AI workloads, NIMS handles monitoring, diagnostics, workload scheduling, and hardware health. While not a traditional developer tool, its importance cannot be overstated for organizations leveraging thousands of GPUs to power their AI initiatives.
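The interface of NIMS is not described here. As a stand-in, this sketch uses nvidia-smi, NVIDIA’s standard command-line management tool, which can report per-GPU metrics through its `--query-gpu` and `--format=csv,noheader,nounits` options.

The parsing and health check below are a minimal illustration of cluster-level monitoring. The temperature and memory thresholds are hypothetical, and a real fleet manager would collect these metrics from many nodes.

```python
import math
import subprocess
from dataclasses import dataclass
from typing import List

# Fields accepted by `nvidia-smi --query-gpu`; with `nounits`, memory is in MiB.
QUERY = "index,utilization.gpu,memory.used,memory.total,temperature.gpu"


@dataclass
class GpuStatus:
    index: int
    util_pct: float
    mem_used_mib: float
    mem_total_mib: float
    temp_c: float


def _num(field: str) -> float:
    """Parse a numeric field; placeholders such as '[N/A]' become NaN."""
    return math.nan if field.startswith("[") else float(field)


def parse_smi_csv(text: str) -> List[GpuStatus]:
    """Parse `--format=csv,noheader,nounits` output, one GPU per line."""
    gpus = []
    for line in text.strip().splitlines():
        idx, util, used, total, temp = (f.strip() for f in line.split(","))
        gpus.append(GpuStatus(int(idx), _num(util), _num(used), _num(total), _num(temp)))
    return gpus


def flag_unhealthy(gpus: List[GpuStatus], max_temp_c: float = 85.0,
                   max_mem_frac: float = 0.95) -> List[int]:
    """Return indices of GPUs over the (hypothetical) temperature or memory limits."""
    return [g.index for g in gpus
            if g.temp_c > max_temp_c or g.mem_used_mib / g.mem_total_mib > max_mem_frac]


def query_local_gpus() -> List[GpuStatus]:
    """Run nvidia-smi on this machine (requires an NVIDIA driver install)."""
    out = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY}", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_smi_csv(out)
```

Unsupported metrics are parsed as NaN. Because comparisons with NaN are false, such GPUs are not flagged rather than crashing the check.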

NeMo: Accelerating Natural Language Processing

For developers working on natural language processing (NLP) and large language models, Nvidia’s NeMo framework is a game-changer. NeMo offers pre-built modules, pre-trained models, and tools for fine-tuning and exporting models. This end-to-end solution significantly reduces the time required to develop and deploy NLP applications, making it a favorite among businesses aiming to harness the power of language AI.

Omniverse: Revolutionizing 3D Design and Simulation

Originally designed for 3D design collaboration and real-time visualization, Nvidia’s Omniverse has evolved into a versatile platform for robotics, digital twins, and advanced physics-based simulations. By leveraging CUDA for graphical rendering, Omniverse combines real-time graphics with AI-driven simulation capabilities, enabling industries to create immersive, high-fidelity environments for design and testing.

Cosmos: Scaling AI Model Training

Nvidia’s Cosmos framework addresses the challenges of distributed computing for AI model training. By integrating with the company’s networking solutions and HPC frameworks, Cosmos simplifies the process of scaling compute resources horizontally. This allows researchers and developers to unify hardware resources, making large-scale AI training more efficient and accessible.
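Whatever the tooling, scaling training horizontally typically relies on data parallelism:

- Each worker computes gradients on its own shard of the batch.
- The gradients are averaged, in practice with an all-reduce over the network.
- Every worker applies the same update.

The sketch below shows this for a one-parameter linear model y = w·x in plain Python. It is a generic illustration with hypothetical data, not Cosmos’s API.

```python
from typing import List, Sequence, Tuple

Example = Tuple[float, float]  # (x, y) pair


def local_gradient(w: float, shard: Sequence[Example]) -> float:
    """Gradient of the mean of 0.5 * (w*x - y)**2 over one worker's shard."""
    return sum((w * x - y) * x for x, y in shard) / len(shard)


def data_parallel_step(w: float, batch: List[Example], num_workers: int, lr: float) -> float:
    """One synchronous data-parallel SGD step with gradient averaging."""
    if len(batch) % num_workers:
        # Equal shards keep the averaged gradient identical to the full-batch gradient.
        raise ValueError("batch size must be divisible by num_workers")
    shards = [batch[i::num_workers] for i in range(num_workers)]  # round-robin split
    grads = [local_gradient(w, shard) for shard in shards]        # one per GPU in practice
    avg_grad = sum(grads) / num_workers                           # stands in for an all-reduce
    return w - lr * avg_grad  # every worker applies the same update


if __name__ == "__main__":
    data = [(float(x), 3.0 * x) for x in range(1, 9)]  # hypothetical: true w is 3
    w = 0.0
    for _ in range(200):
        w = data_parallel_step(w, data, num_workers=4, lr=0.01)
    print(f"learned w = {w:.4f}")
```

With equal-sized shards, the averaged gradient equals the full-batch gradient. Adding workers therefore changes how fast each step runs, not the result.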

Developer Libraries and Toolkits: The Backbone of Innovation

Nvidia’s extensive library of specialized tools is a cornerstone of its ecosystem. From neural network operations and linear algebra to device drivers and image processing, these libraries are meticulously optimized for GPU acceleration. This level of tuning not only enhances performance but also fosters loyalty among developers who invest time mastering these tools.

Key takeaway: The software stack is arguably the most important factor in Nvidia’s sustained leadership. CUDA is only part of the story. The depth and maturity of Nvidia’s broader AI software suite forms a formidable barrier to entry for new challengers.

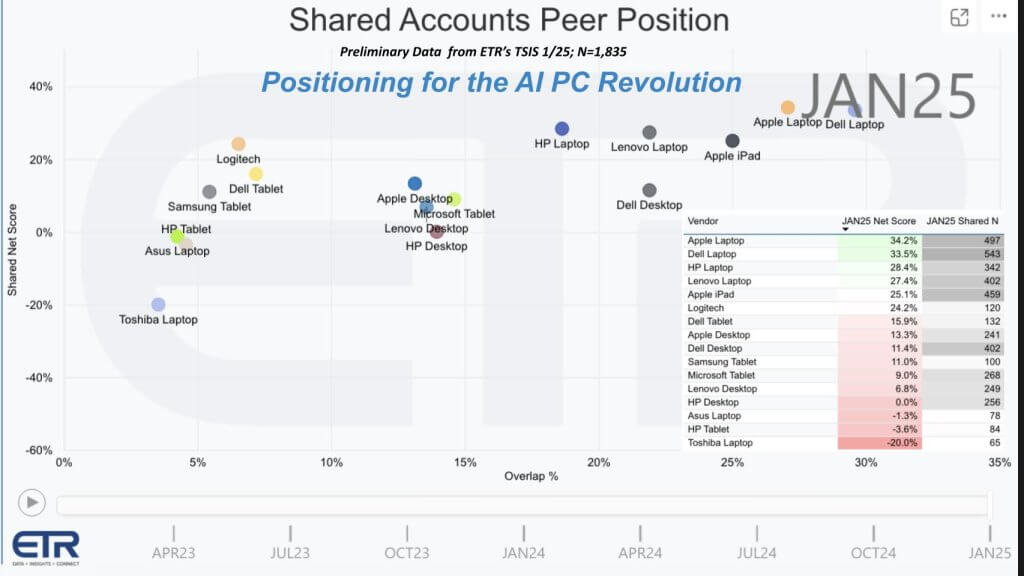

AI PCs: The Next Frontier in Consumer Technology

While much of Nvidia’s focus remains on data center innovation, the rise of AI PCs is a trend worth noting. At CES this year, several vendors unveiled laptops and desktops branded as “AI PCs,” equipped with neural processing units (NPUs) or specialized GPUs for on-device inference. These devices promise to bring AI capabilities directly to consumers, enabling faster and more efficient processing of AI-driven tasks.

ETR Data on Client Devices

As AI continues to permeate every aspect of technology, Nvidia’s software ecosystem remains a critical enabler of innovation. From data centers to consumer devices, the company’s tools and frameworks are driving the next wave of AI advancements, solidifying its position as a leader in the industry.

The data visualized above, sourced from an ETR survey of approximately 1,835 IT decision-makers, highlights the spending momentum and market penetration of leading PC manufacturers. The vertical axis represents Net Score, a measure of spending momentum, while the horizontal axis indicates market overlap or penetration. Dell Technologies leads the pack with 543 accounts, ahead of other major players such as Apple, HP, and Lenovo. This trend underscores the robust demand for innovative computing solutions in the market.
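To make “Net Score” concrete: ETR describes it, roughly, as the percentage of respondents adopting a vendor or increasing spend minus the percentage decreasing spend or replacing the vendor, with flat spenders counted only in the total. The sketch below assumes that definition; the class, function, and response counts are hypothetical and are not ETR data.

```python
from dataclasses import dataclass


@dataclass
class VendorResponses:
    """Survey response counts for one vendor (illustrative numbers only)."""
    adopting: int    # new customers of the vendor
    increasing: int  # spending more than last period
    flat: int        # spending about the same (counts toward the total only)
    decreasing: int  # spending less
    replacing: int   # dropping the vendor

    @property
    def total(self) -> int:
        return self.adopting + self.increasing + self.flat + self.decreasing + self.replacing


def net_score(r: VendorResponses) -> float:
    """Percent positive (adopting + increasing) minus percent negative
    (decreasing + replacing), under the assumed ETR-style definition."""
    positive = r.adopting + r.increasing
    negative = r.decreasing + r.replacing
    return 100.0 * (positive - negative) / r.total


example = VendorResponses(adopting=20, increasing=180, flat=230, decreasing=50, replacing=20)
print(net_score(example))
```

Because the score nets positive against negative responses, a vendor with many accounts can still show modest momentum if a large share of those accounts are cutting back.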

- Dell Technologies Inc.: Dell has introduced AI-powered laptops and is collaborating with silicon partners such as AMD, Intel, and Qualcomm. There’s potential for Nvidia’s technology to be integrated into Dell’s future offerings.

- Apple: Apple has been a pioneer in integrating Neural Processing Units (NPUs) into its M-series chips for several years, enhancing battery life and enabling local AI inference. Its vertically integrated approach continues to set industry standards.

- Others (HP Inc., Lenovo Group Ltd., etc.): These companies are actively testing and releasing AI-focused devices, often equipped with dedicated NPUs or discrete GPUs, signaling a shift toward smarter, more efficient computing.

The Evolving Role of NPUs in PCs

While NPUs are a key component of AI PCs, their potential remains largely untapped due to underdeveloped software ecosystems. However, as optimization improves, these processors are expected to unlock groundbreaking capabilities, such as real-time language translation, advanced image and video processing, enhanced security features, and localized large language model (LLM) inference. These advancements will redefine how users interact with their devices, making AI an integral part of everyday computing.

Nvidia’s Strategic Position in AI PCs

Nvidia, renowned for its GPU expertise, is poised to deliver AI PC solutions that outperform traditional NPUs found in mobile and notebook devices. Despite challenges like power consumption, thermal management, and cost, Nvidia is leveraging salvaged “whole cow” dies to create laptop GPUs with optimized power envelopes. This innovative approach could position Nvidia as a key player in the AI PC market.

While the focus on AI PCs may seem tangential to Nvidia’s data center dominance, it plays a crucial role in driving developer adoption. On-device AI is particularly valuable for productivity tools, specialized workloads, and niche applications, fostering a broader transition to parallel computing architectures.

Market Analysis: Data Center Spending and the Rise of EPC

The survey data reveals a clear trend: data center spending is on the rise, with enterprises prioritizing advanced computing solutions. This shift is driven by the growing demand for AI and machine learning capabilities, which require robust infrastructure. As companies invest in next-generation technologies, the market for efficient, high-performance computing solutions is expected to expand significantly.

This trend aligns with the broader adoption of parallel computing architectures, which are essential for handling complex AI workloads. As the industry evolves, companies like Nvidia are well-positioned to capitalize on this momentum, driving innovation and shaping the future of computing.

The Future of Data Centers: Accelerated Computing and Nvidia’s Dominance

The data center landscape is undergoing a seismic shift, driven by the explosive growth of artificial intelligence (AI) and the demand for accelerated computing. By the early 2030s, the data center market is poised to become a trillion-dollar industry, with advanced accelerators like GPUs and specialized chips taking center stage. Here’s a deep dive into the trends shaping this transformation and Nvidia’s pivotal role in this evolving ecosystem.

The Rise of Accelerated Computing

Accelerated computing, powered by GPUs and specialized hardware, is redefining how data centers operate. Traditional x86-based systems, once the backbone of enterprise IT, are being overshadowed by extreme parallel computing (EPC) solutions designed for AI training, inference, and high-performance analytics.

- In 2020, EPC accounted for just 8% of data center spending. By 2030, this figure is projected to exceed 50%, and by the mid-2030s, advanced accelerators could dominate 80% to 90% of silicon investments.

- The EPC market is growing at a staggering 23% compound annual growth rate (CAGR), far outpacing traditional systems.

Nvidia’s Commanding Position

Nvidia has emerged as a dominant force in the data center space, currently holding an estimated 25% market share. Despite fierce competition from hyperscalers, AMD, and other players, Nvidia is expected to maintain its leadership position, thanks to its tightly integrated hardware-software ecosystem and relentless innovation.

Key takeaway: The anticipated shift toward accelerated compute forms the foundation of our bullish stance on data center growth. We believe extreme parallel computing ushers in a multi-year (or even multi-decade) supercycle for data center infrastructure investments.

Driving Forces Behind the Growth

Several key trends are fueling the rapid expansion of the data center market:

- Generative AI and Large Language Models (LLMs): Innovations like ChatGPT have showcased the transformative potential of accelerated computing in natural language processing, coding, and search applications.

- Enterprise AI Integration: Businesses worldwide are embedding AI into their operations, driving demand for more powerful data center infrastructure.

- Robotics and Digital Twins: Industrial automation and advanced robotics are creating a need for large-scale simulations and real-time inference capabilities.

- Automation ROI: Companies are leveraging AI-driven automation to reduce costs and labor dependencies, yielding immediate returns on investment.

A Trillion-Dollar Market in the Making

The data center market is on track to surpass $1 trillion by 2032, with projections reaching $1.7 trillion by 2035. This growth is underpinned by a 15% CAGR, significantly higher than the single-digit growth rates historically seen in enterprise IT.
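The growth rates cited in this section (a 23% CAGR for EPC and a 15% CAGR for the overall data center market) compound quickly. The minimal sketch below shows the standard compound-growth arithmetic; the starting value is normalized to 1 for illustration and is not a figure from the survey.

```python
def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate implied by moving from `start` to `end`."""
    return (end / start) ** (1 / years) - 1


def project(start: float, rate: float, years: int) -> float:
    """Value of `start` after compounding at `rate` per year for `years` years."""
    return start * (1 + rate) ** years


# Growth multiples at the two rates cited above (starting value normalized to 1).
for rate in (0.15, 0.23):
    print(f"{rate:.0%}: x{project(1.0, rate, 5):.2f} in 5 years, "
          f"x{project(1.0, rate, 10):.2f} in 10 years")
```

At 15% a year a market roughly doubles in five years and quadruples in ten; at 23% it grows nearly eightfold in a decade. The gap between the two rates is what lets EPC’s share of total spending climb over time.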

Challenges and Risks

While the outlook for Nvidia and the data center market is overwhelmingly positive, there are risks to consider. Intense competition, potential missteps in innovation, and the evolving strategies of hyperscalers could impact Nvidia’s dominance. However, the company’s robust ecosystem and first-mover advantage position it well to navigate these challenges.

Conclusion

The data center of the future will be a distributed, parallel processing powerhouse, with GPUs and specialized accelerators at its core. Nvidia’s leadership in this space is undeniable, but the market’s rapid expansion ensures opportunities for a diverse range of players, from hyperscalers to startups. As AI continues to drive demand for accelerated computing, the data center industry is set to enter a transformative supercycle, reshaping the technological landscape for decades to come.

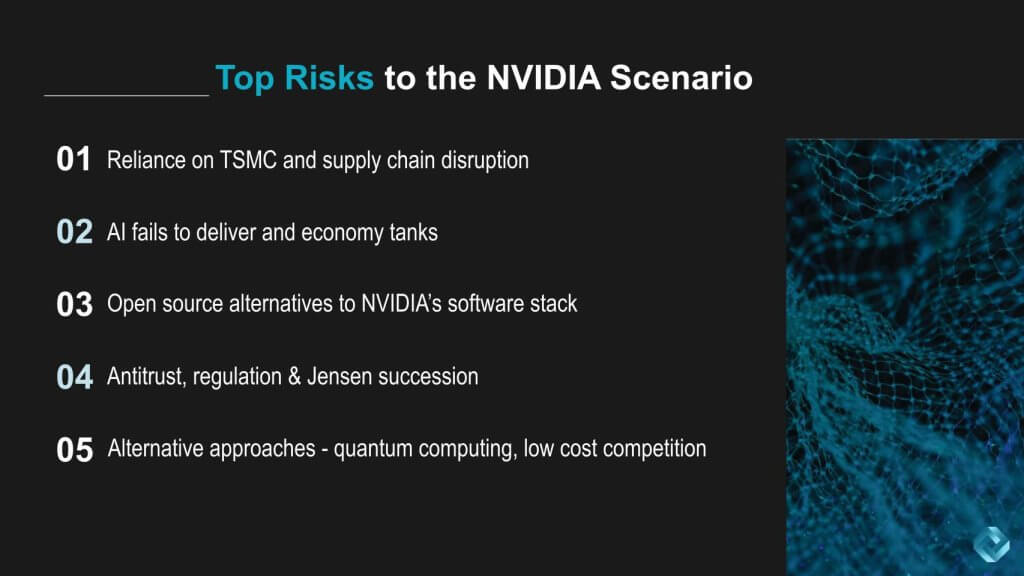

The Challenges Ahead for NVIDIA: Risks in a Booming Market

NVIDIA has cemented its position as a leader in the tech industry, particularly in the realms of AI and parallel computing. However, even as the company continues to thrive, several significant risks loom on the horizon. These challenges could potentially disrupt its trajectory if not addressed proactively.

1. Dependence on TSMC and Supply Chain Vulnerabilities

One of NVIDIA’s most critical vulnerabilities lies in its reliance on Taiwan Semiconductor Manufacturing Company (TSMC) for chip fabrication. The geopolitical tensions between China and Taiwan pose a substantial risk. Any disruption in this relationship could severely impact NVIDIA’s production capabilities, highlighting the fragility of its supply chain.

2. The AI Hype Cycle and Economic Uncertainty

While artificial intelligence has been a driving force behind NVIDIA’s success, there’s a growing concern that the technology may not deliver immediate returns as anticipated. Additionally, a potential economic downturn could lead to reduced spending on high-cost infrastructure, further complicating NVIDIA’s growth prospects.

3. The Rise of Open-Source Alternatives

Open-source frameworks are gaining traction, with numerous communities and vendors working to bypass NVIDIA’s proprietary software stack. If these alternatives mature sufficiently, they could erode NVIDIA’s dominance in developer mindshare, posing a long-term threat to its market position.

4. Regulatory Pressures and Leadership Succession

Governments worldwide are increasingly scrutinizing AI technologies, focusing on ethics and competition policies. Regulatory actions could limit NVIDIA’s ability to bundle hardware and software or pursue acquisitions. Moreover, the company’s leadership is heavily reliant on CEO Jensen Huang, whose strategic vision and industry influence are unparalleled. The lack of a disclosed succession plan adds an element of uncertainty to NVIDIA’s future.

5. Emerging Technologies and Market Disruption

Innovations such as quantum computing, optical computing, and ultra-low-cost AI chips could eventually challenge NVIDIA’s GPU dominance. If these technologies offer superior performance at lower costs and energy consumption, they might disrupt the current market dynamics.

Final Thoughts

Despite these challenges, NVIDIA’s future remains promising. The company’s commitment to relentless innovation in both hardware and software has been the cornerstone of its success. As the tech landscape evolves, NVIDIA must stay vigilant and adaptable to maintain its leadership in this era of extreme parallel computing.

Photo: NVIDIA

Disclaimer: All statements made regarding companies or securities are strictly beliefs, points of view, and opinions held by autonomous analysts and do not represent financial advice.

Mastering Off-page SEO for WordPress: Strategies to Boost Your Rankings

When it comes to optimizing your WordPress website for search engines, most people focus on on-page SEO: tweaking meta tags, improving site speed, and crafting keyword-rich content. But what about the actions you can take outside your website to improve its visibility? This is where off-page SEO comes into play, and it’s just as crucial for driving traffic, building authority, and climbing the search engine rankings.

What Is Off-Page SEO and Why Does It Matter?

Off-page SEO refers to the strategies you implement beyond your website to enhance its credibility and relevance in the eyes of search engines. Think of it as building a network of trust signals that tell Google and other search engines your site is a reliable source of information. These signals include backlinks, social media engagement, and collaborations with other websites or influencers.

As Andy Jassy, CEO of Amazon.com, once said, “TheCUBE is an important partner to the industry. You guys really are a part of our events, and we really appreciate you coming, and I know people appreciate the content you create as well.” This sentiment underscores the value of building relationships and creating content that resonates with your audience, both key components of off-page SEO.

Key Off-Page SEO Strategies for WordPress Websites

Here are some actionable strategies to help you master off-page SEO and elevate your WordPress site’s performance:

1. Build High-Quality Backlinks

Backlinks are one of the most powerful off-page SEO tools. When reputable websites link to your content, it signals to search engines that your site is trustworthy and authoritative. Focus on creating shareable, high-quality content that naturally attracts backlinks. Guest blogging, collaborating with industry influencers, and participating in forums can also help you earn valuable links.

2. Leverage Social Media Engagement

Social media platforms are more than just spaces for sharing memes and cat videos. They’re powerful tools for driving traffic and building brand awareness. Share your content regularly, engage with your audience, and encourage them to share your posts. The more your content circulates, the more visibility your site gains.

3. Collaborate with Industry Experts

Partnering with industry leaders and influencers can significantly boost your site’s credibility. Whether it’s through interviews, joint webinars, or co-authored articles, these collaborations can introduce your site to a broader audience and generate valuable backlinks.

4. Participate in Online Communities

Engaging in forums, Q&A sites, and online communities like Reddit or Quora can help you establish authority in your niche. Provide thoughtful answers, share your expertise, and link back to your site when relevant. This not only drives traffic but also builds trust with potential visitors.

5. Monitor Your Online Reputation

Your online reputation plays a significant role in off-page SEO. Encourage satisfied customers to leave positive reviews on platforms like Google My Business, Yelp, or industry-specific review sites. Address negative feedback promptly and professionally to maintain a positive image.

Why Off-Page SEO Is a Long-Term Investment

Unlike on-page SEO, which can yield relatively fast results, off-page SEO is a long-term strategy. It requires consistent effort, relationship-building, and a commitment to creating value for your audience. However, the rewards (higher rankings, increased traffic, and enhanced credibility) are well worth the investment.

Final Thoughts

Off-page SEO is an essential component of any comprehensive WordPress SEO strategy. By building high-quality backlinks, engaging on social media, collaborating with industry experts, and maintaining a strong online reputation, you can significantly boost your site’s visibility and authority. Remember, SEO is not a one-time task but an ongoing process. Stay consistent, adapt to changes, and always prioritize providing value to your audience.

Ready to take your WordPress SEO to the next level? Start implementing these off-page SEO strategies today and watch your site climb the search engine rankings!


1. Build High-Quality Backlinks

Backlinks are one of the most critical factors in off-page SEO. They act as votes of confidence from other websites, signaling to search engines that your content is valuable and authoritative. To build high-quality backlinks:

- Create Shareable Content: Focus on producing in-depth, original, and engaging content that others naturally want to link to. This could include research studies, infographics, or extensive guides.

- Guest Blogging: Contribute articles to reputable websites in your niche. This not only helps you gain backlinks but also establishes your authority in the industry.

- Outreach Campaigns: Reach out to bloggers, journalists, and influencers to share your content or collaborate on projects. Personalized outreach can lead to valuable backlinks.

2. Leverage Social Media Engagement

Social media platforms are powerful tools for amplifying your content and driving traffic to your website. To maximize your social media impact:

- Share Content Regularly: Post your blog posts, infographics, and videos on platforms like LinkedIn, Twitter, and Facebook to increase visibility.

- Engage with Your Audience: Respond to comments, participate in discussions, and build relationships with followers to foster a loyal community.

- Collaborate with Influencers: Partner with influencers in your niche to promote your content and reach a broader audience.

3. Build Relationships with Industry Leaders

Networking with industry leaders and participating in relevant events can considerably boost your off-page SEO efforts. Consider:

- Attending Conferences and Webinars: Engage with thought leaders and share insights from these events on your website and social media.

- Collaborating on Projects: Work with other businesses or experts on joint ventures, such as co-authored articles or webinars, to gain exposure and backlinks.

- Joining Online Communities: Participate in forums, LinkedIn groups, and other online communities to share your expertise and build connections.

4. Optimize Local SEO

If your business has a physical presence, optimizing for local SEO is essential. This includes:

- Claiming Your Google My Business Listing: Ensure your business information is accurate and up-to-date.

- Encouraging Customer Reviews: Positive reviews on platforms like Google, Yelp, and Facebook can improve your local search rankings.

- Building Local Citations: List your business in local directories and ensure consistency in your name, address, and phone number (NAP) across all platforms.
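Checking NAP consistency by hand gets tedious once a business is listed in many directories. The sketch below is one hypothetical way to automate a first pass: normalize each listing’s name, address, and phone, take the most common version as canonical, and report the directories that differ. The directory labels, sample data, and function names are illustrative assumptions, and the normalization is deliberately simple (it does not treat “St.” and “Street” as equivalent).

```python
import re
from collections import Counter


def normalize_phone(phone: str) -> str:
    """Keep digits only, so '(555) 123-4567' and '555.123.4567' compare equal."""
    return re.sub(r"\D", "", phone)


def normalize_text(value: str) -> str:
    """Lowercase, drop punctuation, and collapse whitespace."""
    value = re.sub(r"[^\w\s]", "", value.lower())
    return " ".join(value.split())


def nap_key(listing: dict[str, str]) -> tuple[str, str, str]:
    return (normalize_text(listing["name"]),
            normalize_text(listing["address"]),
            normalize_phone(listing["phone"]))


def inconsistent_listings(listings: dict[str, dict[str, str]]) -> list[str]:
    """Return the directories whose NAP differs from the most common version."""
    keys = {site: nap_key(data) for site, data in listings.items()}
    canonical = Counter(keys.values()).most_common(1)[0][0]
    return sorted(site for site, key in keys.items() if key != canonical)


listings = {
    "google": {"name": "Acme Bakery", "address": "12 Main St.", "phone": "(555) 123-4567"},
    "yelp": {"name": "ACME Bakery", "address": "12 Main St", "phone": "555-123-4567"},
    "facebook": {"name": "Acme Bakery", "address": "12 Main Street", "phone": "555.123.4567"},
}
print(inconsistent_listings(listings))
```

Here the Facebook listing is flagged because “Street” does not match “St”; whether to treat that as a real inconsistency or add abbreviation rules is a design choice left to the reader.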

5. Monitor and Analyze Your Efforts

Tracking the effectiveness of your off-page SEO strategies is crucial for continuous improvement. Use tools like Google Analytics, Ahrefs, or SEMrush to:

- Analyze Backlink Profiles: Identify which websites are linking to you and assess the quality of those links.

- Track Social Media Metrics: Measure engagement rates, shares, and traffic generated from social media platforms.

- Monitor Brand Mentions: Keep an eye on where and how your brand is being mentioned online to identify opportunities for further engagement.

Conclusion

Off-page SEO is a vital component of any comprehensive SEO strategy, especially for WordPress websites. By building high-quality backlinks, leveraging social media, networking with industry leaders, optimizing for local SEO, and continuously monitoring your efforts, you can significantly enhance your website’s authority and search engine rankings. Remember, off-page SEO is about building trust and relationships with both your audience and search engines. Stay consistent, adaptable, and focused on delivering value, and your WordPress site will thrive in the competitive digital landscape.