Artificial intelligence has transformed the landscape of autonomous systems, from self-driving cars to drones and robotics. However, the computational demands of AI often pose significant challenges. Real-time data processing typically relies on cloud-based solutions, which introduce latency. In scenarios where every millisecond counts, this delay can undermine the effectiveness of even the most advanced AI systems.

Enter TinyML, an approach that brings deep learning capabilities to low-power edge devices. This innovation has unlocked new possibilities for real-time applications on hardware with limited resources. Yet, while TinyML has made significant strides in efficiency, adaptability remains a hurdle. Traditional methods involve large models trained on extensive datasets, which are impractical for edge devices constrained by memory, processing power, and energy limitations.

The Tapo IoT camera used in this study (📷: D. Vyas et al.)

To address these challenges, a team of researchers at VERSES has developed an agent-based system that combines on-device perception with active inference. This approach transcends conventional deep learning, enabling real-time planning in dynamic environments while maintaining a compact model size. The result is a smarter, more adaptable system that performs exceptionally well even on resource-constrained devices.

While deep learning has significantly enhanced sensing capabilities, scaling these models for edge devices has proven difficult. Active sensing, which integrates perception and planning, remains a challenge. Active inference, grounded in probabilistic principles and physics, offers a promising solution. By modeling uncertainty and environmental dynamics, this paradigm allows AI systems to learn continuously and make adaptive decisions. Because it runs on the device rather than in the cloud, it also offers low-latency responses and enhanced data privacy.

An NVIDIA Jetson Orin Nano Developer Kit (📷: NVIDIA)

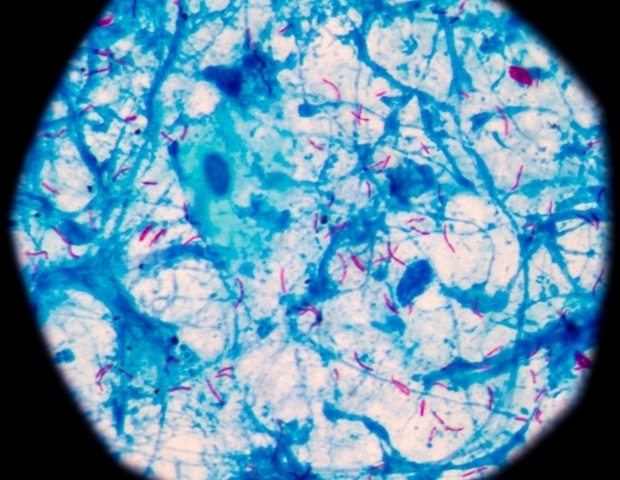

The researchers demonstrated their system using a “saccade agent” deployed on an IoT camera equipped with pan-and-tilt functionality, powered by an NVIDIA Jetson Orin Nano NX. This system integrates object detection with active inference-based planning, allowing the camera to adapt dynamically to its surroundings. The agent strategically controls the camera, optimizing data collection in real time.

Saccading, a process inspired by the human visual system, enables the camera to make rapid, precise movements to focus on areas of interest. This capability, combined with active inference, ensures that the system can efficiently gather critical details while minimizing resource usage. The result is a highly responsive and intelligent IoT camera that operates effectively in dynamic environments.
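As a rough illustration of how perception and planning can be coupled, the sketch below keeps a probability distribution over which region holds the object of interest and picks the next saccade by expected information gain, a simplified stand-in for the epistemic term used in active inference. It is not the researchers' implementation: the function names, the three-region setup, and the detector hit and false-alarm rates are all assumptions made for the example.

```python
import math


def entropy(belief):
    """Shannon entropy (in nats) of a discrete probability distribution."""
    return -sum(p * math.log(p) for p in belief if p > 0)


def update_belief(belief, view, detected, hit=0.9, false_alarm=0.1):
    """Bayes update of the belief over the target's region after one look.

    `hit` and `false_alarm` are assumed detector rates (hypothetical values):
    the chance of a detection when the target is, or is not, in `view`.
    """
    unnormalized = []
    for region, prior in enumerate(belief):
        p_detect = hit if region == view else false_alarm
        likelihood = p_detect if detected else 1.0 - p_detect
        unnormalized.append(likelihood * prior)
    total = sum(unnormalized)
    return [u / total for u in unnormalized]


def expected_info_gain(belief, view, hit=0.9, false_alarm=0.1):
    """Expected drop in entropy from pointing the camera at `view`."""
    p_detect = belief[view] * hit + (1.0 - belief[view]) * false_alarm
    gain = entropy(belief)
    for detected, p_obs in ((True, p_detect), (False, 1.0 - p_detect)):
        if p_obs > 0:
            posterior = update_belief(belief, view, detected, hit, false_alarm)
            gain -= p_obs * entropy(posterior)
    return gain


def choose_saccade(belief, hit=0.9, false_alarm=0.1):
    """Pick the region whose observation is expected to be most informative."""
    return max(
        range(len(belief)),
        key=lambda view: expected_info_gain(belief, view, hit, false_alarm),
    )
```

Under these assumptions, a belief of `[0.7, 0.2, 0.1]` sends the camera to region 0, because that is where a single look is expected to resolve the most uncertainty. A full active inference agent would also weigh preferences over outcomes and model how the scene changes over time.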

How TinyML is Revolutionizing Edge Computing and AI

By Archyde News Editor, January 20, 2025

Artificial intelligence has undeniably transformed industries like autonomous vehicles, drones, and robotics. However, one significant challenge persists: the immense computational demands of AI often force reliance on cloud-based systems, which introduces latency. This delay can be detrimental in critical applications where timing is everything. Enter TinyML, a groundbreaking approach that brings deep learning directly to edge devices, enabling real-time decision-making without the need for constant cloud communication.

To delve deeper into this innovation, we spoke with Dr. Emily Carter, a leading expert in TinyML and edge computing. Here’s what she had to say about the transformative potential of this technology.

The Problem with Cloud-Based AI

Editor: Dr. Carter, thank you for joining us today. Let’s start with the big picture. AI has revolutionized many industries, but cloud reliance introduces latency. How is TinyML addressing this issue?

Dr. Carter: “Absolutely. The issue with cloud-based AI is that it inherently creates a bottleneck. Even with high-speed networks, sending data to the cloud for processing introduces delays. In applications like self-driving cars or medical devices, where milliseconds can make the difference between success and disaster, this latency is unacceptable. TinyML addresses this by bringing deep learning directly to edge devices: small, low-power hardware that processes data locally. This eliminates the need for constant cloud communication, enabling real-time decision-making without compromising performance.”

Challenges in Developing TinyML Models

Editor: That’s fascinating. But developing machine learning models for edge devices must come with its own set of challenges. What are the major hurdles TinyML faces?

Dr. Carter: “One of the biggest challenges is adaptability. Conventional AI models are often large and trained on massive datasets, making them resource-intensive. Edge devices, on the other hand, have limited memory, computational power, and energy. TinyML requires us to rethink how we design these models, optimizing them for efficiency without sacrificing accuracy. Techniques like model pruning, quantization, and transfer learning have been game-changers, but there’s still work to be done. Another challenge is ensuring these models can generalize well across different environments and use cases, which is critical for scalability.”
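To make the quantization step concrete, here is a minimal sketch of affine 8-bit quantization applied to a list of weights. It illustrates the general idea only and does not reproduce any particular framework's API; `quantize_int8` and `dequantize` are hypothetical helper names. Storing each weight as a single byte instead of a 32-bit float cuts weight memory by roughly four times, at the cost of a rounding error of about half the scale per weight.

```python
def quantize_int8(weights):
    """Map float weights to int8 values using an affine (scale + zero point) scheme."""
    lo, hi = min(weights), max(weights)
    # Widen the range to include 0.0 so that zero is represented exactly.
    lo, hi = min(lo, 0.0), max(hi, 0.0)
    # 255 steps span the int8 range [-128, 127]; fall back to 1.0 if all weights are 0.
    scale = (hi - lo) / 255.0 or 1.0
    zero_point = round(-128 - lo / scale)
    quantized = [
        max(-128, min(127, round(w / scale) + zero_point)) for w in weights
    ]
    return quantized, scale, zero_point


def dequantize(quantized, scale, zero_point):
    """Recover approximate float weights from their int8 representation."""
    return [(q - zero_point) * scale for q in quantized]
```

A round trip such as `dequantize(*quantize_int8([-1.0, -0.25, 0.0, 0.5, 2.0]))` returns values close to the originals, which is why quantization often costs little accuracy in practice. Production toolchains typically add calibration data and per-channel scales on top of this basic scheme.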

Real-World Applications of TinyML

Editor: Are there any real-world applications where TinyML is already making a significant impact?

Dr. Carter: “Definitely. Take, for example, wearable health devices. TinyML allows these devices to monitor vital signs like heart rate or blood oxygen levels in real time and detect anomalies without needing to send data to the cloud. This is not only faster but also addresses privacy concerns. In agriculture, TinyML-powered sensors can analyze soil conditions or predict crop diseases, enabling farmers to make data-driven decisions on the spot. Even in industrial settings, this technology is proving invaluable.”
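On-device anomaly detection of this kind can be surprisingly small. The sketch below is an assumption-laden example, not a description of any real wearable: it tracks a running mean and variance with Welford's algorithm, so it needs only three numbers of state, and flags readings that fall more than a chosen number of standard deviations from the mean. The class name, threshold, and warm-up length are hypothetical.

```python
import math


class StreamingAnomalyDetector:
    """Constant-memory z-score detector for a stream of sensor readings."""

    def __init__(self, threshold=3.0, warmup=10):
        self.threshold = threshold      # allowed deviation, in standard deviations
        self.warmup = max(2, warmup)    # readings to absorb before flagging anything
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0                   # running sum of squared deviations

    def update(self, x):
        """Return True if `x` looks anomalous; otherwise absorb it and return False."""
        if self.n >= self.warmup:
            std = math.sqrt(self.m2 / (self.n - 1))
            if std > 0 and abs(x - self.mean) > self.threshold * std:
                # Anomalous readings are not absorbed, so they cannot skew the baseline.
                return True
        # Welford's update of the running mean and squared deviations.
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)
        return False
```

Fed ten resting heart-rate readings in the low 70s followed by a reading of 140, the detector stays quiet during warm-up and then flags the spike, all without any data leaving the device. A learned model would replace the z-score rule in a real TinyML deployment, but the memory profile is the point of the example.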

The Future of TinyML

TinyML is more than just a technological advancement; it’s a paradigm shift in how we think about AI and computing. By moving intelligence to the edge, it opens up possibilities for faster, more efficient, and privacy-conscious applications across industries. As Dr. Carter aptly puts it, “This research underscores the potential of edge-based adaptive systems in applications that demand both efficiency and accuracy. From aerial search-and-rescue missions to sports event tracking and smart city surveillance, the possibilities are vast.”

With experts like Dr. Carter leading the charge, TinyML is poised to redefine the future of AI, making it more accessible, efficient, and impactful than ever before.

The Transformative Power of TinyML: Revolutionizing AI and Data Privacy

In the ever-evolving world of technology, TinyML is emerging as a groundbreaking innovation. This approach to machine learning is not only reshaping industries but also addressing critical concerns like data privacy and accessibility. From predictive maintenance to healthcare, TinyML is proving to be a game-changer.

Predictive Maintenance: A Game-Changer for Industries

One of the most exciting applications of TinyML is in predictive maintenance. By embedding sensors in machinery, potential issues can be detected long before they escalate into costly failures. This proactive approach minimizes downtime and optimizes operational efficiency, making it a valuable tool for industries ranging from manufacturing to transportation.
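One simple way such early warning could work is sketched below, purely as an illustration: each window of vibration samples is reduced to its RMS amplitude, the result is smoothed with an exponentially weighted moving average, and an alert is raised only when the smoothed level stays well above a known healthy baseline. The class name, smoothing factor, and alert ratio are assumptions chosen for the example rather than values from any real deployment.

```python
import math


def rms(samples):
    """Root-mean-square amplitude of one window of vibration samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


class WearMonitor:
    """Flags sustained rises in vibration relative to a healthy baseline."""

    def __init__(self, baseline_rms, alpha=0.2, ratio=1.5):
        self.baseline = baseline_rms  # RMS level measured on a healthy machine
        self.alpha = alpha            # smoothing factor: higher reacts faster
        self.ratio = ratio            # alert when level exceeds ratio * baseline
        self.level = baseline_rms     # smoothed RMS, starts at the baseline

    def update(self, window):
        """Fold one window into the smoothed level; return True if it warrants an alert."""
        self.level = self.alpha * rms(window) + (1.0 - self.alpha) * self.level
        return self.level > self.ratio * self.baseline
```

With these settings a single noisy window does not trigger an alert, but two consecutive windows at three times the baseline amplitude do. That trade-off between responsiveness and false alarms is controlled by `alpha` and `ratio`.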

Enhancing Data Security with Local Processing

In an era where data privacy is a top priority, TinyML offers a distinctive solution. Unlike traditional systems that rely on cloud-based processing, TinyML handles data locally on the device itself. This significantly reduces the risk of data breaches or unauthorized access. Dr. Carter highlights this advantage: “TinyML is a game-changer for privacy as it processes data locally. Instead of sending sensitive data to the cloud, everything is handled on the device itself.”

This approach is especially impactful in sectors like healthcare, where patient data can remain securely stored on wearable devices. “In healthcare, patient data can stay on the wearable device, ensuring confidentiality while still providing actionable insights,” Dr. Carter explains. This local processing model aligns seamlessly with increasing regulatory requirements and consumer demands for data protection.

The Future of TinyML: Democratizing AI

As hardware technology advances (think more powerful microcontrollers and energy-efficient chips), the potential for TinyML continues to grow. Dr. Carter is especially enthusiastic about its role in democratizing AI: “The potential is immense. As hardware continues to improve, we’ll see TinyML integrated into even more applications. I’m especially excited about its role in democratizing AI.”

By enabling sophisticated machine learning models to run on affordable, low-power devices, TinyML is making AI accessible to underserved communities and industries. Dr. Carter envisions a future where “smart farming solutions for smallholder farmers or AI-powered diagnostic tools in remote clinics” become a reality. “The possibilities are endless,” she adds.

A Paradigm Shift in AI

TinyML is more than just a technological advancement; it represents a fundamental shift in how we approach artificial intelligence. As Dr. Carter puts it, “It’s an exciting time to be in this field, and I’m looking forward to seeing how TinyML continues to evolve and transform industries.” This innovation is not only expanding the horizons of AI but also addressing some of the most pressing challenges of our time, from data security to equitable access to technology.

The Role of Active Inference in TinyML

Editor: You’ve been part of groundbreaking research combining active inference with TinyML. Can you explain how this approach is different from traditional deep learning?

Dr. Carter: “Certainly. Traditional deep learning relies on large, pre-trained models that can be inflexible and resource-intensive. Active inference, on the other hand, is a probabilistic approach that integrates perception and planning in real time. It allows AI systems to model uncertainty and environmental dynamics, making them more adaptable and efficient. For example, in our research, we used a ‘saccade agent’ on an IoT camera to mimic the human visual system’s rapid movements, enabling it to focus on areas of interest dynamically. This approach ensures low latency and enhances data privacy, as decisions are made on-device without relying on the cloud.”

The Future of TinyML

Editor: Where do you see TinyML heading in the next five to ten years?

Dr. Carter: “The potential is immense. As hardware continues to improve and algorithms become more efficient, TinyML will become even more accessible and impactful. I foresee it being integrated into smart cities, where it can optimize traffic flow, monitor air quality, and enhance public safety. It will also play a critical role in disaster response, enabling real-time data analysis in remote or resource-constrained areas. Ultimately, TinyML is more than just a technological advancement; it’s a paradigm shift in how we think about AI and computing. By moving intelligence to the edge, we’re creating a future where AI is faster, more efficient, and more privacy-conscious.”
