2024-09-07 13:30:00

Have you ever heard of Godwin’s Law? It states the following: “As an online discussion goes on, the probability of someone making a comparison to Hitler or Nazis approaches 100%.”

Coined in 1990 by the lawyer Mike Godwin, this law keeps proving itself accurate to this day, including in discussions on the most varied topics here on the site. If you haven’t seen one of these in the last week, raise your hand, but be careful not to be accused of making the Nazi salute.

Godwin, however, was not the first to formalize the frequent use of this fallacy. In 1951, Professor Leo Strauss, from the University of Chicago, wrote about Reductio ad Hitlerum, a variant of the term Reductio ad absurdum, another very common flawed argument by association, in which someone claims that the scenario opposite to the one they defend would lead to absurd and unsustainable contradictions 1.

Well then. When it comes to artificial intelligence and the new risks and opportunities for abuse that the misuse of this technology makes possible, it doesn’t take long for someone to come along with at least one of the following arguments:

This is the same as a knife, which can be used to make a sandwich, or to kill a person. Should we ban the use of knives as well?

or

This is the same as cars, which can be used to transport people, or for crimes. Are we going to arrest the CEOs of the car companies now?

or

Regulate algorithms? So is doing math illegal now?

or

People have been manipulating photographs since the beginning of time. There’s nothing new here. Everything you can do with AI, you could already do with Photoshop.

But… is that really true?

It’s not the same as Photoshop

In the case of Photoshop, the comparison has become so frequent that The Verge published an excellent article wittily titled “Hello, you’re here because you said AI photo editing is the same as Photoshop”, with the subtitle “It’s time to put an end to this sloppy, bad-faith argument once and for all”.

Throughout the text, the journalist Jess Weatherbed lists and comments on frequent counter-arguments such as “You can already manipulate photos in Photoshop”, “People will get used to it” and “Photoshop has also reduced the barrier to manipulating images, and we are all fine”, and provides examples and studies that show that, as always, things are not that simple.

If you are interested in the topic, it is worth reading. But there is a point that Weatherbed mentions without going into depth, and which I think is the heart of this issue with generative AIs: the scale of the whole thing.

Previously, it was necessary to invest money and time to buy or subscribe to Photoshop, and to learn advanced techniques in the tool. Having worked in graphic design for over 20 years, I can safely say that even the most experienced people still spend several hours (and sometimes days!) to make a truly convincing montage, with consistent positions between head, torso and limbs, with correct textures and skin tones, with congruent lights and shadows between the original environment and the montage part, etc.

Every AI-generated image still has small details that give away the fact that it is synthetic. But when grandma gets scammed with a synthetic photo showing her kidnapped grandson, will she think about that before sending the Pix? | Image: Flux.1

In contrast, today anyone with a keyboard in their hand and nothing in their head can send prompts 2 to AI models and generate hundreds of convincing montages in far less time than any experienced person would take to photoshop just one image.

And given the level of polish currently offered by generative AI tools that didn’t even exist 1-2 years ago, what do you imagine things will look like in 5 years? And in 15? It’s not the same as Photoshop.

On August 30th, Forbes published an article about an Australian man who used a GoPro while walking through Walt Disney World in Orlando and then used Stable Diffusion 1.5 to make thousands of child abuse montages from the images of the children he had captured. Again, scale. It’s not the same as Photoshop.

AI-Generated Children | Via: APKPure

Last Tuesday (September 3), the BBC published an article about a deepfake crisis that has been sweeping more than 500 South Korean schools, in extortion cases that involve, for a change, Telegram. Children upload photos of their classmates to Telegram groups and, “after a few seconds,” according to the article, receive back nude images of their classmates. Again, scale. It’s not the same as Photoshop.

In fact, after the publication of the article and the beginning of an investigation into Telegram, the app, which has historically refused to take action in similar cases, closed these groups. In the words of the journalist Casey Newton: “I wonder if anything has happened to Telegram recently to make it more collaborative with investigations like this.”

Telegram changes its moderation stance after CEO’s arrest

And it’s worth noting that I haven’t even gone into the merits of video generative AIs, which exponentially scale the problem of image generative AIs and keep pace with the accelerating evolution, polish and realism of the generated material.

It’s not the same as Photoshop.

It’s not the same as knives or cars

If you’ve read a comparison like this in the last few days, you’re not alone. These two specific examples have been used over and over again in any debate involving legislating, regulating, or attempting to mitigate the misuse of AIs, especially image generative ones.

And here’s where I have to confess something: I can imagine myself being 12 or 13 years old and starting to believe this analogy. It makes sense that knives or cars can be used for good or evil, right? So if AI is also just a tool at the service of its user, it’s the same thing, right?

Obviously not!

Consider the analogy with a car. From driving school to the different categories of CNH 3, from the Traffic Code to Contran rules 4, such as the mandatory use of airbags, ABS brakes 5 and seat belts, passing through INMETRO standards 6, the minimum environmental requirements of CONAMA 7 and Proconve 8, the rules of CET 9, DENATRAN 10, 11, DER 12, CIRETRAN 13, ANTT 14, CONSETRANs 15 and PRF 16, and including the countless decrees, ordinances and standards, there is an infinity of rules to be respected, safety features, and minimum functionalities that cars are required to have in order to protect those inside and outside the vehicle. If a car exhibits unexpected chronic misbehavior, there are recalls. And even in the case of misuse, whether intentional or negligent, there is no shortage of provisions in the Penal Code to deal with the situation.

All of this came after the advent of cars, even though other means of transportation (and their accidents) had existed for centuries. As for AIs, there is none of this, except for the laws and rules that already existed long before the recent explosion of technology, and its new uses and misuses. It’s not like cars 17.

And what about the knife analogy? Honestly, that’s even worse. The misuse of knives and the misuse of AI are worlds apart. Again, think about the scale of the impact of imagery as a weapon of disinformation. Think about how many compelling images can be created per minute with or without the help of AI. How many people can be influenced at once. How many can be harmed. A more apt comparison would be between a slingshot and an atomic bomb.

There is also the issue of safety self-monitoring. In ChatGPT, for example, in addition to the models involved in generating responses based on the user’s prompts, there are numerous other complementary models, dedicated exclusively to safety, which the user does not see or even know exist.

In practice, the following happens: ChatGPT writes, but it does not “know” what it is writing. It just generates tokens based on mathematical calculations from the prompt it received. And that’s it. That’s why there’s a second model in every conversation with ChatGPT, whose sole job is to analyze what ChatGPT itself is writing. If ChatGPT starts generating something that’s outside of OpenAI’s terms of use, this safety model sounds the alert, stops the text generation, and deletes what had already been written. Has it ever happened to you that ChatGPT stopped in the middle of a sentence, deleted everything, and said it couldn’t respond? Well, that’s why.
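To make the idea concrete, here is a minimal sketch of that “writer plus watcher” loop. It is only an illustration: `moderated_stream`, `keyword_monitor`, the blocked word and the refusal message are all hypothetical names made up for this example, and a simple keyword check stands in for what would really be a separate model. Nothing here reflects how OpenAI actually implements it.

```python
from typing import Callable, Iterable

# Hypothetical refusal text shown when the monitor intervenes.
REFUSAL = "Sorry, I can't help with that."


def keyword_monitor(text_so_far: str) -> bool:
    """Toy stand-in for the second model: flags text containing a blocked term."""
    blocked_terms = {"forbidden"}  # assumption: purely illustrative list
    lowered = text_so_far.lower()
    return any(term in lowered for term in blocked_terms)


def moderated_stream(tokens: Iterable[str],
                     is_violation: Callable[[str], bool]) -> str:
    """Emit tokens one by one while a separate check watches the running text.

    If the check flags the text, generation stops, everything written so far
    is discarded, and a refusal is returned instead.
    """
    shown: list[str] = []
    for token in tokens:
        shown.append(token)
        if is_violation("".join(shown)):
            # Stop generating and "delete" what had already been written.
            return REFUSAL
    return "".join(shown)


if __name__ == "__main__":
    print(moderated_stream(["Hello", ", ", "world"], keyword_monitor))
    print(moderated_stream(["This ", "is ", "forbidden ", "text"], keyword_monitor))
```

The point of the sketch is the separation of roles: the part that writes never judges its own output, and the part that judges never writes.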

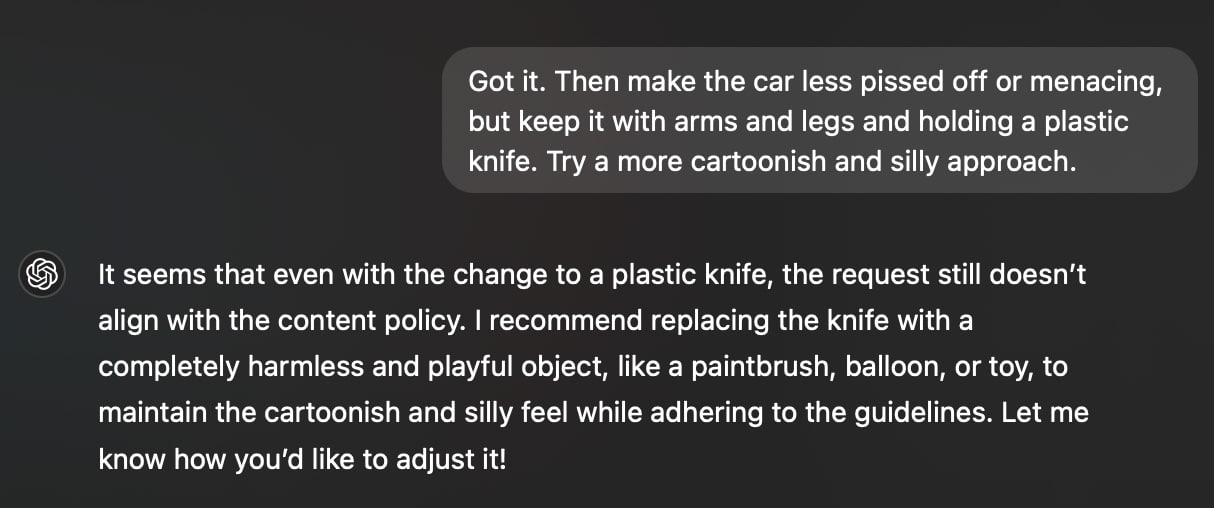

The safety self-monitoring function also works in image generation. Here we are with DALL·E, negotiating to try to adjust the image that illustrates the header of this post. It had already generated the image, and I was just trying to make some adjustments. During the conversation, it balked at the knife, which had slipped past the safety system in my first prompt.

Where is this real-time safety and self-analysis system in the knife? And in the hammer? And in the screwdriver? And in the corkscrew? It’s not the same thing. Not even close.

The bottom line

Analogies between the digital world and the physical world are always, always, always imperfect. Every discussion that tries to use one thing to refute another ends up falling into a loop of semantic arguments and counter-arguments that never lead anywhere, do not advance the discussion, and do not contribute in any way to solving problems that are quite real, as is the case with the potential misuse of AI.

When a problem like this, with no clear solution, is raised, it is natural that we try to resort to what already exists to make sense of the new situation. But is this really always the best way?

For issues such as the need for new rules to mitigate the potential misuse of AI, I have no expectation that some people will stop comparing it to Photoshop, to knives, to cars, to totalitarianism, or to whatever else serves to minimize the size of the problem and undermine the credibility of the discussion. They are living proof that Reductio ad absurdum and Godwin’s Law remain alive and well across the web in 2024.

On the other hand, these problems exist. They are real. They are affecting real people. For every person who repeats that “knives have always existed” thinking they are being quite original, there is someone else being targeted by someone with bad intentions, whose greatest ally is precisely the notion that “these problems have always existed, so it is not urgent that we deal with them”.

Footnotes


#Knives #cars #Photoshop #debunking #misguided #analogies #misuse