2024-05-28 08:49:26

In June 2022, the Canadian unicorn Cohere launched “Cohere For AI”, a non-profit research lab and community dedicated to contributing to fundamental research in open source machine learning. Cohere For AI recently unveiled Aya 23, a new family of large multilingual language models. The weights of the two versions of Aya 23, which have 8 billion and 35 billion parameters, are available on Hugging Face.

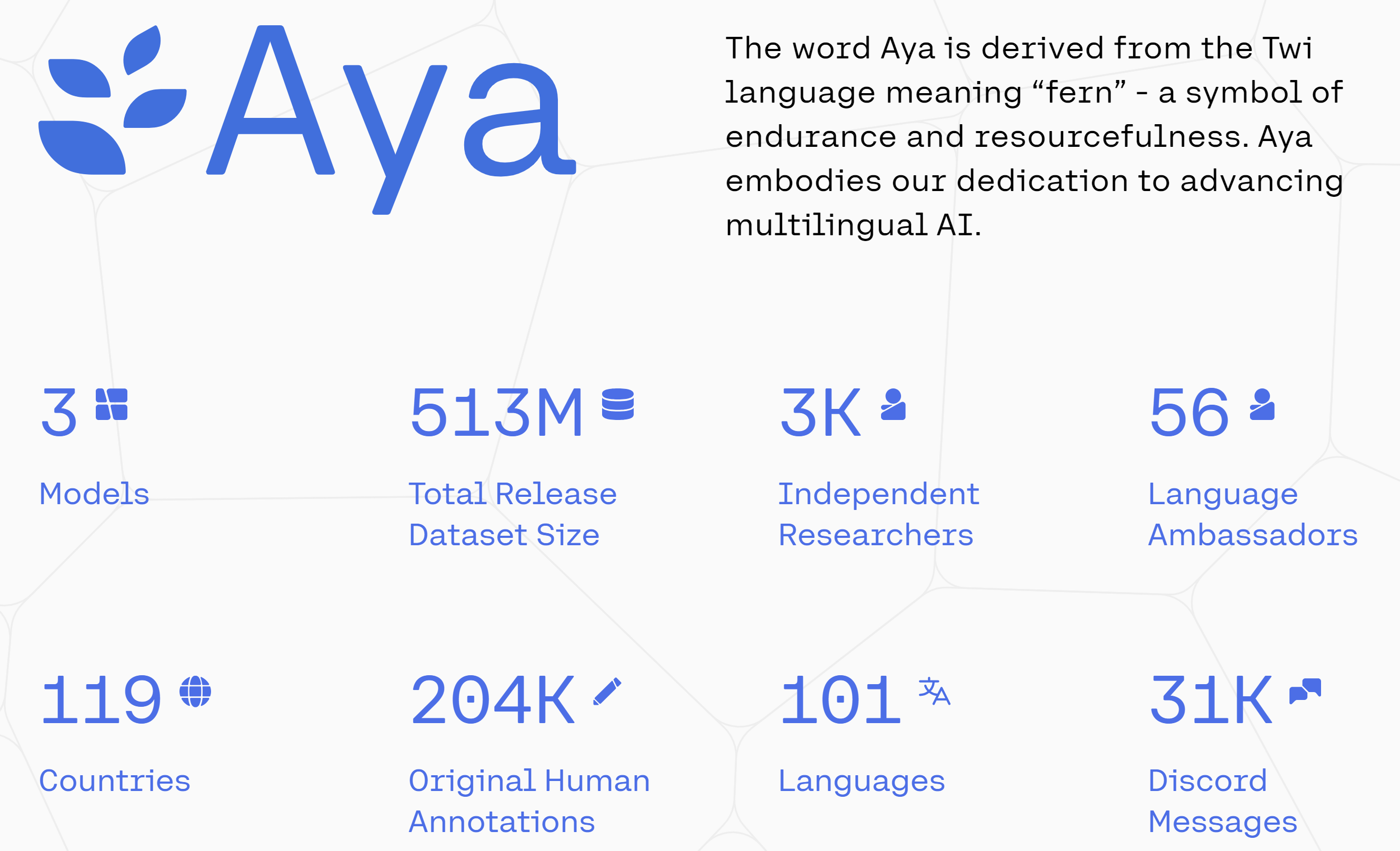

The development of the Aya 23 models is based on the Aya dataset, an open source collection of instruction data comprising 513 million prompts and completions in 114 languages. This project, launched by Cohere to “help support underrepresented languages”, brought together 3,000 contributors from around the world, created the largest multilingual dataset to date and developed the Aya 101 model, released as open source last February.

While Aya 101 covered 101 languages, Cohere moves from breadth to depth with Aya 23, which combines a high-performance pre-trained model with the Aya collection to deliver strong performance in 23 languages (Arabic, Chinese (Simplified and Traditional), Czech, Dutch, English, French, German, Greek, Hebrew, Hindi, Indonesian, Italian, Japanese, Korean, Persian, Polish, Portuguese, Romanian, Russian, Spanish, Turkish, Ukrainian and Vietnamese), thus reaching nearly half of the world’s population.

The Aya 23 model family uses a decoder-only transformer architecture and builds on Cohere’s flagship Command models, with Aya-23-35B based on a refined version of Command R.

While Aya-23-8B is designed to offer an optimal balance between performance and resource efficiency, Aya-23-35B is intended for more complex use cases and higher performance needs.
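To give a rough sense of the resource gap between the two sizes, here is a minimal sketch that estimates the memory needed for the weights alone as parameters times bytes per parameter. The parameter counts come from the announcement; the precisions (float16, int8, int4) and the helper name `weight_memory_gb` are illustrative assumptions, and real usage also depends on activations, the KV cache and runtime overhead.

```python
# Rough weight-memory estimate: parameters x bytes per parameter.
# Parameter counts are from the article; the precisions are illustrative assumptions.
BYTES_PER_PARAM = {"float16": 2.0, "int8": 1.0, "int4": 0.5}
MODELS = {"Aya-23-8B": 8e9, "Aya-23-35B": 35e9}


def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


for name, params in MODELS.items():
    for precision, width in BYTES_PER_PARAM.items():
        print(f"{name} @ {precision}: ~{weight_memory_gb(params, width):.1f} GB")
```

Under these assumptions, the 8B model's weights fit in about 16 GB at float16, while the 35B model needs about 70 GB at the same precision.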

The performance of the Aya 23 models

Cohere For AI compared the performance of Aya 23 with massively multilingual open source models such as Aya-101 and with widely used open weight models. It outperforms widely used models such as Gemma, Mistral 7B Instruct and Mixtral on a range of discriminative and generative tasks.

The 35B Aya 23 achieved the best results across all benchmarks for the languages covered, while the 8B Aya 23 demonstrated best-in-class multilingual performance.
