Nvidia Unveils Project Digits: A Mini Supercomputer for AI Enthusiasts

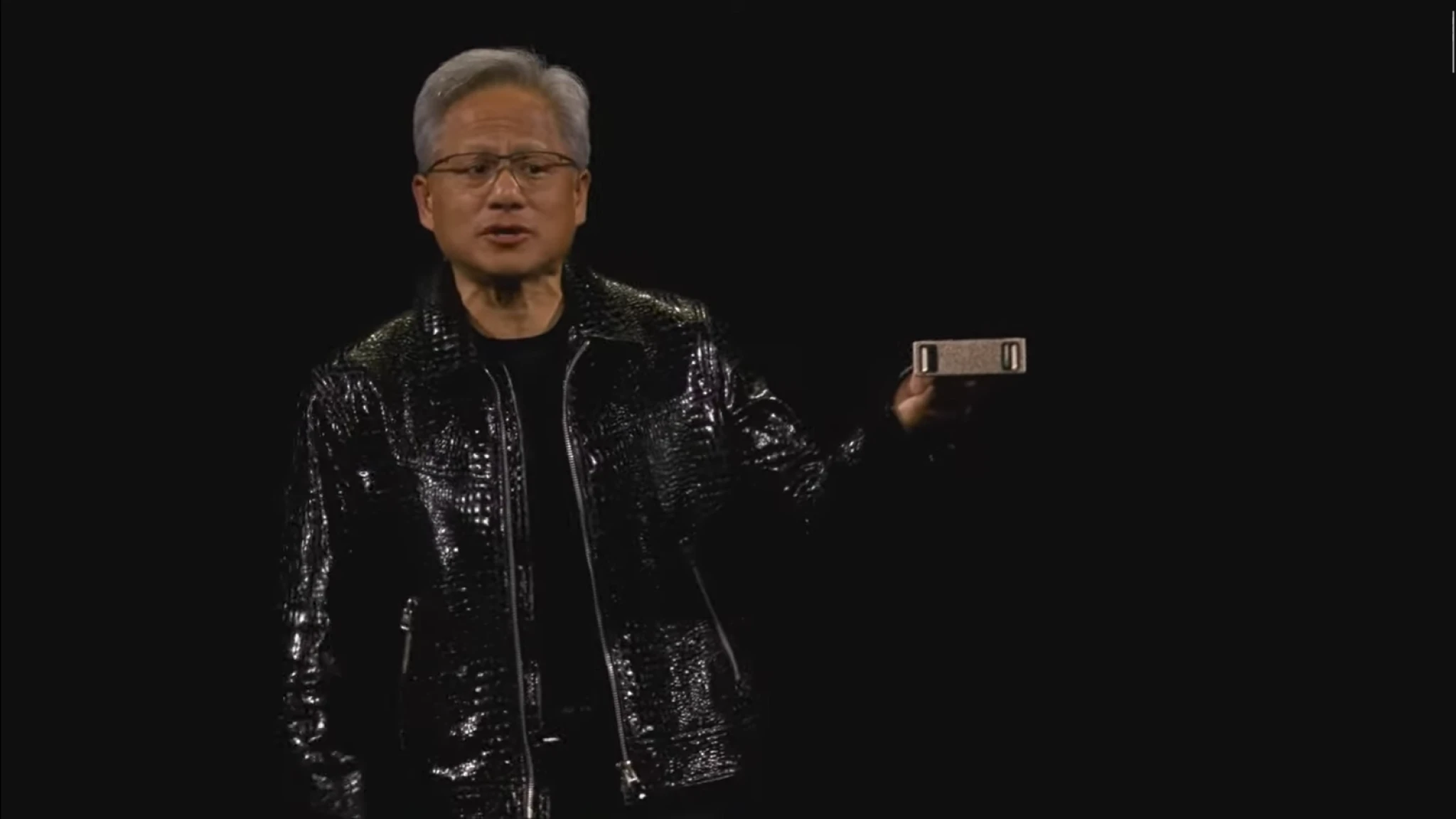

Jensen Huang, CEO of Nvidia, continues to dazzle the tech world with his visionary presentations. At CES 2025, he unveiled a revolutionary product that brings the power of supercomputing to the desktop: Nvidia Project Digits. This compact device promises to democratize access to cutting-edge AI technology, opening up new possibilities for developers, researchers, and AI enthusiasts alike.

Imagine a world where you can train and experiment with advanced AI models directly on your desk. Nvidia Project Digits makes this a reality. This compact device, resembling a modern Mac mini, packs the punch of a supercomputer thanks to its groundbreaking GB10 Grace Blackwell superchip. The chip offers up to a petaflop of AI computing power, enabling users to run and prototype AI models with up to 200 billion parameters.

But the power doesn’t stop there. Digits also boasts 128 GB of unified memory and up to 4 TB of fast SSD storage, ensuring smooth and efficient operation even for demanding AI workloads.

Accessible AI Powerhouse

While Digits isn’t your typical desktop computer, it’s designed with accessibility in mind. Priced at $3,000, it’s far more affordable than traditional supercomputers, though it will initially be available only through “high-end partners.” Huang emphasizes that Digits is intended for developers, researchers, analysts, data scientists, and AI students who need a powerful platform for their work.

Running on Nvidia’s Linux-based DGX OS, Digits offers a robust environment for AI development. It integrates seamlessly with existing Windows and Mac computers, so users can keep working with familiar tools and workflows.

For those who need even more processing power, two Digits computers can be connected to function as a single, more powerful unit, capable of handling AI models with up to 405 billion parameters.
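The parameter counts above line up with the memory figures under a simple back-of-the-envelope estimate. The sketch below computes how much memory the model weights alone would occupy. It assumes 4-bit (half-byte) weights, a precision the article does not state, and it ignores activations, caches, and operating-system overhead. It also assumes that two linked units simply pool their 128 GB each.

```python
# Rough estimate of the memory needed just to hold model weights.
# Assumptions (not from the article): 4-bit (0.5-byte) weights by default,
# decimal gigabytes, and no allowance for activations, caches, or OS overhead.

GB = 10**9  # bytes per decimal gigabyte


def weight_memory_gb(num_params: float, bits_per_param: int = 4) -> float:
    """Return the memory in GB needed to store num_params weights."""
    return num_params * bits_per_param / 8 / GB


def fits(num_params: float, memory_gb: float, bits_per_param: int = 4) -> bool:
    """True if the weights alone fit into memory_gb of memory."""
    return weight_memory_gb(num_params, bits_per_param) <= memory_gb


SINGLE_UNIT_GB = 128
DUAL_UNIT_GB = 2 * SINGLE_UNIT_GB  # assumption: two linked units pool their memory

for label, params, memory in [
    ("one unit, 200B params", 200e9, SINGLE_UNIT_GB),
    ("two units, 405B params", 405e9, DUAL_UNIT_GB),
]:
    need = weight_memory_gb(params)
    print(f"{label}: ~{need:.1f} GB of weights vs {memory} GB available, fits={fits(params, memory)}")
```

At 16 bits per parameter, the same 200-billion-parameter model would need about 400 GB for its weights alone. The headline figures therefore only make sense with aggressive low-precision formats.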

A New Era of AI Development

Nvidia Project Digits marks a meaningful step forward in making AI technology more accessible. By putting supercomputing power within reach of a wider audience, Nvidia is empowering a new generation of innovators and researchers to push the boundaries of artificial intelligence. This could lead to breakthroughs in various fields, from healthcare and scientific research to robotics and entertainment.

While the future of AI is still unfolding, projects like Digits show tremendous promise for accelerating progress and unlocking the transformative potential of artificial intelligence.

Nvidia Unleashes a New Generation of RTX GPUs Powering AI and Gaming

Nvidia CEO Jensen Huang kicked off CES 2025 with a bang, unveiling the next generation of RTX GPUs, built on the architecture codenamed “Blackwell” and promising a revolution in both gaming and AI development.

Huang highlighted the flagship RTX 5090, showcasing its advances in AI-powered rendering, including neural shaders. While visually similar to its predecessor, the RTX 5090 packs a staggering 92 billion transistors and boasts performance figures that seem almost alien: 4,000 AI TOPS, 380 ray-tracing TFLOPS, and 1.8 TB/s of memory bandwidth. The company claims this translates to double the performance of the previous-generation RTX 4090.

To demonstrate the leap in power, Huang presented a captivating video game graphics demo featuring complex textures and full ray tracing. The results were simply stunning.

However, this immense power comes at a premium. The GeForce RTX 5090, equipped with 32 GB of GDDR7 memory on a 512-bit bus and rated at 575 watts, will be available in January for $2,000, a $400 increase over the RTX 4090’s launch price. For those seeking portability, a laptop version will follow in March, with prices starting under $3,000.

The lower-tier models come at more attractive price points. The RTX 5080 will retail for $999, while the RTX 5070 Ti and RTX 5070 will be priced at $749 and $549, respectively. Interestingly, the RTX 5080 is $200 cheaper than the RTX 4080 was at launch, and the RTX 5070 Ti and RTX 5070 are each $50 cheaper than their predecessors. The entry-level RTX 5060 Ti and RTX 5060 were not announced, suggesting a later release.

Nvidia’s aggressive pricing throws down the gauntlet to competitors AMD and especially Intel, which targets the low-end and mid-range graphics market with an emphasis on affordability. The move suggests that Nvidia, already dominant in the market, aims to further solidify its position and potentially squeeze out rivals.
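The generation-over-generation price comparisons above can be checked with a few lines of arithmetic. This sketch uses launch list prices in US dollars. The RTX 40 series figures (RTX 4090 at $1,599, RTX 4080 at $1,199, RTX 4070 Ti at $799, RTX 4070 at $599) and the exact RTX 5090 price of $1,999, which the article rounds to $2,000, are added here and do not come from the article.

```python
# Launch list prices in US dollars.
# new model -> (new price, predecessor, predecessor's launch price)
# The RTX 40 prices and the exact $1,999 RTX 5090 price are supplied here,
# not taken from the article (which rounds the RTX 5090 to $2,000).
launch_prices = {
    "RTX 5090": (1999, "RTX 4090", 1599),
    "RTX 5080": (999, "RTX 4080", 1199),
    "RTX 5070 Ti": (749, "RTX 4070 Ti", 799),
    "RTX 5070": (549, "RTX 4070", 599),
}


def price_change(model: str) -> int:
    """Launch-price difference versus the predecessor (positive = more expensive)."""
    new_price, _, old_price = launch_prices[model]
    return new_price - old_price


for model, (new_price, predecessor, old_price) in launch_prices.items():
    print(f"{model} ${new_price} vs {predecessor} ${old_price}: {price_change(model):+d} USD")
```

Only the flagship got more expensive. Every other announced tier launched below its predecessor's price.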

A New Era in AI Training: Nvidia Cosmos

Huang’s keynote went beyond gaming, delving into Nvidia’s ambitious plans for AI development. He introduced Nvidia Cosmos, a collection of foundation models designed to generate synthetic training data in the form of photorealistic videos. These videos can then be used to train AI models for robots and self-driving cars.

The key benefit of Cosmos lies in its potential to dramatically reduce the costs associated with training AI models using real-world data. Nvidia plans to release Cosmos as open source, following a similar approach taken by Meta with its Llama language models. This move promises to democratize access to powerful AI training tools and accelerate innovation in the field.

Interview with Dr. Emily Carter, AI Researcher and Lead Developer at InnovateAI Labs, on NVIDIA Project Digits

By Archyde News Editor

Archyde: Thank you for joining us today, Dr. Carter. NVIDIA’s Project Digits has been making waves in the tech world since its unveiling at CES 2025. As a leading AI researcher, what are your thoughts on this groundbreaking device?

Dr. Emily Carter: Thank you for having me. Project Digits is truly a game-changer. For years, the barrier to entry for high-performance AI development has been the cost and complexity of supercomputing infrastructure. NVIDIA has managed to condense that power into a compact, desktop-sized device. It’s a monumental step toward democratizing AI research and development.

Archyde: The device is powered by the GB10 Grace Blackwell superchip, which offers petaflop-level computing power. How significant is this for AI researchers like yourself?

Dr. Carter: The GB10 chip is a marvel of engineering. Petaflop-level computing in a device this size is unprecedented. For context, training AI models with billions of parameters typically requires massive data centers. With Project Digits, we can now prototype and experiment with models containing up to 200 billion parameters right on our desks. This not only accelerates the development cycle but also reduces costs significantly. It’s a win-win for researchers and developers.

Archyde: The device also features 128 GB of memory and up to 4 TB of SSD storage. How do these specs enhance its capabilities?

Dr. Carter: Memory and storage are critical for AI workloads. The 128 GB of memory ensures that even the most complex models can be handled efficiently, while the 4 TB SSD storage allows for rapid data access and processing. This combination is essential for tasks like natural language processing, computer vision, and generative AI, where large datasets and high-speed computations are the norm.

Archyde: Project Digits is priced at $3,000, which is significantly more affordable than traditional supercomputers. Do you think this price point will make it accessible to a broader audience?

Dr. Carter: Absolutely. While $3,000 is still a substantial investment, it’s a fraction of the cost of traditional supercomputing setups. This makes it accessible not just to large corporations and research institutions but also to smaller startups, independent developers, and even students. NVIDIA’s decision to target this price point is a strategic move to foster innovation across the board.

Archyde: The device runs on NVIDIA’s Linux-based DGX OS and integrates seamlessly with Windows and Mac systems. How crucial is this compatibility for developers?

Dr. Carter: Compatibility is crucial. Many developers and researchers are already working within established ecosystems on Windows or Mac. The fact that Project Digits integrates seamlessly with these systems means there’s no steep learning curve or need to overhaul existing workflows. This lowers the barrier to adoption and allows users to focus on what they do best: developing cutting-edge AI solutions.

Archyde: One of the standout features is the ability to connect two Digits units to handle models with up to 405 billion parameters. How does this scalability impact AI research?

Dr. Carter: Scalability is a game-changer. Being able to combine two units effectively doubles the computational power, enabling researchers to tackle even more ambitious projects. This is particularly important in fields like healthcare, where AI models are being used to analyze vast amounts of medical data, or in scientific research, where simulations and modeling require immense computational resources. It’s a feature that ensures Project Digits can grow with the needs of its users.

Archyde: NVIDIA CEO Jensen Huang has emphasized that Project Digits is designed for developers, researchers, and AI students. How do you see this device shaping the future of AI education?

Dr. Carter: Education is one of the most exciting applications of Project Digits. For students, access to such powerful tools can be transformative. It allows them to experiment with real-world AI models and gain hands-on experience that was previously reserved for those with access to high-end computing resources. This could lead to a new generation of AI talent that is better prepared to tackle the challenges of tomorrow.

Archyde: What do you think the broader implications of Project Digits are for the AI industry as a whole?

Dr. Carter: Project Digits represents a shift toward more accessible and decentralized AI development. By putting supercomputing power in the hands of more people, NVIDIA is fostering innovation across industries. We’re likely to see breakthroughs in areas like personalized medicine, autonomous systems, and creative AI applications. It’s an exciting time to be in this field, and Project Digits is at the forefront of this revolution.

Archyde: Thank you, Dr. Carter, for sharing your insights. It’s clear that Project Digits is poised to make a significant impact on the world of AI.

Dr. Carter: Thank you. I’m excited to see how this technology will be used to push the boundaries of what’s possible in AI. The future is bright, and Project Digits is a big part of it.

This interview was conducted by Archyde’s news editor, highlighting the transformative potential of NVIDIA Project Digits in the AI landscape. Stay tuned for more updates on this revolutionary technology.