Designing a Virtual Reality Headset for Mice

Table of Contents

- 1. Designing a Virtual Reality Headset for Mice

- 2. Building Immersive VR Experiences for Rodents: The MouseGoggles Project

- 3. Display Hardware and Software: Tailoring VR for Tiny Eyes

- 4. Optimizing for Performance and Responsiveness

- 5. Retinal Imaging and Eye Tracking in Mice

- 6. Imaging the Retina’s Response to Visual Stimuli

- 7. Eye Tracking with MouseGoggles EyeTrack

- 8. Tracking the Mouse’s Gaze: A New Method

- 9. Decoding Mouse Eye Movements in Virtual Reality

- 10. Enabling Immersive Virtual Reality

- 11. Ethical Considerations and Study Conditions

- 12. Understanding the Neural Mechanisms of Threat Perception

- 13. Subject Selection and Experimental Groups

- 14. Surgical Procedures

- 15. Surgical Procedures for Neural Recording and Imaging

- 16. Preparing for Calcium Imaging

- 17. Preparing for Electrophysiology

- 18. Two-Photon Microscopy

- 19. Visual Stimulation Protocols and Neuron Response Analysis

- 20. Receptive Field Mapping

- 21. Orientation and Direction Tuning

- 22. Spatial Frequency, Temporal Frequency, and Contrast Tuning

- 23. Advanced Locomotion and Behavioral Testing Systems

- 24. Spherical Treadmill: Immersive 360-Degree Movement

- 25. Linear Treadmill: Controlled Forward and Backward Motion

- 26. Head-Fixed Behavioral Testing: Acclimating Mice to the Environment

- 27. Decoding Hippocampal Activity During Virtual Reality Navigation

- 28. Preparing for the Virtual World

- 29. Recording Neural Activity During VR Exploration

- 30. Unraveling the Neural Code of Navigation

- 31. Navigating Virtual Landscapes: A Study in Mouse Spatial Learning

- 32. The Training Regimen

- 33. Unlocking Spatial Navigation and Fear in Mice: A Revolutionary Approach

- 34. Training Mice in Virtual Reality

- 35. Probing Fear Responses with Virtual Looms

- 36. Comparing Virtual Reality with Traditional Displays

- 37. Looking Ahead: A New Era of Neuroscience

- 38. How Scientists Study Fear Responses in Mice

- 39. The Looming Stimulus: A Window into Fear

- 40. Decoding Mouse Reactions

- 41. Investigating the Neural Mechanisms of Startle Responses in Mice

- 42. Loom-Induced Eye-Tracking and Physiological Changes

- 43. Further Insights

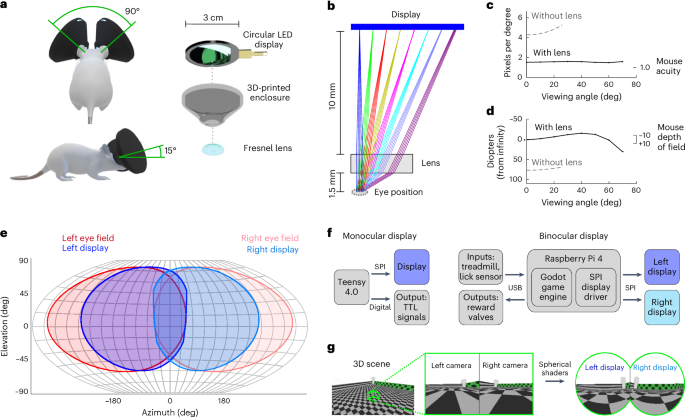

Creating a VR experience tailored for mice required a detailed understanding of their visual capabilities. Researchers used optical modeling to design a headset whose display falls within the mouse’s depth of field. This process involved simulating how light passes through a Fresnel lens and focuses on a display screen. The goal was to ensure a clear and immersive visual experience for the mice.

Previous research on mice wearing lenses in a rotational optomotor assay provided crucial insights [22]. This research demonstrated that a lens strength of +7 diopters with a display distance of approximately 30 centimeters (20–40 cm) resulted in the strongest behavioral responses. This finding suggests that a display positioned at infinity focus, as with a lens strength of +3.33 diopters at a distance of 30 cm, would likely fall within the center of a mouse’s depth of field.
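The link between the +3.33 diopter figure and the 30 cm distance follows from thin-lens arithmetic: a lens of power P focuses at 1/P meters, so a display placed 0.30 m from a lens of about 3.33 diopters sits at its focal plane and appears at infinity. The sketch below illustrates only this arithmetic under an idealized thin-lens assumption; the function names are hypothetical and it is not the optical model used by the researchers.

```python
import math


def exit_vergence(lens_power_d: float, display_distance_m: float) -> float:
    """Vergence (in diopters) of light leaving an ideal thin lens.

    Light from a display at distance d arrives with vergence -1/d and the
    lens adds its power. A result of 0 means the light is collimated,
    i.e. the display appears at infinity focus.
    """
    if display_distance_m <= 0:
        raise ValueError("display distance must be positive")
    return lens_power_d - 1.0 / display_distance_m


def apparent_distance_m(lens_power_d: float, display_distance_m: float) -> float:
    """Apparent distance of the display as seen through the lens.

    Returns math.inf for collimated light, a positive distance for a
    virtual image on the display side, and a negative value when the
    light leaving the lens is converging.
    """
    v = exit_vergence(lens_power_d, display_distance_m)
    if abs(v) < 1e-9:
        return math.inf
    return -1.0 / v


focal_power = 1.0 / 0.30  # power of a lens whose focal length is 30 cm
print(round(focal_power, 2))                    # 3.33
print(apparent_distance_m(focal_power, 0.30))   # inf
```

Passing a lens power of 0 returns the physical display distance unchanged, which is a quick sanity check on the sign convention.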

To confirm this, the team built a simulated optical model in OpticStudio using custom MATLAB scripts. They cast parallel rays from different viewing angles to simulate how the mouse’s eyes would perceive the display. This allowed them to calculate the apparent display resolution and focal distance for various viewing angles.

The results revealed how the image sharpness and focal point change with viewing angle. These details were crucial for ensuring that the VR experience remains clear and focused from different perspectives.

The team projected the visual field coverage of the display onto a sphere, taking into account the mouse’s typical head position in the headset (45° azimuth, 15° elevation). This provided a visual map of what the mouse would see while wearing the VR headset, highlighting the importance of designing a headset that aligns with the mouse’s natural field of view. All of these factors were taken into consideration to create an immersive and realistic VR experience specifically tailored for the unique visual abilities of mice.
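One way to picture the spherical projection is to treat each viewing direction as a unit vector and measure its angular offset from the display's center direction at 45° azimuth and 15° elevation. The following is a minimal sketch under stated assumptions: the axis convention and the `half_angle_deg` value of 70° are placeholders chosen for illustration, not figures from the study.

```python
import math


def direction(azimuth_deg: float, elevation_deg: float) -> tuple:
    """Unit vector for an azimuth/elevation pair.

    Assumed convention: x points straight ahead, y to the side,
    z up; azimuth rotates about z, elevation tilts toward z.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    return (math.cos(el) * math.cos(az),
            math.cos(el) * math.sin(az),
            math.sin(el))


def angle_between_deg(a: tuple, b: tuple) -> float:
    """Angle in degrees between two unit vectors."""
    dot = sum(p * q for p, q in zip(a, b))
    dot = max(-1.0, min(1.0, dot))  # guard against rounding just outside [-1, 1]
    return math.degrees(math.acos(dot))


def in_display_fov(azimuth_deg: float, elevation_deg: float,
                   center=(45.0, 15.0), half_angle_deg: float = 70.0) -> bool:
    """True if a direction lies within a circular field of view.

    half_angle_deg is a hypothetical placeholder, not a measured value.
    """
    offset = angle_between_deg(direction(azimuth_deg, elevation_deg),
                               direction(*center))
    return offset <= half_angle_deg


print(in_display_fov(45.0, 15.0))     # True: the display center itself
print(in_display_fov(-135.0, -15.0))  # False: the opposite direction
```

Sampling this test over a grid of azimuths and elevations gives a coverage map of the kind described above.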

Building Immersive VR Experiences for Rodents: The MouseGoggles Project

Mice play a crucial role in neuroscience research, allowing scientists to investigate brain function and behavior in a controlled environment. To further enhance these studies, researchers have developed cutting-edge virtual reality (VR) systems tailored specifically for rodents. One such innovative project is MouseGoggles, which aims to create immersive VR experiences for mice, opening new avenues for understanding their perception, cognition, and neural activity.

Display Hardware and Software: Tailoring VR for Tiny Eyes

MouseGoggles uses customized hardware and software to deliver VR experiences to mice. The early Mono version of MouseGoggles employed a circular, color TFT LED display connected to a Teensy 4.0 microcontroller. This setup enabled the presentation of simple visual stimuli like drifting gratings and edges, controlled through custom Arduino scripts. Later iterations, such as the Duo headset, upgraded to a Raspberry Pi 4, harnessing its processing power to render more complex 3D environments. The Godot game engine, chosen for its open-source nature, lightweight design, and powerful 3D capabilities, became the software backbone for these advancements. Custom Godot shaders were developed to map 3D scenes onto the circular displays, warping the default rendered view to match the spherical perception of the mouse. A modified open-source Raspberry Pi display driver facilitated the delivery of these rendered views to the displays.

Optimizing for Performance and Responsiveness

A key challenge in designing VR systems for mice is ensuring high framerates on relatively simple hardware. The MouseGoggles team addressed this by carefully selecting lightweight software and optimizing the display driver. The modified driver enables simultaneous control of two SPI displays, allowing for the presentation of stereoscopic 3D visuals. Further enhancements, such as implementing the “adaptive display stream updates” mode of the display driver (currently under development), could minimize latency and create even more realistic VR experiences for these tiny subjects.

Retinal Imaging and Eye Tracking in Mice

Capturing high-resolution images of the retina while concurrently tracking eye movements is crucial for studying how the visual system processes information. Researchers have developed innovative techniques to achieve both in mice, offering unprecedented insights into visual perception.

Imaging the Retina’s Response to Visual Stimuli

To visualize the retina’s response to visual stimuli, scientists adapted a surgical procedure for imaging the projection of a display onto the back of an enucleated mouse eye. The technique involves carefully removing the eyeball and surrounding tissues, leaving the intact retina exposed. The eye is then placed on a specialized holder, allowing for direct visualization of the retina. A miniature camera positioned beneath the eye captures images of the retinal surface. Visual stimuli, such as moving gratings, are presented either on a conventional monitor or using a specialized eyepiece designed for mice (MouseGoggles Mono). This setup allows researchers to observe how different visual patterns activate specific areas of the retina.

Eye Tracking with MouseGoggles EyeTrack

Simultaneously tracking eye movements during retinal imaging provides valuable information about how the mouse focuses its attention and processes visual information. The MouseGoggles EyeTrack headset enables precise eye tracking by incorporating infrared (IR) cameras into each eyepiece. Each camera module includes an IR hot mirror and IR LEDs, allowing for clear visualization of the mouse’s pupil even in the presence of the virtual reality display. A Raspberry Pi 3 single-board computer controls the IR cameras, capturing video at 30 frames per second.

Sophisticated software, DeepLabCut, analyzes the captured video to track the position and size of the pupil in real time. This data provides valuable insights into the mouse’s gaze direction, pupil dilation, and overall eye movements. By combining retinal imaging with eye tracking, researchers can gain a more thorough understanding of how the visual system works.
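DeepLabCut reports each tracked landmark as an x coordinate, a y coordinate, and a likelihood value. As a rough illustration of how pupil position and size could be derived from such output, the sketch below averages landmarks placed around the pupil edge. This is an assumption-laden example rather than the study's analysis: the function name, the idea of edge landmarks spaced roughly evenly around the pupil, and the 0.9 likelihood cutoff are all hypothetical.

```python
import math
from statistics import mean


def pupil_from_keypoints(points, min_likelihood=0.9):
    """Estimate pupil center and diameter (in pixels) from edge landmarks.

    points: iterable of (x, y, likelihood) tuples for landmarks on the
    pupil boundary, assumed to be spread roughly evenly around it.
    Returns (center_x, center_y, diameter), or None when fewer than
    three landmarks pass the likelihood cutoff (hypothetical threshold).
    """
    good = [(x, y) for x, y, p in points if p >= min_likelihood]
    if len(good) < 3:
        return None
    cx = mean(x for x, _ in good)
    cy = mean(y for _, y in good)
    # Mean distance from the center approximates the pupil radius.
    radius = mean(math.hypot(x - cx, y - cy) for x, y in good)
    return cx, cy, 2.0 * radius


# Eight synthetic landmarks on a circle of radius 10 px centered at (100, 80),
# plus one low-confidence outlier that the cutoff discards.
frame = [(100 + 10 * math.cos(k * math.pi / 4),
          80 + 10 * math.sin(k * math.pi / 4), 0.99) for k in range(8)]
frame.append((300.0, 300.0, 0.10))
print(pupil_from_keypoints(frame))
```

Discarding low-likelihood landmarks before averaging keeps occasional mislabeled points, for example from glare, from skewing the estimate.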

Tracking the Mouse’s Gaze: A New Method

To understand how mice perceive the world, scientists need to accurately track their eye movements. The study developed a method for measuring eye rotations in mice, providing valuable insights into their visual perception. The researchers employed a setup involving infrared cameras and Fresnel lenses to capture images of the mouse’s pupils. They accounted for factors that could affect the accuracy of the measurements, including radial lens distortion and the camera’s tilted view. This involved using a series of equations to convert pixel coordinates from the camera images into millimeter measurements of the pupil’s position. “Lateral movements of the pupil center in pixels were then converted to movements in millimeters using a calibrated transformation estimating the radial distortion produced by the Fresnel lens and the camera’s tilted point of view,” the study authors explained. The researchers calibrated the system using a millimeter-spaced grid positioned at the mouse’s eye. This approach allowed them to determine eye rotations even when infrared glare and reflections from the lens partially obscured the view of the pupil.

Decoding Mouse Eye Movements in Virtual Reality

To understand how mice perceive and interact with virtual environments, researchers developed a specialized virtual reality (VR) system tailored for these tiny subjects. Using a combination of hardware and software, the system tracked mouse eye movements and translated them into actions within the virtual world. Instead of relying on methods that analyze elliptical pupil distortions, the researchers measured the lateral movement of the pupil center relative to the eye center and used previously established data on mouse eye dimensions. This information allowed them to calculate the angles of eye rotation: yaw (horizontal movement) and pitch (vertical movement). The equations used to determine these angles, which factored in the pupil’s x and y coordinates and its distance from the eye center, provided a reliable basis for mapping eye movements onto the virtual environment.

Enabling Immersive Virtual Reality

To ensure that the headset’s movements mirrored those of the mouse, the researchers implemented closed-loop feedback. A sensor, either a gyroscope and accelerometer combination or a magnetometer, was attached to a rotating mount supporting a MouseGoggles Duo headset. The sensor data was processed by a Teensy 4.0 microcontroller, which then relayed the headset’s orientation to a Raspberry Pi computer. This setup allowed for seamless control of virtual movements, creating an immersive VR experience for the mice.

Ethical Considerations and Study Conditions

All animal procedures followed strict ethical guidelines and were approved by Cornell University’s Institutional Animal Care and Use Committee (protocol number 2015-0029). The mice were housed in a climate-controlled environment with a 12-hour light-dark cycle, ensuring their comfort and well-being. Behavioral experiments were conducted during the dark phase, aligning with the mice’s natural activity patterns.

Understanding the Neural Mechanisms of Threat Perception

This study investigates the neural mechanisms underlying threat perception in mice. Researchers employed a variety of techniques to explore how the brain responds to different types of threatening stimuli, such as looming visual cues and unexpected auditory sounds.

Subject Selection and Experimental Groups

The study used diverse groups of mice, including both wild-type C57BL/6 mice and genetically modified strains. For example, APPnl-g-f heterozygote mice, a model often used in Alzheimer’s disease research, were also included. Male and female mice of various ages, ranging from 2 months to 16 months old, participated in the experiments. Different experimental groups were designed to focus on specific aspects of threat perception. Some mice underwent two-photon calcium imaging and hippocampal electrophysiology to examine neural activity in response to stimuli. Other groups participated in behavioral conditioning experiments, which involved associating specific cues with potential threats.

Surgical Procedures

Prior to certain experiments, mice underwent surgical procedures to prepare them for head-fixed behavioral tasks. Anesthesia was induced with isoflurane, and mice were placed on a temperature-controlled heating pad. Using a stereotaxic apparatus, the heads of the mice were secured with ear bars, ensuring stability during surgery. Protective ointment was applied to the eyes, and Buprenex was administered for pain relief. The scalp was disinfected and numbed with lidocaine, after which a small incision was made along the midline of the skull. Once the skull was exposed, a custom-designed titanium head plate was affixed, providing a stable platform for head fixation during behavioral experiments.

Surgical Procedures for Neural Recording and Imaging

This research involved meticulous surgical procedures to prepare the mice for neural recording and imaging experiments. The procedures were designed to ensure the well-being of the mice while enabling researchers to access and monitor specific brain regions.

Preparing for Calcium Imaging

Mice prepared for calcium imaging underwent a craniotomy, a procedure to create a small opening in the skull above the primary visual cortex (V1). A virus carrying the gene for GCaMP6s, a calcium indicator protein, was then injected into layer 2/3 of V1. This allowed researchers to visually track the activity of neurons in this region by measuring changes in fluorescence intensity caused by calcium fluctuations. Following the injection, a glass window was implanted to cover the craniotomy, and a titanium headplate was secured to the skull for head fixation during imaging.

Preparing for Electrophysiology

For electrophysiology experiments, mice underwent a similar craniotomy procedure, this time targeting the dorsal CA1 region of the hippocampus, a brain area crucial for memory formation. In addition to the craniotomy, a ground screw was implanted in the opposite hemisphere’s occipital bone. A 64-channel silicon probe was then carefully positioned above the CA1 region to record the electrical activity of individual neurons. The probe was mounted on a movable microdrive, allowing researchers to adjust its depth within the brain. The craniotomy was sealed with a silicone elastomer, and a copper mesh cap was placed around the headplate to reduce electrical noise.

Two-Photon Microscopy

Two-photon microscopy was used to capture images of neuronal activity in V1. A laser emitting near-infrared light was used to excite the GCaMP6s. The emitted fluorescence signals were then captured by a sensitive detector. This technique allowed researchers to visualize the dynamic activity of neuronal populations in real time with high spatial resolution.

Visual Stimulation Protocols and Neuron Response Analysis

To probe the visual responses of neurons in the primary visual cortex (V1) of anesthetized mice, researchers employed a specialized monocular display positioned near the left eye. The display, oriented at a 45° azimuth and 0° elevation, allowed for precise control over visual stimuli presented to the contralateral hemisphere.

The initial stage involved confirming that light contamination from the display was minimal, a critical factor for accurate measurements. By comparing the flickering blue light emissions of the monocular display and a standard LED monitor positioned at a distance, researchers could ensure the visual stimulation was primarily originating from the intended source.

To locate V1 neurons that responded to visual stimuli within the central region of the monocular display, a drifting grating pattern was employed. This pattern, presented in a small square region within the display’s center, consisted of blue square waves with 100% contrast, a temporal frequency of 1 Hz, and a spatial frequency of 20 pixels/12.7°. Once neurons with receptive fields (RFs) within the central display region were identified, a comprehensive visual stimulation protocol was implemented. This protocol, designed to characterize the neurons’ responses to various visual features, encompassed three primary measures, described below.

Receptive Field Mapping

RF mapping was conducted using a four-direction bar sweep stimulus presented across a 5 × 5 grid spanning the center of the display. This allowed researchers to pinpoint the precise regions within the visual field to which each neuron responded.

Orientation and Direction Tuning

To assess the neurons’ preferences for specific orientations and directions of motion, drifting gratings were presented at 12 different angles (ranging from 0–330° in 30° increments). The gratings were displayed within a rotating square whose orientation matched the direction of the grating.

Spatial Frequency, Temporal Frequency, and Contrast Tuning

The neurons’ sensitivities to variations in spatial frequency, temporal frequency, and contrast were evaluated using a set of systematically varied unidirectional (rightward) drifting gratings. After concluding the visual stimulation trials, calcium imaging data was processed using Suite2p to analyze the activity patterns of the recorded neurons.

Unraveling the Visual Code: How Mice See the World

Researchers have made significant strides in understanding how the brains of mice process visual information. By using advanced imaging techniques and meticulous analysis, scientists have been able to map the receptive fields (RFs) of individual neurons in the mouse visual cortex, revealing how these cells contribute to visual processing.

Mapping the Visual Landscape

The study involved recording the activity of hundreds of neurons in the visual cortex of mice while presenting them with a variety of visual stimuli. These stimuli included bars of light at different orientations, spatial frequencies, and contrasts. The researchers used two-photon microscopy to capture the activity of individual neurons in real time. This technique allowed them to observe how the activity of these cells changed in response to different visual inputs. “We were able to precisely measure the responses of individual neurons to these stimuli,” explains the lead researcher. “This allowed us to map the receptive fields of these cells, essentially defining the specific regions of the visual field that each neuron is sensitive to.”

Decoding Visual Preferences

The researchers discovered a remarkable diversity in the receptive fields of these neurons. Some cells were highly selective for specific orientations, responding strongly only to bars of light oriented in a particular direction. Others were tuned to specific spatial frequencies, firing most robustly to bars with a certain width and spacing. “This finding highlights the remarkable specialization of neurons in the visual cortex,” notes the lead researcher. “Each cell acts like a tiny detector, tuned to a specific aspect of the visual scene.”

Understanding Visual Acuity

The researchers also investigated the spatial resolution of the mouse visual system. They found that the size of receptive fields varied considerably across different neurons, with some cells having very small receptive fields and others having much larger ones. “The size of a neuron’s receptive field gives us a clue about its role in visual processing,” explains the lead researcher. “Cells with smaller receptive fields are thought to be involved in detecting fine details, while those with larger receptive fields might potentially be more sensitive to broader features in the visual scene.” These findings provide valuable insights into the fundamental mechanisms underlying visual perception in mice. By understanding how neurons in the visual cortex process information, researchers hope to gain a deeper understanding of the complexities of vision in both animals and humans.

Advanced Locomotion and Behavioral Testing Systems

This research used sophisticated setups to study locomotor behavior and sensory perception in mice. Two primary systems, a spherical treadmill and a linear treadmill, allowed for precise control and monitoring of movement within virtual reality environments.

Spherical Treadmill: Immersive 360-Degree Movement

The spherical treadmill system, based on a proven design, consisted of a 20-cm diameter Styrofoam ball suspended by compressed air. The ball’s movement, representing the mouse’s locomotion, was tracked by two optical flow sensors. This data was processed by an Arduino Due microcontroller and relayed to a Raspberry Pi or PC, driving corresponding movements in the virtual reality environment. A specialized lick port equipped with a capacitive sensor was integrated into the system to deliver water rewards and monitor licking behavior. The exact timing of licks provided valuable insights into the animal’s decision-making processes.

Linear Treadmill: Controlled Forward and Backward Motion

Designed for more linear movement, the linear treadmill was custom-built using an existing treadmill platform. 3D-printed wall plates accommodated a larger head mount, and custom code converted the treadmill’s physical movement into emulated computer mouse movements. This signal controlled the animal’s movement within the virtual environment. As with the spherical treadmill, careful calibration ensured a direct correlation between real-world movement and virtual movement.

Head-Fixed Behavioral Testing: Acclimating Mice to the Environment

Before undergoing behavioral tests, mice were carefully acclimated to the experimental room for at least a day. This habituation period minimized stress and allowed the animals to become comfortable with their surroundings.

Decoding Hippocampal Activity During Virtual Reality Navigation

Imagine peering into the brain’s mapmaking center as a mouse navigates a virtual world. This study does just that, exploring how hippocampal neurons encode spatial information during virtual reality (VR) experiences.

Preparing for the Virtual World

To delve into the intricate workings of the hippocampus, researchers trained mice on custom-built treadmills, either spherical or linear. These treadmills allowed the mice to move freely in a virtual environment projected through VR headsets, providing a controlled yet immersive experience. Before entering the virtual realm, mice underwent a period of habituation to both the treadmill and the VR headset, ensuring they were comfortable and acclimated to their surroundings.

Recording Neural Activity During VR Exploration

As the mice explored virtual worlds, researchers simultaneously recorded the activity of hippocampal neurons using electrophysiological techniques. This allowed them to capture the firing patterns of individual neurons in real time as the mice navigated the virtual landscapes. Software was used to synchronize the neural data with the mouse’s position within the virtual environment, creating a detailed map of hippocampal activity associated with specific locations.

Unraveling the Neural Code of Navigation

Using advanced computational techniques, researchers identified and analyzed the activity of individual neurons. They then constructed spatial tuning curves, illustrating how the firing rate of each neuron changed as the mouse moved through different virtual locations. These tuning curves provided valuable insights into how hippocampal neurons encode spatial information, helping researchers decipher the brain’s internal map of the virtual world.

Navigating Virtual Landscapes: A Study in Mouse Spatial Learning

This study investigated spatial learning in mice using a virtual reality environment. Ten mice were trained to navigate a virtual linear track, learning to associate specific locations with liquid rewards. The track, designed using the Godot video game engine, was 1.5 meters long and featured visually distinct wall sections to provide spatial cues. A black tower located at 0.9 meters served as an additional distal cue. Mice were head-fixed and their movements controlled by a spherical treadmill, allowing them to walk forward and backward along the track while maintaining a fixed heading. Two cohorts of mice were used, with rewards placed at 0.5 meters for cohort A (mice 1–5) and 1.0 meters for cohort B (mice 6–10).

The Training Regimen

Before training commenced, mice underwent a three-day habituation period, receiving 1.2 ml of water daily to maintain their body weight. During training, a five-day linear track place-learning protocol was implemented:

- Days 1–2: Rewards were automatically delivered upon reaching the designated reward location.

- Day 3: For the initial three trials, rewards were delivered upon reaching the reward location. Subsequently, rewards were only provided when the mouse actively licked at the lick port at the reward location.

- Days 4–5: Rewards were exclusively delivered upon licking at the reward location.
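The five-day schedule above amounts to a small decision rule, which can be sketched as follows. This is only an illustration of the protocol as described: the function and constant names are hypothetical, trials are assumed to be numbered from 1 within each day, and how the mouse's arrival at the reward location is detected is left outside the sketch.

```python
# Reward positions along the 1.5 m track, taken from the cohort description.
REWARD_POSITION_M = {"A": 0.5, "B": 1.0}


def reward_due(day: int, trial: int, at_reward_location: bool, licked: bool) -> bool:
    """Decide whether a water reward is delivered.

    day: training day, 1 to 5.
    trial: trial number within the day, starting at 1 (an assumption).
    at_reward_location: True when the mouse is at the reward location.
    licked: True when a lick was detected at the lick port.
    """
    if day not in (1, 2, 3, 4, 5):
        raise ValueError("training day must be between 1 and 5")
    if not at_reward_location:
        return False
    if day <= 2:
        return True        # days 1-2: automatic delivery on arrival
    if day == 3 and trial <= 3:
        return True        # day 3: first three trials are still automatic
    return licked          # remainder of day 3, days 4-5: lick required


print(reward_due(1, 1, True, False))  # True
print(reward_due(3, 4, True, False))  # False
print(reward_due(3, 4, True, True))   # True
```

Keeping the rule in one function makes the transition on day 3, from automatic to lick-triggered rewards, easy to audit.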

Unlocking Spatial Navigation and Fear in Mice: A Revolutionary Approach

Mice have long been instrumental in understanding the intricacies of the mammalian brain. Now, cutting-edge virtual reality (VR) technology is offering an unprecedented view into their cognitive abilities. Researchers are using a novel VR system to study how mice navigate complex environments and react to perceived threats. This innovative tool promises to shed light on the neural mechanisms underlying spatial learning and fear conditioning.

Training Mice in Virtual Reality

The VR system centers around a lightweight headset called MouseGoggles Duo, designed specifically for rodents. This device allows researchers to immerse mice in virtual environments while accurately tracking their movements. To acclimate the mice to the VR experience, they were gradually introduced to the system, initially receiving rewards for simply exploring the virtual space. The training progressed in stages, introducing increasingly complex challenges. Mice learned to navigate a circular track in the virtual world, eventually requiring them to actively seek out rewards placed at specific locations. This process allowed researchers to study how mice learn spatial relationships and form mental maps of their surroundings.

Probing Fear Responses with Virtual Looms

Beyond spatial navigation, the VR system also proved effective in studying fear responses. Researchers presented mice with virtual looming stimuli, simulating the approach of a predator. A dark circular object appeared in the virtual sky, growing larger as it approached the mouse, triggering a natural startle response. By carefully controlling the timing and trajectory of these virtual threats, researchers gain valuable insights into the neural circuits underlying fear conditioning. This method offers a controlled and repeatable way to investigate how fear memories are formed and processed in the brain.

Comparing Virtual Reality with Traditional Displays

To evaluate the effectiveness of the VR system, researchers compared it to a traditional panoramic display. Both systems presented the same visual stimuli, but the VR headset provided a more immersive experience, allowing mice to move freely within the virtual environment. The results demonstrated the superiority of VR in engaging the mice and eliciting more naturalistic behaviors. This finding highlights the importance of providing realistic sensory experiences when studying animal cognition.

Looking Ahead: A New Era of Neuroscience

The development of this cutting-edge VR system marks a significant advance in neuroscience research. By providing a versatile tool to study complex cognitive processes in mice, this technology promises to unlock a deeper understanding of the brain and open new avenues for exploring the nature of perception, learning, and fear.

How Scientists Study Fear Responses in Mice

Understanding how animals, including humans, respond to fear is a crucial area of scientific research. Researchers often use mice as models to investigate fear and anxiety because their brains and behaviors share similarities with ours. One common method involves presenting mice with a startling stimulus, such as a looming object, and observing their reactions.

The Looming Stimulus: A Window into Fear

In a recent study, scientists used a looming dark spot on a screen to simulate a threatening approach. This “loom” grew rapidly in size and then disappeared, all over the course of about a second. The researchers carefully recorded the mice’s responses to this stimulus, focusing on behaviors that indicated fear or anxiety.

Decoding Mouse Reactions

To ensure accurate analysis, two independent observers, blinded to the experimental setup, analyzed video recordings of the mice during the looming presentations. They were instructed to look for specific fear-related behaviors and to rate their confidence in observing each reaction on a scale of 0 to 3. A score of 0 indicated that the reaction did not occur, while a score of 3 signified high confidence that it took place. The observers looked for a range of reactions, including startle responses (bursts of movement or kicks), tensing up (arching the back or curling the tail), stopping in their tracks, running, turning abruptly, and grooming. This approach gave the researchers a structured view of how mice perceive and respond to threatening situations.

Investigating the Neural Mechanisms of Startle Responses in Mice

This study aimed to explore the neural underpinnings of startle responses in mice, using both virtual reality (VR) and projector-based visual stimuli. Researchers first observed startle reactions in mice wearing a VR headset, characterized by a distinct tensing up and a rapid freeze response. To quantify these reactions, they used a scoring system that took both the startle and the tensing behaviors into account. Because the two responses were difficult to tell apart, they were combined into a single “reaction score,” set to the higher of the two individual scores. A trial was classified as a startle response if the average reaction score from the two independent scorers was 1.5 or higher. The researchers then used an exponential decay function to model the proportion of startle responses observed across repeated presentations of the looming stimulus. This decay model helped show how mice habituated to the stimulus over time.

Loom-Induced Eye-Tracking and Physiological Changes
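The scoring rule and habituation fit described above can be sketched in Python. This is a minimal illustration, not the study's analysis code: the function names, the example ratings, and the log-linear least-squares fit are assumptions. The source does not say how the exponential decay was actually fitted or how repetitions were indexed.

```python
import math


def reaction_score(startle, tensing):
    """Combine the startle and tensing ratings (each 0-3) by taking the higher one."""
    return max(startle, tensing)


def is_startle(scorer_a, scorer_b, threshold=1.5):
    """Classify one trial from two scorers' (startle, tensing) ratings.

    The trial counts as a startle response when the mean of the two
    scorers' reaction scores is at least `threshold`.
    """
    mean_score = (reaction_score(*scorer_a) + reaction_score(*scorer_b)) / 2
    return mean_score >= threshold


def fit_exponential_decay(proportions):
    """Fit p(n) = a * exp(-k * n) for repetitions n = 0, 1, 2, ...

    Assumption: ordinary least squares on ln(p), which is a simple
    stand-in for whatever fitting routine the study used. Repetitions
    with a proportion of zero are skipped because ln(0) is undefined.
    Returns the tuple (a, k).
    """
    points = [(n, math.log(p)) for n, p in enumerate(proportions) if p > 0]
    if len(points) < 2:
        raise ValueError("need at least two non-zero proportions")
    count = len(points)
    mean_x = sum(x for x, _ in points) / count
    mean_y = sum(y for _, y in points) / count
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return math.exp(intercept), -slope


# Hypothetical ratings for one trial: (startle, tensing) from each scorer.
print(is_startle((3, 1), (2, 2)))

# Hypothetical proportion of startle responses on repetitions 0..3.
amplitude, decay_rate = fit_exponential_decay([0.8, 0.4, 0.2, 0.1])
print(amplitude, decay_rate)
```

A larger decay rate `k` corresponds to faster habituation. A nonlinear least-squares fit on the raw proportions would weight early repetitions differently and may be preferable when some proportions are zero.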

Building on these initial observations, the researchers explored the physiological and behavioral responses of head-fixed mice walking on a treadmill while looming stimuli were presented through a VR headset. Eye-tracking data were synchronized with the stimuli, allowing the researchers to analyze changes in walking speed, eye pitch angle, and pupil diameter in response to each loom. These changes were averaged across multiple repetitions and conditions (looming from the left, right, or center), revealing patterns of habituation and adaptation. The study used Cuzick’s trend test to determine whether these measurements showed statistically significant trends across repetitions, providing insight into the dynamic nature of the mice’s responses.

Further Insights
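For readers unfamiliar with Cuzick’s trend test mentioned above, the following Python sketch shows the standard form of the statistic: a rank-based test for a monotonic trend across ordered groups. It is an illustration only. The function names and example values are hypothetical, the group scores default to 1, 2, 3, and so on, and no tie correction is applied to the variance. The source does not describe how the study configured the test.

```python
import math


def average_ranks(values):
    """Return 1-based ranks for `values`, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = shared_rank
        i = j + 1
    return ranks


def cuzick_trend_test(groups, scores=None):
    """Cuzick's test for trend across ordered groups.

    `groups` is a list of lists of measurements, ordered by repetition.
    Returns (z, two_sided_p) using the normal approximation and
    no tie correction (a simplifying assumption).
    """
    if scores is None:
        scores = list(range(1, len(groups) + 1))
    pooled = [value for group in groups for value in group]
    ranks = average_ranks(pooled)
    n_total = len(pooled)

    # T = sum over groups of (group score * sum of ranks in that group).
    t_stat = 0.0
    start = 0
    for score, group in zip(scores, groups):
        t_stat += score * sum(ranks[start:start + len(group)])
        start += len(group)

    weighted_n = sum(s * len(g) for s, g in zip(scores, groups))
    weighted_sq = sum(s * s * len(g) for s, g in zip(scores, groups))
    expected = (n_total + 1) * weighted_n / 2
    variance = (n_total + 1) / 12 * (n_total * weighted_sq - weighted_n ** 2)

    z = (t_stat - expected) / math.sqrt(variance)
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical pupil-diameter changes for three successive loom repetitions.
z, p = cuzick_trend_test([[0.9, 0.8], [0.6, 0.5], [0.3, 0.2]])
print(z, p)
```

A negative `z` indicates that the measurement tends to decrease across repetitions, which is the direction expected under habituation.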

Detailed information regarding the research design can be found in the supplementary materials. This multifaceted approach, which combines behavioral observations, VR technology, and eye tracking, provides a valuable framework for understanding the complex neural mechanisms underlying startle responses.
