After winning the 2023 edition of the Challenge organized by the AID (Defense Innovation Agency), the Friendly Hackers team from Thales stands out again in 2024 with a particularly ingenious technology: a metamodel for detecting AI-generated images (deepfakes).

The Thales metamodel is built on an aggregation of models, each assigning an authenticity score to each image.

Such AI-generated content (images, video and audio) is increasingly used not only for disinformation but also for manipulation and identity fraud.

MEUDON, France, November 22, 2024 -/African Media Agency (AMA)/- At the European Cyber Week, held in Rennes from November 19 to 21, 2024 with artificial intelligence as its central theme, Thales teams took part in the AID Challenge and finished in second place with a metamodel for detecting AI-generated images. At a time when disinformation is spreading through the media and every sector of the economy as AI techniques become widespread, this tool aims to counter image manipulation in use cases such as identity fraud.

AI-generated images are produced with modern AI platforms (Midjourney, DALL-E, Firefly, etc.). AI technologies have now advanced so far that it is almost impossible for the naked eye to tell a real image from an AI-generated one, and the same holds for video, even in real time. An AI-generated image can therefore open the door to malicious actors, who can use it for identity theft and fraud. Some studies predict that within a few years, deepfakes could cause massive financial losses through identity theft and fraud. Gartner has estimated that in 2023, around 20% of cyberattacks could include deepfake content as part of disinformation or manipulation campaigns. Its report highlights the rise of deepfakes in financial fraud and advanced phishing attacks.

« The Thales metamodel for detecting deepfakes responds in particular to the problem of identity fraud and the morphing technique. Aggregating several methods, based on neural networks, noise detection and spatial frequencies, will help better secure the growing number of solutions that require identity verification through biometric recognition. This is a remarkable technological advance, resulting from the expertise of Thales AI researchers, » says Christophe Meyer, Senior AI Expert and Technical Director at cortAIx, Thales’ AI accelerator.
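The press release states that each model in the metamodel assigns an authenticity score to an image and that the metamodel weighs each model's strengths and weaknesses, but it does not say how the scores are combined. As a minimal sketch under that gap, the code below assumes a simple reliability-weighted average of per-detector scores. `DetectorResult`, `aggregate_authenticity`, `classify`, the reliability weights and the 0.5 threshold are all hypothetical illustrations, not Thales's actual method.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple


@dataclass
class DetectorResult:
    """Output of one hypothetical detector in the ensemble."""
    name: str           # illustrative label, e.g. "clip", "dnf" or "dct"
    score: float        # authenticity score in [0, 1]; 1.0 means "looks real"
    reliability: float  # assumed weight, e.g. the detector's validation accuracy


def aggregate_authenticity(results: Sequence[DetectorResult]) -> float:
    """Combine per-detector authenticity scores with a reliability-weighted mean."""
    total_weight = sum(r.reliability for r in results)
    if total_weight <= 0:
        raise ValueError("need at least one detector with a positive reliability weight")
    return sum(r.score * r.reliability for r in results) / total_weight


def classify(results: Sequence[DetectorResult], threshold: float = 0.5) -> Tuple[str, float]:
    """Return a verdict and the aggregated score; `threshold` is an assumed cut-off."""
    score = aggregate_authenticity(results)
    verdict = "authentic" if score >= threshold else "likely AI-generated"
    return verdict, score


if __name__ == "__main__":
    # Made-up scores for a single image, purely to show the mechanics.
    results = [
        DetectorResult("clip", score=0.8, reliability=0.9),
        DetectorResult("dnf", score=0.2, reliability=0.7),
        DetectorResult("dct", score=0.3, reliability=0.6),
    ]
    print(classify(results))
```

Here the two less confident but more numerous "fake" signals pull the weighted score below the threshold even though the most reliable detector leans "real". Fixed weights are the simplest choice; since the release also mentions decision trees, a real metamodel might instead learn the combination from labeled data.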

The Thales metamodel draws on machine learning techniques, decision trees and an evaluation of each model's strengths and weaknesses to analyze an image's authenticity. It combines several models, including:

- The CLIP (Contrastive Language–Image Pre-training) method, which links images and text by learning how an image and its textual description correspond. In other words, CLIP learns to associate visual elements (such as a photo) with the words that describe them. To detect deepfakes, CLIP can analyze images and evaluate how well they match text descriptions, thus identifying inconsistencies or visual anomalies.

- The DNF method, which turns current image-generation architectures (“diffusion” models) against the images they produce. Concretely, a diffusion model learns to estimate the noise contained in an image; starting from pure noise and repeatedly removing the estimated noise, it “hallucinates” content from nothing. This noise estimate can also be used to detect AI-generated images.

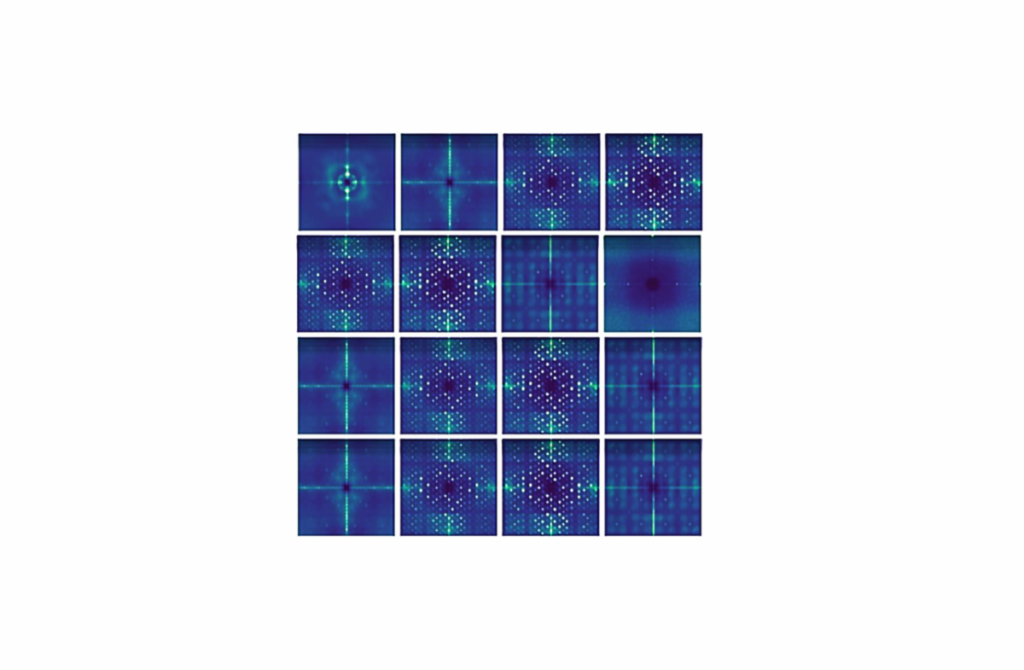

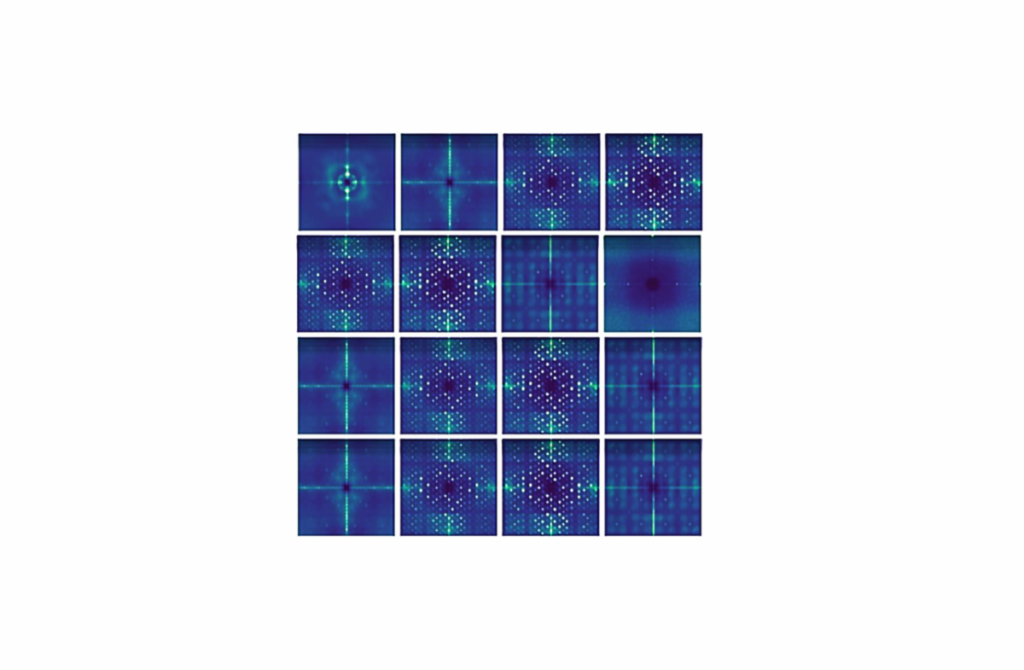

- The DCT (Discrete Cosine Transform) method analyzes an image's spatial frequencies. By transforming the image from the spatial domain (pixels) to the frequency domain (a sum of waves), it makes it possible to detect subtle anomalies in the image's structure that are often invisible to the naked eye and that appear when deepfakes are generated.
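To make the frequency-domain idea concrete, here is a minimal sketch in plain Python (no imaging libraries) that computes an orthonormal 2D DCT-II of a small pixel block and measures what share of its non-DC energy lies in high-frequency coefficients. The press release does not describe which DCT features Thales uses; `dct2`, `high_frequency_ratio` and the `cutoff` parameter are hypothetical, and a practical detector would typically feed DCT spectra from many blocks into a trained classifier rather than rely on a single hand-set ratio.

```python
import math
from typing import List

Matrix = List[List[float]]


def dct2(block: Matrix) -> Matrix:
    """Orthonormal 2D DCT-II of a square n x n block of pixel intensities."""
    n = len(block)

    def alpha(k: int) -> float:
        # Normalization factors that make the transform orthonormal.
        return math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)

    coeffs = [[0.0] * n for _ in range(n)]
    for u in range(n):          # vertical frequency index (rows)
        for v in range(n):      # horizontal frequency index (columns)
            s = 0.0
            for x in range(n):
                for y in range(n):
                    s += (block[x][y]
                          * math.cos((2 * x + 1) * u * math.pi / (2 * n))
                          * math.cos((2 * y + 1) * v * math.pi / (2 * n)))
            coeffs[u][v] = alpha(u) * alpha(v) * s
    return coeffs


def high_frequency_ratio(block: Matrix, cutoff: int) -> float:
    """Share of non-DC spectral energy in coefficients with u + v >= cutoff."""
    coeffs = dct2(block)
    n = len(coeffs)
    total = high = 0.0
    for u in range(n):
        for v in range(n):
            if u == 0 and v == 0:
                continue  # skip the DC term, which only encodes mean brightness
            energy = coeffs[u][v] ** 2
            total += energy
            if u + v >= cutoff:
                high += energy
    return high / total if total > 0 else 0.0


if __name__ == "__main__":
    n = 8
    gradient = [[float(x + y) for y in range(n)] for x in range(n)]
    checker = [[255.0 if (x + y) % 2 == 0 else 0.0 for y in range(n)] for x in range(n)]
    print("gradient:", high_frequency_ratio(gradient, cutoff=n))
    print("checkerboard:", high_frequency_ratio(checker, cutoff=n))
```

A smooth gradient block keeps essentially all of its energy in low frequencies (ratio near 0), while a pixel-level checkerboard, an exaggerated stand-in for the fine-grained regularities a detector might look for, puts most of its energy near the highest frequencies. Because the transform is orthonormal, total energy is preserved, so the ratio compares like with like across blocks.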

The Friendly Hackers team behind this invention is part of cortAIx, Thales’ AI accelerator, which has more than 600 AI researchers and engineers, including 150 based on the Saclay plateau and working on critical systems. The Group’s Friendly Hackers have developed a toolbox, the BattleBox, designed to make it easier to assess how robust AI-based systems are against attacks that exploit the intrinsic vulnerabilities of different AI models (including Large Language Models), such as adversarial attacks or attacks aimed at extracting sensitive information. To counter these attacks, it proposes suitable countermeasures such as unlearning, federated learning, model watermarking and model robustification.

In 2023, the Group won the CAID (Conference on Artificial Intelligence for Defense) challenge organized by the DGA, which consisted of identifying certain data used to train an AI model, even after that data had been deleted from the system to preserve its confidentiality.

Distributed by African Media Agency (AMA) for Thales.

About Thales

Thales (Euronext Paris: HO) is a global leader in high technologies specializing in three business sectors: Defense & Security, Aeronautics & Space, and Cybersecurity & Digital Identity.

It develops products and solutions that contribute to a safer, more environmentally friendly and more inclusive world.

The Group invests nearly 4 billion euros per year in Research & Development, particularly in key areas of innovation such as AI, cybersecurity, quantum, cloud technologies and 6G.

Thales has nearly 81,000 employees in 68 countries. In 2023, the Group achieved a turnover of 18.4 billion euros.

CONTACT

Press relations


Source: African Media Agency (AMA)

2024-11-22 13:01:00



**Interview with Christophe Meyer, Senior AI Expert and Technical Director at cortAIx, Thales**

**Interviewer:** Christophe, congratulations on Thales achieving second place in the AID Challenge with your innovative metamodel for detecting AI-generated images! Can you tell us more about what led to the development of this metamodel?

**Christophe Meyer:** Thank you! The goal behind the metamodel was to tackle the growing concerns surrounding identity fraud and misinformation driven by AI-generated content, particularly deepfakes. As you know, the rapid advancement of AI technologies has made it increasingly difficult for individuals to differentiate between real and artificially created images or videos. We wanted to address this challenge by providing a robust solution that can analyze the authenticity of such content.

**Interviewer:** That sounds vital, especially in today’s digital landscape. How does the metamodel work to detect deepfakes and other AI-generated images?

**Christophe Meyer:** The metamodel uses an aggregation of various detection techniques. Each model contributes an authenticity score for an image, based on a different analysis method. For instance, we leverage the CLIP method to correlate visual data with textual descriptions, which helps us identify inconsistencies. Additionally, we use diffusion models to estimate noise characteristics that can signal AI-generated content. Finally, the DCT method allows us to examine spatial frequencies and detect subtle anomalies that might go unnoticed by the human eye.

**Interviewer:** It sounds like a multi-faceted approach! What are some implications of this technology for industries or individuals?

**Christophe Meyer:** This technology can have far-reaching implications. For industries relying on identity verification, such as banking, healthcare, and travel, our metamodel enhances security against identity theft. For individuals, it could build greater trust in online content, which is crucial given the rise of fake news and misinformation campaigns. The ability to quickly and accurately assess the authenticity of images can help mitigate the risks associated with deepfakes in various sectors.

**Interviewer:** With disinformation becoming a significant threat, how does Thales plan to continue evolving its solutions in response to these challenges?

**Christophe Meyer:** Our ongoing research and investment in AI are fundamental to our strategy. We aim to refine our detection capabilities, perhaps integrating more advanced machine learning techniques as they emerge. We’re committed to collaborating with other experts in the field and continually engaging with the challenges presented by evolving AI technologies to ensure that our solutions stay effective against new threats.

**Interviewer:** Thank you for sharing your insights, Christophe! It’s great to see Thales at the forefront of combating such critical issues with technology.

**Christophe Meyer:** Thank you for having me! We’re excited about the future and our role in creating a more secure digital environment.