Depression is a prevalent mental illness that affects an estimated 280 million people worldwide. In response to this public health challenge, researchers at Kaunas University of Technology (KTU) have developed an artificial intelligence (AI) model that identifies depression by analysing both speech patterns and neural brain activity. This multimodal approach combines two distinct types of data to provide a more nuanced and objective assessment of a person’s emotional state. The advance may mark a significant shift in how depression is diagnosed.

“Depression is one of the most common mental disorders, with devastating consequences for both the individual and society, so we are developing a new, more objective diagnostic method that could become accessible to everyone in the future.”

Rytis Maskeliūnas, professor at KTU and one of the authors of the invention

Impressive accuracy using voice and brain activity data

This combination of speech and brain activity data achieved an impressive 97.53 per cent accuracy in diagnosing depression, significantly outperforming alternative methods. “This is because the voice adds data to the study that we cannot yet extract from the brain,” explains Maskeliūnas.
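Why can combining modalities beat either one alone? The article does not say at which point the KTU model merges the two data streams, so the sketch below rests on an assumption: it illustrates feature-level (early) fusion, in which a feature vector from each modality is concatenated and a single classifier weighs them together. The function name, feature values, weights and bias are all hypothetical; in the study the features would come from the modified DenseNet-121, not from hard-coded lists.

```python
import math


def fuse_and_classify(eeg_features, audio_features, weights, bias):
    """Feature-level (early) fusion followed by a logistic classifier.

    Hypothetical illustration: the EEG and audio feature vectors are
    concatenated into one joint vector, so a single set of weights can
    draw on information from both modalities. Returns the estimated
    probability of the positive (depressed) class.
    """
    fused = list(eeg_features) + list(audio_features)
    if len(fused) != len(weights):
        raise ValueError("weights must match the fused feature length")
    logit = sum(w * x for w, x in zip(weights, fused)) + bias
    return 1.0 / (1.0 + math.exp(-logit))  # sigmoid maps the logit into (0, 1)


# Hypothetical embeddings: two EEG features and one audio feature.
p = fuse_and_classify([0.2, 0.8], [0.6], weights=[1.0, -0.5, 2.0], bias=-0.5)
print(round(p, 4))  # 0.6225
```

Because the classifier sees both vectors at once, an audio feature can shift the decision even when the EEG features alone are ambiguous, which is the intuition behind Maskeliūnas’s point that the voice adds information the brain signal does not yet provide.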

Maskeliūnas emphasises that the EEG dataset used in the study was obtained from the Multimodal Open Dataset for Mental Disorder Analysis (MODMA), because the KTU research group works in computer science rather than medicine.

MODMA EEG data were recorded for five minutes while participants were awake and resting with their eyes closed. In the audio part of the experiment, participants took part in a question-and-answer session and several reading and picture-description tasks designed to capture their natural language and cognitive state.

AI will need to learn how to justify the diagnosis

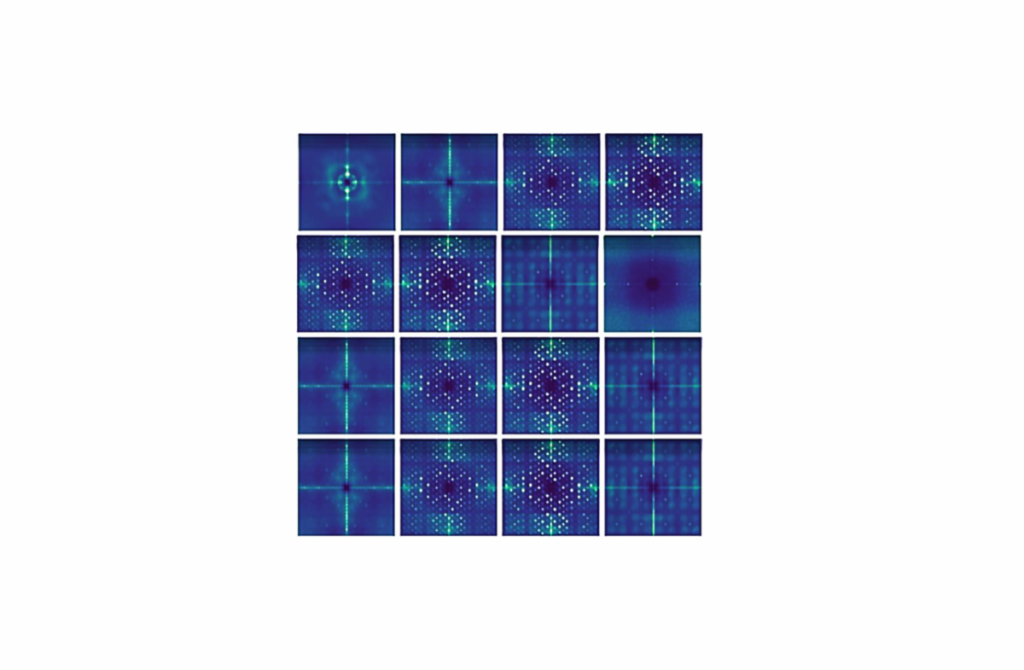

The collected EEG and audio signals were transformed into spectrograms, images that show how a signal’s frequency content changes over time. Noise filters and pre-processing methods were applied to reduce noise and make the recordings comparable, and a modified DenseNet-121 deep-learning model was then used to identify signs of depression in the images. In the EEG spectrograms, the images reflected how brain-activity waveforms changed over time; in the audio spectrograms, they showed how the frequency and intensity of the speech were distributed over time.
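The spectrogram step can be illustrated with a short-time Fourier transform (STFT). The sketch below is a minimal, dependency-free Python version built on stated assumptions: the Hann window, 64-sample frames with 50% overlap, min-max scaling and the 128 Hz sampling rate are illustrative choices, not the parameters reported in the study. A real pipeline would use an FFT library (for example `scipy.signal.spectrogram`) and add filtering such as band-pass or notch filters before this step.

```python
import cmath
import math


def hann(n: int) -> list[float]:
    """Hann window of length n; tapering frame edges reduces spectral leakage."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / (n - 1)) for i in range(n)]


def spectrogram(signal: list[float], frame_len: int, hop: int) -> list[list[float]]:
    """Magnitude spectrogram via a short-time Fourier transform (naive DFT).

    Returns one row per time frame and one column per frequency bin
    (0 .. frame_len // 2), i.e. how spectral content changes over time.
    """
    window = hann(frame_len)
    frames = []
    for start in range(0, len(signal) - frame_len + 1, hop):
        chunk = [s * w for s, w in zip(signal[start:start + frame_len], window)]
        # Keep only non-negative frequencies: a real signal's spectrum is symmetric.
        row = []
        for k in range(frame_len // 2 + 1):
            acc = sum(x * cmath.exp(-2j * math.pi * k * n / frame_len)
                      for n, x in enumerate(chunk))
            row.append(abs(acc))
        frames.append(row)
    return frames


def min_max_normalize(spec: list[list[float]]) -> list[list[float]]:
    """Scale all values into [0, 1] so recordings with different gains are comparable."""
    lo = min(min(r) for r in spec)
    hi = max(max(r) for r in spec)
    span = hi - lo or 1.0  # avoid division by zero on a constant input
    return [[(v - lo) / span for v in r] for r in spec]


# Usage: a synthetic 10 Hz sine, two seconds long, at a hypothetical 128 Hz rate.
fs = 128
sig = [math.sin(2 * math.pi * 10 * t / fs) for t in range(fs * 2)]
spec = min_max_normalize(spectrogram(sig, frame_len=64, hop=32))
peak_bin = max(range(len(spec[0])), key=lambda k: spec[0][k])
print(peak_bin * fs / 64)  # 10.0, the frequency (Hz) of the strongest bin
```

Each row of the result is one column of the spectrogram image; stacking the rows gives the time-by-frequency picture that an image model such as DenseNet-121 can consume.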

In the future, this AI model could speed up the diagnosis of depression, even allow it to be carried out remotely, and reduce the risk of subjective evaluations. Getting there will require further clinical trials and improvements to the software.

“The main problem with these studies is the lack of data because people tend to remain private about their mental health matters,” he says.

Another important point raised by Maskeliūnas, a professor in the KTU Department of Multimedia Engineering, is that the algorithm needs to be improved so that it is not only accurate but also tells the medical professional what led to a given diagnostic result. “The algorithm still has to learn how to explain the diagnosis in a comprehensible way,” he says.

According to Maskeliūnas, similar requirements are becoming common as demand grows for AI solutions that directly affect people in areas such as healthcare, finance, and the legal system.

This is why explainable artificial intelligence (XAI), which aims to explain to the user why the model makes certain decisions and to increase their trust in the AI, is now gaining momentum.
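Explainable-AI methods vary widely, and the article does not say which one KTU intends to use. As one illustrative, model-agnostic example, occlusion sensitivity hides parts of an input image and measures how much the model’s output drops. The sketch below is hypothetical throughout: `toy_score` stands in for a trained classifier and the 4x4 grid stands in for a spectrogram.

```python
def occlusion_map(image, score_fn, patch: int, baseline: float = 0.0):
    """Model-agnostic occlusion sensitivity.

    Slides a patch x patch square of `baseline` values over the image and
    records how much the model's score drops when each region is hidden.
    Larger drops mark regions the model relies on more heavily.
    """
    rows, cols = len(image), len(image[0])
    base_score = score_fn(image)
    heat = [[0.0] * cols for _ in range(rows)]
    for r0 in range(0, rows, patch):
        for c0 in range(0, cols, patch):
            # Copy the image and blank out one patch.
            occluded = [row[:] for row in image]
            for r in range(r0, min(r0 + patch, rows)):
                for c in range(c0, min(c0 + patch, cols)):
                    occluded[r][c] = baseline
            drop = base_score - score_fn(occluded)
            # Attribute the score drop to every cell in the hidden patch.
            for r in range(r0, min(r0 + patch, rows)):
                for c in range(c0, min(c0 + patch, cols)):
                    heat[r][c] = drop
    return heat


# Hypothetical stand-in for a trained classifier: its "depression score" is the
# mean of the top two rows of a 4x4 spectrogram.
def toy_score(img):
    return sum(img[0] + img[1]) / 8


spec = [[1.0, 1.0, 1.0, 1.0],
        [1.0, 1.0, 1.0, 1.0],
        [0.5, 0.5, 0.5, 0.5],
        [0.5, 0.5, 0.5, 0.5]]
heat = occlusion_map(spec, toy_score, patch=2)
for row in heat:
    print(row)
```

The resulting heat map lights up only the top half, the region the toy model actually uses. Overlaid on a real spectrogram, such a map could show a clinician which time-frequency regions drove a prediction, though occlusion is only one of several possible XAI techniques.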

Journal reference:

Yousufi, M., et al. (2024). Multimodal Fusion of EEG and Audio Spectrogram for Major Depressive Disorder Recognition Using Modified DenseNet121. Brain Sciences. doi.org/10.3390/brainsci14101018.

AI Diagnosis: The New Therapist in Town

Ah, depression – the silent assassin of joy that’s all too common these days, affecting a staggering 280 million people worldwide! And while it’s no laughing matter, researchers at Kaunas University of Technology are trying to shine some light into the gloom with an AI model that identifies depression by analyzing speech and brain activity. Yes, folks, it seems we’ve reached a point where even our emotions must submit to the judgement of artificial intelligence!

“We are developing a new, more objective diagnostic method that could become accessible to everyone in the future.”

— Rytis Maskeliūnas, Professor at KTU

Now, let’s face it. Our traditional methods of diagnosing depression have often resembled a game of charades, but the genius boffins at KTU want to up the ante. Why rely on just one type of data when you can combine the golden duo of speech and brain activity? It’s like making a cocktail – sometimes you need a splash of this and a dash of that to get the right mix. And the result? An astounding 97.53% accuracy in diagnosing depression! Take that, previous methods – you’ve officially been outperformed!
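For the curious: accuracy is simply the share of all cases a classifier gets right, and on its own it hides how many depressed people were missed. Here is a minimal Python sketch with made-up confusion-matrix counts (hypothetical, not the study’s numbers) that puts accuracy next to sensitivity and specificity:

```python
def classification_metrics(tp: int, tn: int, fp: int, fn: int) -> dict[str, float]:
    """Standard binary-classification metrics from confusion-matrix counts.

    tp: depressed participants correctly flagged
    tn: healthy participants correctly cleared
    fp: healthy participants wrongly flagged
    fn: depressed participants missed
    """
    total = tp + tn + fp + fn
    return {
        "accuracy": (tp + tn) / total,   # share of all predictions that were correct
        "sensitivity": tp / (tp + fn),   # share of depressed cases caught (recall)
        "specificity": tn / (tn + fp),   # share of healthy cases correctly cleared
    }


# Hypothetical counts for illustration only; not taken from the KTU study.
metrics = classification_metrics(tp=45, tn=50, fp=2, fn=3)
print({k: round(v, 4) for k, v in metrics.items()})
# {'accuracy': 0.95, 'sensitivity': 0.9375, 'specificity': 0.9615}
```

Two models with the same accuracy can differ noticeably in sensitivity, which matters when a missed diagnosis is the costlier mistake.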

The Impressive Duo: Voice and Brain Activity

So how do they achieve such accuracy? Well, according to Maskeliūnas, voice data adds information that brain activity alone cannot yet reveal. Much like your mate who won’t stop talking about their cat – sometimes, you just need additional context to understand the big picture!

But before you think, “Oh great! Now an AI will know I’m sad before I do!” – fear not. Privacy is still a priority! Face data might reveal how severe a person’s emotional state is, but the researchers are steering clear of facial recognition technology that can violate privacy. Instead, they’re focusing on combining data from sources that, although still intrusive, are a bit less creepy.

From Brain Waves to AI Waves

In a fascinating turn of events, the team has transformed EEG and audio signals into visual spectrograms, sort of like how modern art turns a few brush strokes into a pricey exhibit! They’ve fed this data into a modified DenseNet-121 deep-learning model to identify signs of depression. Each input image? A mirror reflecting the mind’s waves and the sweet symphony of speech.

However, the process isn’t all sunshine and rainbows. There’s the nagging issue of data scarcity; people are understandably tight-lipped about their mental health, making it tougher to gather enough meaningful data. It’s like trying to run a deli in a vegan commune – you’re going to get some raised eyebrows.

The Quest for Understandable AI

And here’s the kicker: This AI model must learn not only to diagnose but also to justify its decisions. I mean, let’s be real; no one wants to hear “You’re depressed!” from a robot without a solid reason. Explainable AI—yep, that’s a thing—is gaining traction to ensure that our silicon pals can elucidate their thought processes. After all, we prefer our therapists to give us some insight into why we’re feeling down, rather than an enigmatic shrug and a withdrawal of service!

As Maskeliūnas muses, the growing demand for AI solutions in healthcare, finance, and law is paving the way for explainable AI. We want our machines to not just make decisions, but to share the reasoning behind those decisions. It’s like having a personal trainer who yells at you for eating that donut: “You can’t just tell me to stop, mate! I need a full instructional manual!”

Conclusion: A Glimmer of Hope in a Gloomy Outlook

So, while we navigate the murky waters of mental health diagnosis, it seems KTU is steering us toward a brighter future. A future where diagnosing depression might just be as simple as answering a few questions and sitting through a five-minute EEG – and without a hefty bill at the end. But for now, I’ll be keeping an eye on my AI therapist. Because let’s face it, if it starts suggesting I watch cat videos, I might need to worry!

For those of you hoping to leverage this innovation, remember: mental health is important, and if an AI can make it easier to diagnose, then maybe, just maybe, we’ve unlocked a new tool in the battle against the dark clouds of depression.

How can AI systems be developed to ensure they provide clear rationales for their conclusions in mental health assessments?

The growing demand for AI solutions in healthcare, finance, and law underscores the need for transparent and explainable systems. No one wants a mysterious, all-knowing AI passing judgment on their mental state without a clear rationale to back it up. Developing an AI that can articulate the reasons behind its conclusions is therefore paramount.

The advancements at Kaunas University of Technology represent a promising step forward in identifying and diagnosing depression with greater accuracy. By harnessing the power of AI to analyze both speech and brain activity, researchers are paving the way for a future where mental health diagnoses could become more accessible and reliable. If successful, this technology could revolutionize the way we understand and treat mental health disorders, making the journey toward emotional wellness just a little bit clearer.