2024-06-21 09:24:58

The frenzied buying of NVIDIA GPUs by companies and governments around the world may be coming to an end.

That is because something interesting is happening.

"KARAKURI LM 8x7B Instruct v0.1", the LLM that Karakuri announced yesterday, is a very efficient Japanese LLM. Karakuri took the industry by storm when it released an extremely high-performance 70B model in January this year. This latest LLM is the first open LLM tuned for "instruction execution" in Japanese. It also supports RAG, a technique that combines multiple pieces of knowledge to derive an answer closer to the correct one, and Function Calling, a technique that lets the LLM request external information gathering and calculations to supplement missing knowledge as needed.
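As a rough illustration of the two techniques named above, here is a minimal sketch in plain Python. It does not use Karakuri's model or any real LLM API: the document list, the `add` tool, the JSON call format, and every function name are hypothetical, chosen only to show the shape of the idea.

```python
import json

# Hypothetical knowledge base for the retrieval step (RAG).
DOCUMENTS = [
    "Trainium is an AI training chip developed by Amazon.",
    "Inferentia2 is an inference chip developed by Amazon.",
]


def retrieve(query, documents, top_k=1):
    """Toy retrieval: rank documents by the number of words shared with the query."""
    words = set(query.lower().split())
    ranked = sorted(
        documents,
        key=lambda doc: len(words & set(doc.lower().split())),
        reverse=True,
    )
    return ranked[:top_k]


def build_rag_prompt(query, documents):
    """Prepend the retrieved text so the model can answer from it."""
    context = "\n".join(retrieve(query, documents))
    return f"Context:\n{context}\n\nQuestion: {query}"


# Hypothetical tool registry for the function-calling step.
def add(a, b):
    return a + b


TOOLS = {"add": add}


def execute_tool_call(raw_call):
    """Run a tool call that a model emitted as JSON (assumed format)."""
    call = json.loads(raw_call)
    return TOOLS[call["name"]](**call["arguments"])


print(build_rag_prompt("what is the training chip", DOCUMENTS))
print(execute_tool_call('{"name": "add", "arguments": {"a": 2, "b": 3}}'))
```

In a real system the retrieval step would typically use embeddings rather than word overlap, and the JSON call would come from the model's own output rather than a hard-coded string.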

SoftBank's SB Intuitions also recently released a 65B-scale open source LLM, but even compared with that, the current "KARAKURI LM 8x7B Instruct v0.1" stands out by a head or two.

Notably, the computing cost of developing Karakuri's LLM, which has the highest performance in Japanese, is said to be only 750,000 yen. That figure may be a mistake for 7.5 million yen (in fact, the 70B model released in January was said to cost around 10 million yen), but even so it is an overwhelmingly low cost. A single NVIDIA H100 GPU currently sells for more than 5 million yen.

So how did Karakuri create its own LLM with the highest Japanese scores on such a low budget? Because it does not use NVIDIA GPUs.

Karakuri uses Trainium, a specialized AI training chip developed by Amazon.

Although it is a surprisingly obscure chip that few people have heard of, Amazon has released a training software package for Trainium, and Karakuri's blog also provides a detailed "recipe for how to create an LLM."

Companies all over the world are now being told to create LLMs, and for that, as everyone knows, large numbers of NVIDIA GPUs have been an absolute necessity.

NVIDIA's monopoly eventually made it the most valuable company in the world. However, this moment may turn out to be the peak of its glory.

Moreover, Amazon is developing Inferentia2 as a dedicated inference chip, and in fact, on Karakuri's demonstration website anyone can experience the performance of this LLM and of Amazon's Inferentia2 chips. It produces results as fast as GPT-4o and is quite good, to say the least.

Even more surprising is a paper announced about two weeks ago called "Mixture of Agents" (MoA).

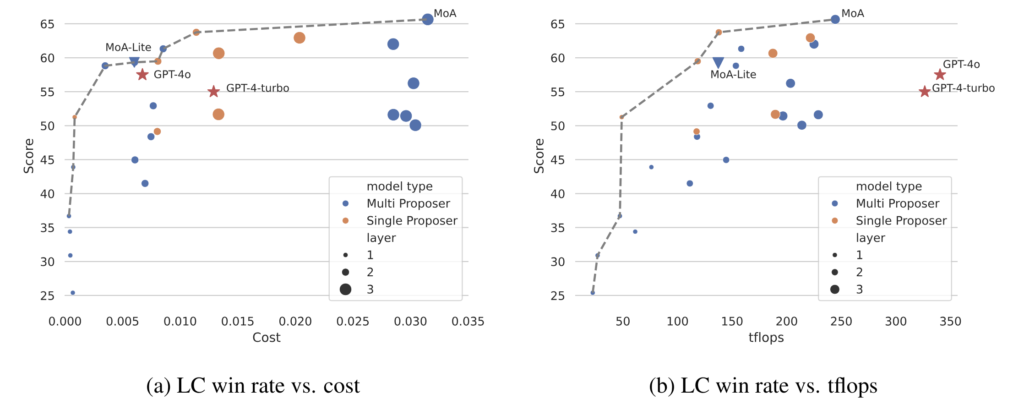

The paper claims that simply by using a combination of eight publicly available open LLMs, it obtained benchmark results that exceed the performance of GPT-4o alone.

The source code has also been released. I am not surprised by most things, but this still astonished me.

To summarize the paper: it combines four to six open LLMs, assigns each LLM the role of "proposer" or "aggregator" for a question, and reports that by having them discuss, the quality of the answers ultimately exceeded GPT-4o.
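The proposer and aggregator flow can be sketched in a few lines. This is only an illustrative outline under stated assumptions, not the paper's implementation: the "models" are stub functions instead of real LLM calls, and the names `make_stub_model`, `build_aggregate_prompt`, and `mixture_of_agents` are hypothetical.

```python
def make_stub_model(name):
    """Return a hypothetical stand-in for an LLM that tags answers with its name."""
    def model(prompt):
        # Echo only the first line (the question) to keep the output short.
        return f"[{name}] answer to: {prompt.splitlines()[0]}"
    return model


def build_aggregate_prompt(question, references):
    """Attach the previous round's answers to the question as a numbered list."""
    numbered = "\n".join(f"{i + 1}. {ref}" for i, ref in enumerate(references))
    return f"{question}\nResponses from models:\n{numbered}"


def mixture_of_agents(question, proposers, aggregator, rounds=1):
    """Run the proposers for several rounds, then let the aggregator synthesize."""
    references = []
    for _ in range(rounds):
        # After the first round, every proposer also sees the earlier answers.
        if references:
            prompt = build_aggregate_prompt(question, references)
        else:
            prompt = question
        references = [propose(prompt) for propose in proposers]
    return aggregator(build_aggregate_prompt(question, references))


proposers = [make_stub_model("model-a"), make_stub_model("model-b")]
aggregator = make_stub_model("aggregator")
print(mixture_of_agents("What is Trainium?", proposers, aggregator, rounds=2))
```

Swapping the stubs for real model calls is the only change needed to try the idea; which models serve as proposers and which as aggregator is a design choice the sketch leaves open.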

In addition, two configurations built by combining LLMs are proposed: MoA-Lite, which matches the performance of GPT-4o with much higher computational efficiency, and MoA, which has lower computational cost and higher performance than GPT-4o.

Depending on the LLMs you want to combine, some are better suited to being proposers and others to being aggregators, and the paper also includes notes on these combinations.

What surprises me most, however, is that this is the entire body of the program that achieves this remarkable cost-performance and performance improvement (excerpted from https://github.com/togethercomputer/MoA/blob/ with the author's comments added).

```python
import copy
from functools import partial

import datasets

# Excerpt: data, console, rounds, process_fn, temperature, max_tokens and
# num_proc are defined elsewhere in the repository.
eval_set = datasets.Dataset.from_dict(data)

with console.status("[bold green]Querying all the models...") as status:  # Query all the models
    for i_round in range(rounds):  # Repeat for several rounds
        eval_set = eval_set.map(
            partial(
                process_fn,  # process_fn just has the model run inference
                temperature=temperature,
                max_tokens=max_tokens,
            ),
            batched=False,
            num_proc=num_proc,
        )
        references = [item["output"] for item in eval_set]
        data["references"] = references
        eval_set = datasets.Dataset.from_dict(data)


def inject_references_to_messages(
    messages,
    references,
):
    messages = copy.deepcopy(messages)

    # This prompt summarizes the opinions gathered from multiple LLMs and draws a conclusion.
    system = f"""You have been provided with a set of responses from various open-source models to the latest user query. Your task is to synthesize these responses into a single, high-quality response. It is crucial to critically evaluate the information provided in these responses, recognizing that some of it may be biased or incorrect.

Responses from models:"""
    # In translation: you are given a set of responses to the latest user query
    # from various open source models. Your job is to synthesize them into a
    # single high-quality response. Keep in mind that the information in these
    # answers may be biased or inaccurate, so provide a complete, accurate and
    # comprehensive reply to the instruction, making sure it is well structured,
    # consistent and adheres to the highest standards of accuracy and reliability.

    for i, reference in enumerate(references):
        # Loop body is missing from the excerpt; presumably it appends each reference.
        system += f"\n{i + 1}. {reference}"

    if messages[0]["role"] == "system":
        messages[0]["content"] += "\n\n" + system
    else:
        # Branch body is missing from the excerpt; presumably it adds a new system message.
        messages = [{"role": "system", "content": system}] + messages

    return messages
```
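To see what the message-injection helper does on its own, here is a condensed, self-contained restatement that can be run without any model. The shortened `AGGREGATOR_PROMPT` is a placeholder for the longer prompt in the excerpt, and the loop body and the `else` branch are assumptions, since the excerpt does not show them.

```python
import copy

# Shortened placeholder for the aggregator prompt (not the full original text).
AGGREGATOR_PROMPT = (
    "Synthesize these responses into a single, high-quality response.\n\n"
    "Responses from models:"
)


def inject_references_to_messages(messages, references):
    # Work on a copy so the caller's message list is left untouched.
    messages = copy.deepcopy(messages)
    system = AGGREGATOR_PROMPT
    # Assumed loop body: list each earlier answer under the prompt.
    for i, reference in enumerate(references):
        system += f"\n{i + 1}. {reference}"
    if messages[0]["role"] == "system":
        # Extend an existing system message.
        messages[0]["content"] += "\n\n" + system
    else:
        # Assumed branch body: otherwise prepend a new system message.
        messages = [{"role": "system", "content": system}] + messages
    return messages


original = [{"role": "user", "content": "What is Trainium?"}]
merged = inject_references_to_messages(
    original, ["An AWS chip.", "A training accelerator."]
)
print(merged[0]["content"])
print(len(original), len(merged))
```

The answers from the previous round simply become a numbered list inside the system message, which is why the whole technique fits in so little code.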

That is all there is to it.

DiscoPOP, which Sakana.ai announced last week, also surprised me because its core is almost entirely a prompt, but this is even simpler than that.

While the world's largest companies waste hundreds of billions or even trillions of yen on GPUs, capable companies are trying to make significant progress through simple combinations of existing technologies.

It is an interesting moment.

I also plan to try combining Japanese open LLMs with Mixture of Agents.

It would be interesting to combine this with DiscoPOP and let GPT or another LLM evaluate the best combination of Japanese LLMs.

In any era, people without wisdom can only waste money.
